Automotive SPICE

Quality Management in the Automotive Industry

Automotive SPICE®

Process Reference Model

Process Assessment Model

Version 3.1

| Field | Value |
|---|---|
| Title: | Automotive SPICE Process Assessment / Reference Model |
| Author(s): | VDA QMC Working Group 13 / Automotive SIG |
| Version: | 3.1 |
| Date: | 2017-11-01 |
| Status: | PUBLISHED |
| Confidentiality: | Public |
| Revision ID: | 656 |

Copyright notice

This document is a revision of the Automotive SPICE process assessment model 2.5 and the process reference model 4.5, which has been developed under the Automotive SPICE initiative by consensus of the car manufacturers within the Automotive Special Interest Group (SIG), a joint special interest group of Automotive OEM, the Procurement Forum and the SPICE User Group.

It has been revised by the Working Group 13 of the Quality Management Center (QMC) in the German Association of the Automotive Industry with the representation of members of the Automotive Special Interest Group, and with the agreement of the SPICE User Group. This agreement is based on a validation of the Automotive SPICE 3.0 version regarding any ISO copyright infringement and the statements given from VDA QMC to the SPICE User Group regarding the current and future development of Automotive SPICE.

This document reproduces relevant material from:

ISO/IEC 33020:2015 Information technology – Process assessment – Process measurement framework for assessment of process capability

ISO/IEC 33020:2015 provides the following copyright release statement:

‘Users of this International Standard may reproduce subclauses 5.2, 5.3, 5.4 and 5.6 as part of any process assessment model or maturity model so that it can be used for its intended purpose.’

ISO/IEC 15504-5:2006 Information Technology – Process assessment – Part 5: An exemplar Process Assessment Model

ISO/IEC 15504-5:2006 provides the following copyright release statement:

‘Users of this part of ISO/IEC 15504 may freely reproduce the detailed descriptions contained in the exemplar assessment model as part of any tool or other material to support the performance of process assessments, so that it can be used for its intended purpose.’

Relevant material from one of the mentioned standards is incorporated under the copyright release notice.

Acknowledgement

The VDA, the VDA QMC and the Working Group 13 explicitly acknowledge the high quality work carried out by the members of the Automotive Special Interest Group. We would like to thank all involved people, who have contributed to the development and publication of Automotive SPICE®.

Derivative works

You may not alter, transform, or build upon this work without the prior consent of both the SPICE User Group and the VDA Quality Management Center. Such consent may be given provided ISO copyright is not infringed.

The detailed descriptions contained in this document may be incorporated as part of any tool or other material to support the performance of process assessments, so that this process assessment model can be used for its intended purpose, provided that any such material is not offered for sale.

All distribution of derivative works shall be made at no cost to the recipient.

Distribution

The Automotive SPICE® process assessment model may only be obtained by download from the www.automotivespice.com web site.

It is not permitted for the recipient to further distribute the document.

Change requests

Any problems or change requests should be reported through the defined mechanism at the www.automotivespice.com web site.

Trademark

Automotive SPICE® is a registered trademark of the Verband der Automobilindustrie e.V. (VDA). For further information about Automotive SPICE®, visit www.automotivespice.com.

Document history

| Version | Date | By | Notes |
|---|---|---|---|
| 2.0 | 2005-05-04 | AutoSIG / SUG | DRAFT RELEASE, pending final editorial review |
| 2.1 | 2005-06-24 | AutoSIG / SUG | Editorial review comments implemented; updated to reflect changes in FDIS 15504-5 |
| 2.2 | 2005-08-21 | AutoSIG / SUG | Final checks implemented: FORMAL RELEASE |
| 2.3 | 2007-05-05 | AutoSIG / SUG | Revision following CCB: FORMAL RELEASE |
| 2.4 | 2008-08-01 | AutoSIG / SUG | Revision following CCB: FORMAL RELEASE |
| 2.5 | 2010-05-10 | AutoSIG / SUG | Revision following CCB: FORMAL RELEASE |
| 3.0 | 2015-07-16 | VDA QMC WG13 | Changes: See release notes |
| 3.1 | 2017-11-01 | VDA QMC WG13 | Changes: See www.automotivespice.com |

Release notes

Version 3.0 of the process assessment model incorporates the following major changes:

Chapter 1 |

Editorial adaption to ISO/IEC 330xx series, Notes regarding combined PRM/PAM in this document |

Chapter 2 |

Adaption to ISO/IEC 330xx series |

Chapter 3 |

Text optimized for better understanding and adapted to ISO/IEC 330xx series. |

Chapter 4 |

Renaming ENG to SYS/SWE, Structure of old ENG Processes changed, Rework of AS 4.5 process reference model and AS 2.5 process performance indicators focusing on a set of highly significant processes assessed within the automotive industry (VDA Scope). |

Chapter 5 |

Adaption based on AS 2.5 to the measurement framework of ISO/IEC 33020 |

Annex A |

Conformity statement adapted to ISO/IEC 33004 |

Annex B |

Modifications on work product characteristics according to the changes in chapter 4. |

Annex C |

Update to recent standards. Introduction of specific terms used in AS 3.0 |

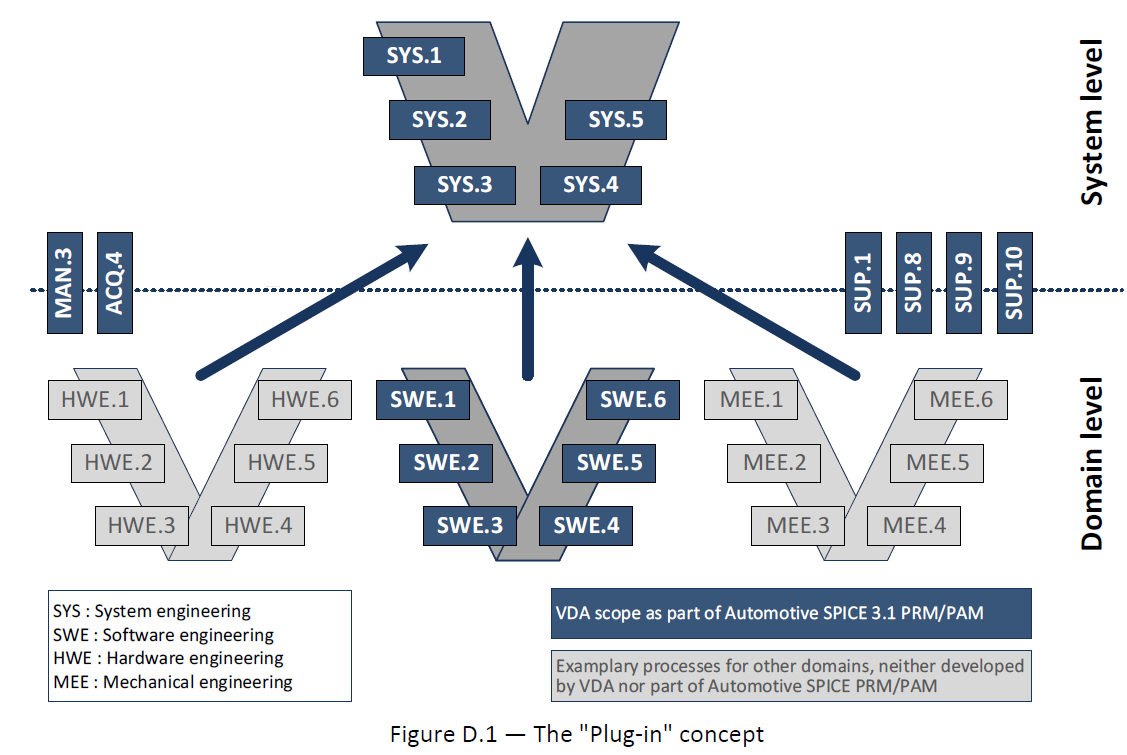

Annex D |

Added the major concepts used for AS 3.0, incorporated Annex E of AS 2.5 |

Annex E |

Updated references to other standards |

Version 3.1 of the process assessment model incorporates minor changes. Please refer to www.automotivespice.com for a detailed change log.

Table of contents

List of Figures

Figure 1 — Process assessment model relationship ………………………………………………………. 11

Figure 2 — Automotive SPICE process reference model - Overview ………………………………………….. 12

Figure 3 — Relationship between assessment indicators and process capability………………………………. 22

Figure 4 — Possible levels of abstraction for the term “process” ………………………………………… 23

Figure 5 — Performing a process assessment for determining process capability …………………………….. 23

Figure D.1 — The “Plug-in” concept …………………………………………………………………… 122

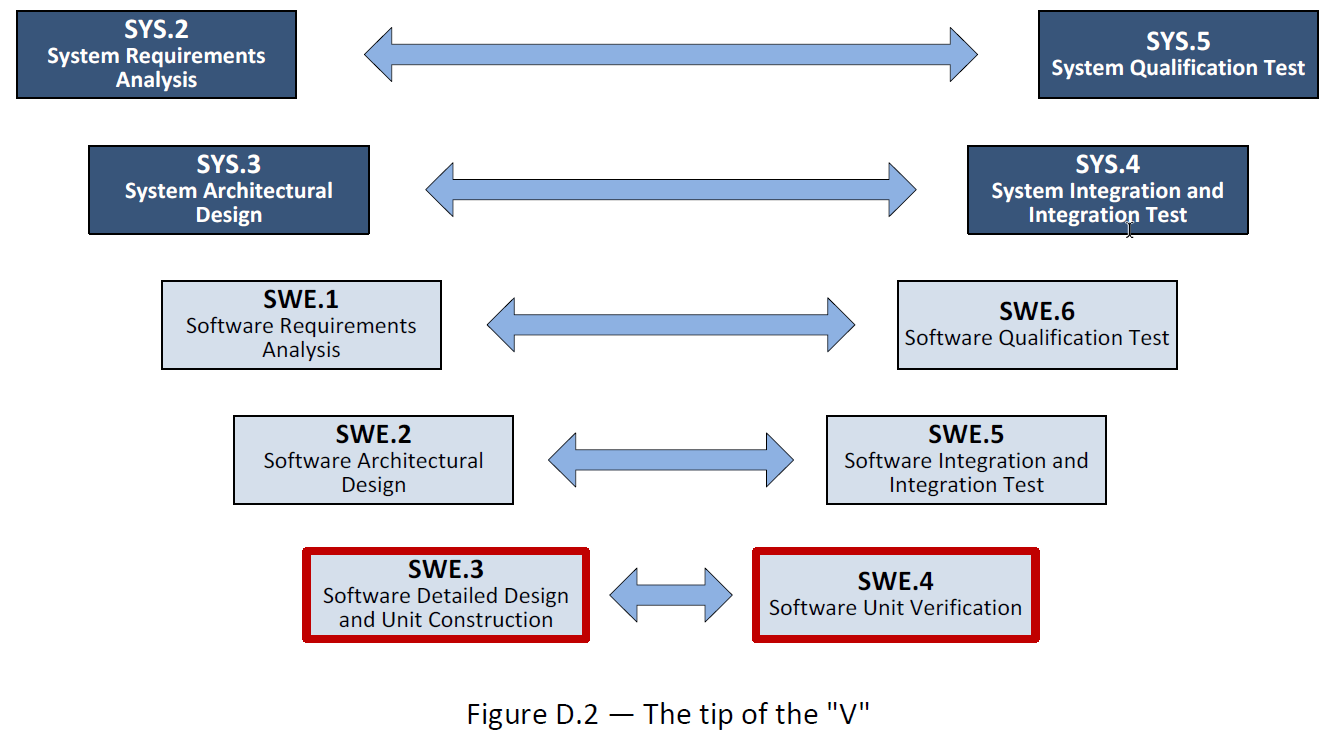

Figure D.2 — The tip of the “V” ……………………………………………………………………… 123

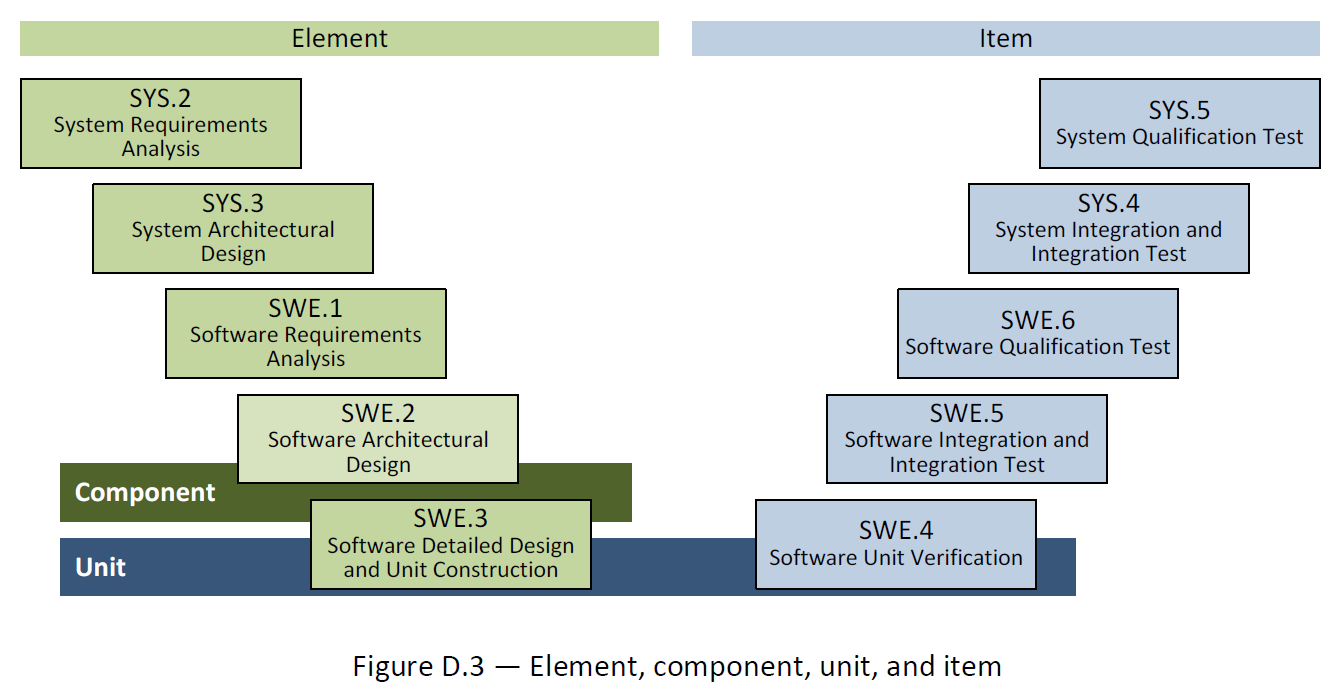

Figure D.3 — Element, component, unit, and item ……………………………………………………….. 123

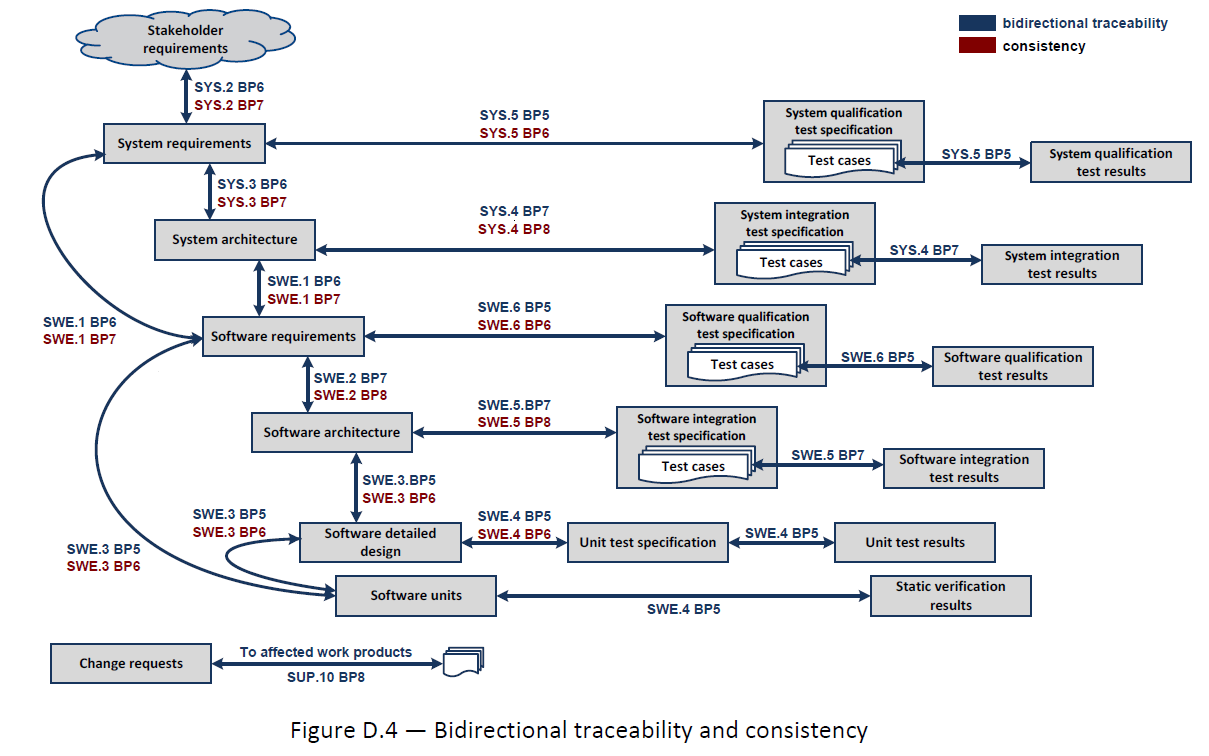

Figure D.4 — Bidirectional traceability and consistency ………………………………………………… 124

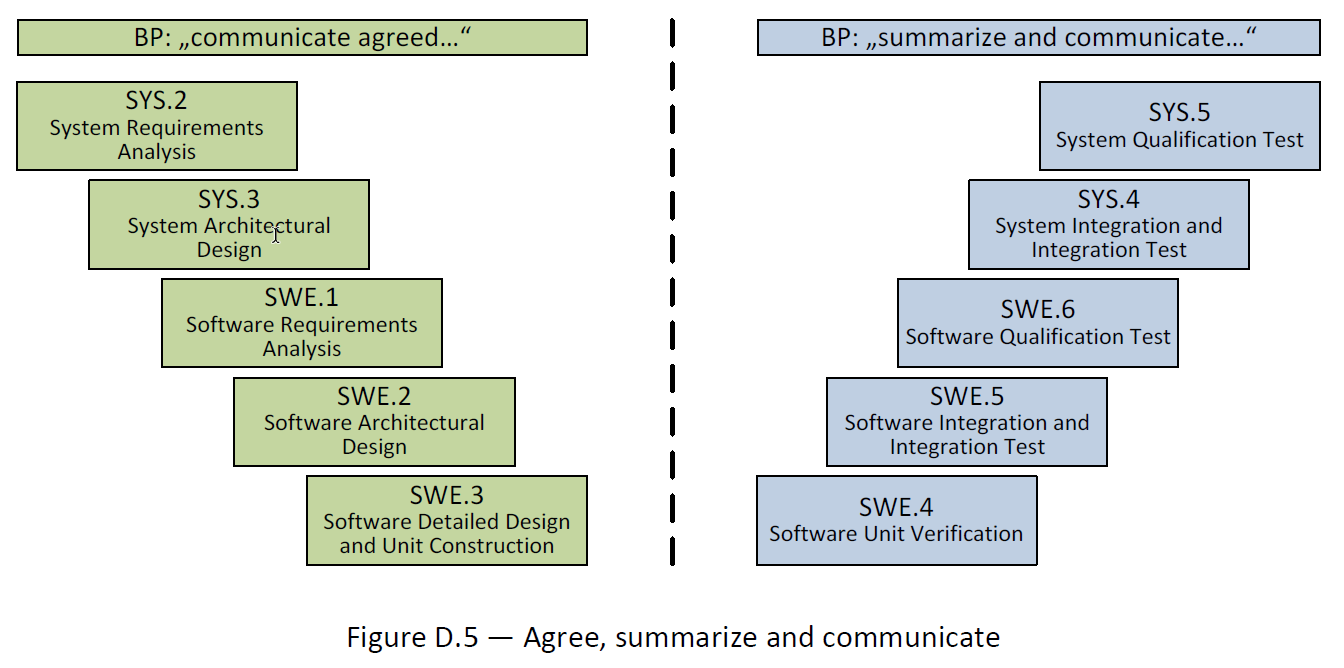

Figure D.5 — Agree, summarize and communicate …………………………………………………………. 125

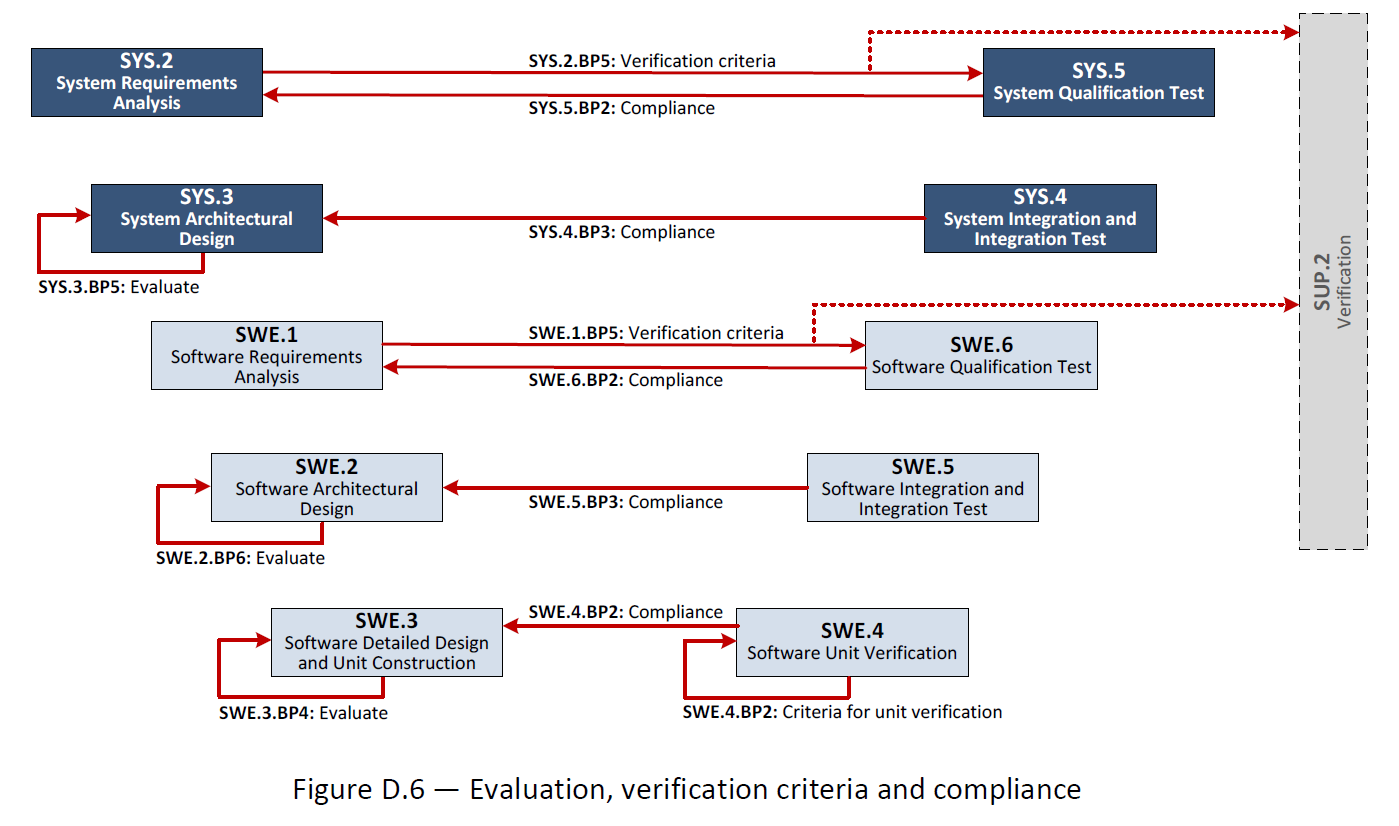

Figure D.6 — Evaluation, verification criteria and compliance …………………………………………… 126

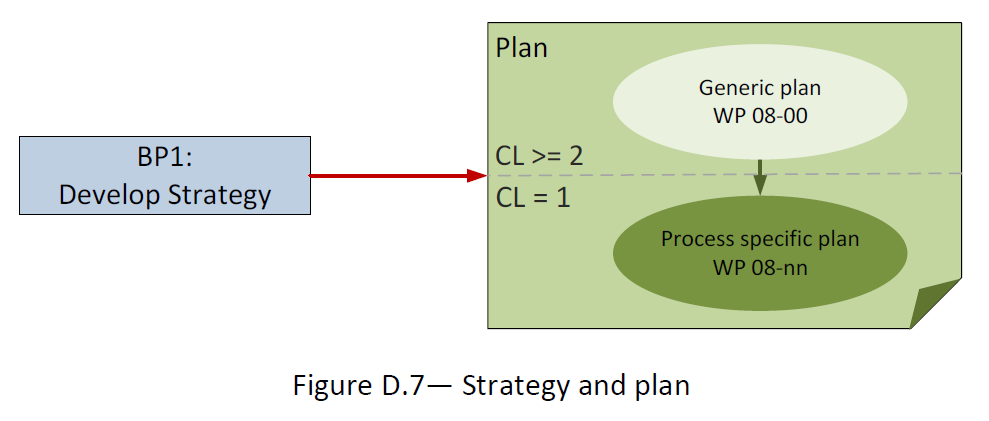

Figure D.7 — Strategy and plan ………………………………………………………………………. 127

List of Tables

Table 1 — Abbreviation List

Table 2 — Primary life cycle processes – ACQ process group

Table 3 — Primary life cycle processes – SPL process group

Table 4 — Primary life cycle processes – SYS process group

Table 5 — Primary life cycle processes – SWE process group

Table 6 — Supporting life cycle processes - SUP process group

Table 7 — Organizational life cycle processes - MAN process group

Table 8 — Organizational life cycle processes - PIM process group

Table 9 — Organizational life cycle processes - REU process group

Table 10 — Process capability levels according to ISO/IEC 33020

Table 11 — Process attributes according to ISO/IEC 33020

Table 12 — Rating scale according to ISO/IEC 33020

Table 13 — Rating scale percentage values according to ISO/IEC 33020

Table 14 — Refinement of rating scale according to ISO/IEC 33020

Table 15 — Refined rating scale percentage values according to ISO/IEC 33020

Table 16 — Process capability level model according to ISO/IEC 33020

Table 17 — Template for the process description

Table B.1 — Structure of WPC tables

Table B.2 — Work product characteristics

Table C.1 — Terminology

Table E.1 — Reference standards

1. Introduction

1.1. Scope

Process assessment is a disciplined evaluation of an organizational unit’s processes against a process assessment model.

The Automotive SPICE process assessment model (PAM) is intended for use when performing conformant assessments of process capability in the development of embedded automotive systems. It was developed in accordance with the requirements of ISO/IEC 33004.

Automotive SPICE has its own process reference model (PRM), which was developed based on the Automotive SPICE process reference model 4.5. It was further developed and tailored considering the specific needs of the automotive industry. If processes beyond the scope of Automotive SPICE are needed, appropriate processes from other process reference models such as ISO/IEC 12207 or ISO/IEC 15288 may be added based on the business needs of the organization.

The PRM is incorporated in this document and is used in conjunction with the Automotive SPICE process assessment model when performing an assessment.

This Automotive SPICE process assessment model contains a set of indicators to be considered when interpreting the intent of the Automotive SPICE process reference model. These indicators may also be used when implementing a process improvement program subsequent to an assessment.

1.2. Terminology

Automotive SPICE uses the following order of precedence for terminology:

ISO/IEC 33001 for assessment related terminology

ISO/IEC/IEEE 24765 and ISO/IEC/IEEE 29119 terminology (as contained in Annex C)

Terms introduced by Automotive SPICE (as contained in Annex C)

1.3. Abbreviations

| Abbreviation | Meaning |
|---|---|
| AS | Automotive SPICE |
| BP | Base Practice |
| CAN | Controller Area Network |
| CASE | Computer-Aided Software Engineering |
| CCB | Change Control Board |
| CFP | Call For Proposals |
| CPU | Central Processing Unit |
| ECU | Electronic Control Unit |
| EEPROM | Electrically Erasable Programmable Read-Only Memory |
| GP | Generic Practice |
| GR | Generic Resource |
| IEC | International Electrotechnical Commission |
| IEEE | Institute of Electrical and Electronics Engineers |
| I/O | Input / Output |
| ISO | International Organization for Standardization |
| ITT | Invitation To Tender |
| LIN | Local Interconnect Network |
| MISRA | Motor Industry Software Reliability Association |
| MOST | Media Oriented Systems Transport |
| PA | Process Attribute |
| PAM | Process Assessment Model |
| PRM | Process Reference Model |
| PWM | Pulse Width Modulation |
| RAM | Random Access Memory |
| ROM | Read Only Memory |
| SPICE | Software Process Improvement and Capability dEtermination |
| SUG | SPICE User Group |
| USB | Universal Serial Bus |
| WP | Work Product |
| WPC | Work Product Characteristic |

Table 1 — Abbreviation List

2. Statement of compliance

The Automotive SPICE process assessment model and process reference model are conformant with ISO/IEC 33004 and can be used as the basis for conducting an assessment of process capability.

ISO/IEC 33020 is used as an ISO/IEC 33003 compliant Measurement Framework.

A statement of compliance of the process assessment model and process reference model with the requirements of ISO/IEC 33004 is provided in Annex A.

3. Process capability determination

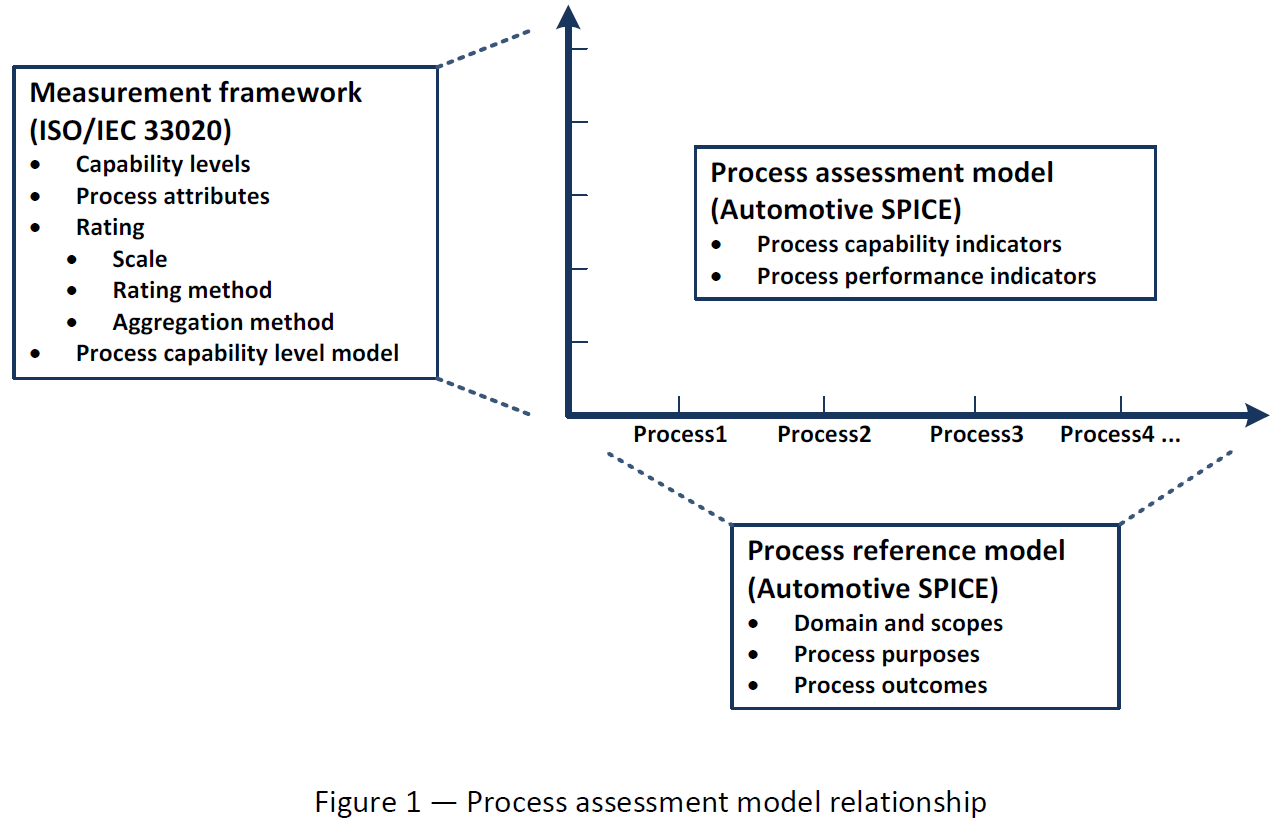

The concept of process capability determination by using a process assessment model is based on a two-dimensional framework. The first dimension is provided by processes defined in a process reference model (process dimension). The second dimension consists of capability levels that are further subdivided into process attributes (capability dimension). The process attributes provide the measurable characteristics of process capability.

The process assessment model selects processes from a process reference model and supplements them with indicators. These indicators support the collection of objective evidence, which enables an assessor to assign ratings for processes according to the capability dimension.

The relationship is shown in Figure 1:

Figure 1 — Process assessment model relationship

3.1. Process reference model

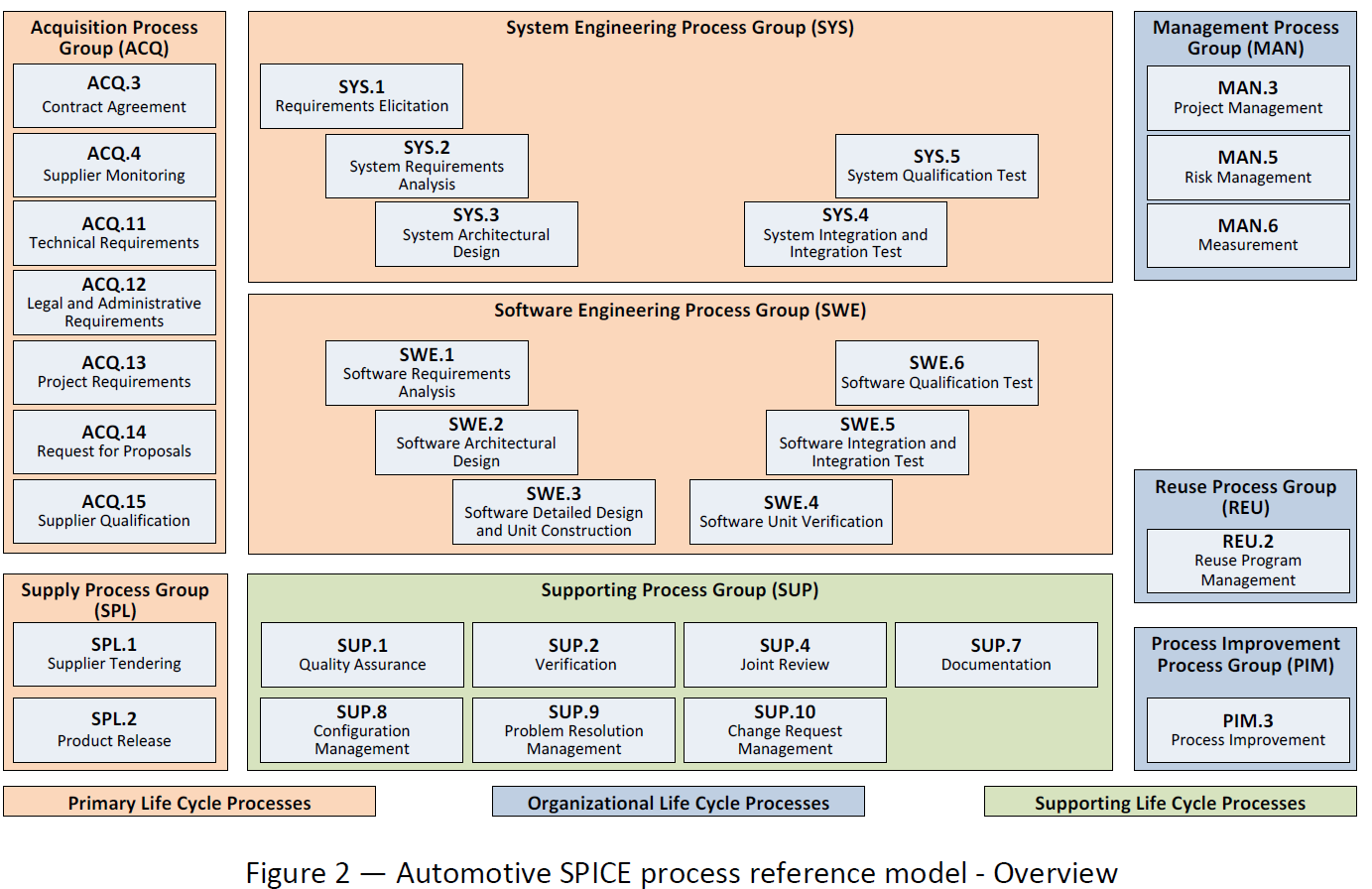

Processes are grouped by process category and at a second level into process groups according to the type of activity they address.

There are 3 process categories: Primary life cycle processes, Organizational life cycle processes and Supporting life cycle processes.

Each process is described in terms of a purpose statement. The purpose statement contains the unique functional objectives of the process when performed in a particular environment. Each purpose statement is associated with a list of specific outcomes, which are the expected positive results of the process performance.

For the process dimension, the Automotive SPICE process reference model provides the set of processes shown in Figure 2.

Figure 2 — Automotive SPICE process reference model - Overview

3.1.1. Primary life cycle processes category

The primary life cycle processes category consists of processes that may be used by the customer when acquiring products from a supplier, and by the supplier when responding and delivering products to the customer including the engineering processes needed for specification, design, development, integration and testing.

The primary life cycle processes category consists of the following groups:

the Acquisition process group;

the Supply process group;

the System engineering process group;

the Software engineering process group.

The Acquisition process group (ACQ) consists of processes that are performed by the customer, or by the supplier when acting as a customer for its own suppliers, in order to acquire a product and/or service.

| Process ID | Process name |
|---|---|
| ACQ.3 | Contract Agreement |
| ACQ.4 | Supplier Monitoring |
| ACQ.11 | Technical Requirements |
| ACQ.12 | Legal and Administrative Requirements |
| ACQ.13 | Project Requirements |
| ACQ.14 | Request for Proposals |
| ACQ.15 | Supplier Qualification |

Table 2 — Primary life cycle processes – ACQ process group

The Supply process group (SPL) consists of processes performed by the supplier in order to supply a product and/or a service.

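| Process ID | Process name |
|---|---|
| SPL.1 | Supplier Tendering |
| SPL.2 | Product Release |

Table 3 — Primary life cycle processes – SPL process group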

The System Engineering process group (SYS) consists of processes addressing the elicitation and management of customer and internal requirements, the definition of the system architecture and the integration and testing on the system level.

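| Process ID | Process name |
|---|---|
| SYS.1 | Requirements Elicitation |
| SYS.2 | System Requirements Analysis |
| SYS.3 | System Architectural Design |
| SYS.4 | System Integration and Integration Test |
| SYS.5 | System Qualification Test |

Table 4 — Primary life cycle processes – SYS process group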

The Software Engineering process group (SWE) consists of processes addressing the management of software requirements derived from the system requirements, the development of the corresponding software architecture and design as well as the implementation, integration and testing of the software.

| Process ID | Process name |
|---|---|
| SWE.1 | Software Requirements Analysis |
| SWE.2 | Software Architectural Design |
| SWE.3 | Software Detailed Design and Unit Construction |
| SWE.4 | Software Unit Verification |
| SWE.5 | Software Integration and Integration Test |
| SWE.6 | Software Qualification Test |

Table 5 — Primary life cycle processes – SWE process group

3.1.2. Supporting life cycle processes category

The supporting life cycle processes category consists of processes that may be employed by any of the other processes at various points in the life cycle.

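| Process ID | Process name |
|---|---|
| SUP.1 | Quality Assurance |
| SUP.2 | Verification |
| SUP.4 | Joint Review |
| SUP.7 | Documentation |
| SUP.8 | Configuration Management |
| SUP.9 | Problem Resolution Management |
| SUP.10 | Change Request Management |

Table 6 — Supporting life cycle processes - SUP process group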

3.1.3. Organizational life cycle processes category

The organizational life cycle processes category consists of processes that develop process, product, and resource assets which, when used by projects in the organization, will help the organization achieve its business goals.

The organizational life cycle processes category consists of the following groups:

the Management process group;

the Process Improvement process group;

the Reuse process group.

The Management process group (MAN) consists of processes that may be used by anyone who manages any type of project or process within the life cycle.

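| Process ID | Process name |
|---|---|
| MAN.3 | Project Management |
| MAN.5 | Risk Management |
| MAN.6 | Measurement |

Table 7 — Organizational life cycle processes - MAN process group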

The Process Improvement process group (PIM) covers one process that contains practices to improve the processes performed in the organizational unit.

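| Process ID | Process name |
|---|---|
| PIM.3 | Process Improvement |

Table 8 — Organizational life cycle processes - PIM process group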

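The Reuse process group (REU) covers one process to systematically exploit reuse opportunities in the organization’s reuse programs.

| Process ID | Process name |
|---|---|
| REU.2 | Reuse Program Management |

Table 9 — Organizational life cycle processes - REU process group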
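The process dimension described in this section (categories, process groups, processes) can be pictured as a nested lookup structure. The following is a minimal Python sketch, not part of the model itself; the names `PRM` and `locate` are hypothetical and chosen only for illustration.

```python
# Process reference model as nested mappings:
# category -> process group -> {process ID: process name}.
PRM = {
    "Primary life cycle processes": {
        "ACQ": {
            "ACQ.3": "Contract Agreement",
            "ACQ.4": "Supplier Monitoring",
            "ACQ.11": "Technical Requirements",
            "ACQ.12": "Legal and Administrative Requirements",
            "ACQ.13": "Project Requirements",
            "ACQ.14": "Request for Proposals",
            "ACQ.15": "Supplier Qualification",
        },
        "SPL": {
            "SPL.1": "Supplier Tendering",
            "SPL.2": "Product Release",
        },
        "SYS": {
            "SYS.1": "Requirements Elicitation",
            "SYS.2": "System Requirements Analysis",
            "SYS.3": "System Architectural Design",
            "SYS.4": "System Integration and Integration Test",
            "SYS.5": "System Qualification Test",
        },
        "SWE": {
            "SWE.1": "Software Requirements Analysis",
            "SWE.2": "Software Architectural Design",
            "SWE.3": "Software Detailed Design and Unit Construction",
            "SWE.4": "Software Unit Verification",
            "SWE.5": "Software Integration and Integration Test",
            "SWE.6": "Software Qualification Test",
        },
    },
    "Supporting life cycle processes": {
        "SUP": {
            "SUP.1": "Quality Assurance",
            "SUP.2": "Verification",
            "SUP.4": "Joint Review",
            "SUP.7": "Documentation",
            "SUP.8": "Configuration Management",
            "SUP.9": "Problem Resolution Management",
            "SUP.10": "Change Request Management",
        },
    },
    "Organizational life cycle processes": {
        "MAN": {
            "MAN.3": "Project Management",
            "MAN.5": "Risk Management",
            "MAN.6": "Measurement",
        },
        "PIM": {"PIM.3": "Process Improvement"},
        "REU": {"REU.2": "Reuse Program Management"},
    },
}


def locate(process_id):
    """Return (category, process group, process name) for a process ID."""
    for category, groups in PRM.items():
        for group, processes in groups.items():
            if process_id in processes:
                return category, group, processes[process_id]
    raise KeyError(process_id)
```

For example, `locate("SUP.8")` returns the supporting category, the group `SUP`, and the name "Configuration Management". A process ID outside the model raises `KeyError`.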

3.2. Measurement framework

The measurement framework provides the necessary requirements and rules for the capability dimension. It defines a schema which enables an assessor to determine the capability level of a given process. These capability levels are defined as part of the measurement framework.

To enable the rating, the measurement framework provides process attributes defining a measurable property of process capability. Each process attribute is assigned to a specific capability level. The extent of achievement of a certain process attribute is represented by means of a rating based on a defined rating scale. The rules from which an assessor can derive a final capability level for a given process are represented by a process capability level model.

Automotive SPICE 3.1 uses the measurement framework defined in ISO/IEC 33020:2015.

NOTE: Text incorporated from ISO/IEC 33020 within this chapter is written in italic font and marked with a left side bar.

3.2.1. Process capability levels and process attributes

The process capability levels and process attributes are identical to those defined in ISO/IEC 33020 clause 5.2. The detailed descriptions of the capability levels and the corresponding process attributes can be found in chapter 5.

Process attributes are features of a process that can be evaluated on a scale of achievement, providing a measure of the capability of the process. They are applicable to all processes.

A capability level is a set of process attribute(s) that work together to provide a major enhancement in the capability to perform a process. Each attribute addresses a specific aspect of the capability level. The levels constitute a rational way of progressing through improvement of the capability of any process.

According to ISO/IEC 33020 there are six capability levels, incorporating nine process attributes:

| Capability level | Description |
|---|---|
| Level 0: Incomplete process | The process is not implemented, or fails to achieve its process purpose. |
| Level 1: Performed process | The implemented process achieves its process purpose. |
| Level 2: Managed process | The previously described performed process is now implemented in a managed fashion (planned, monitored and adjusted) and its work products are appropriately established, controlled and maintained. |
| Level 3: Established process | The previously described managed process is now implemented using a defined process that is capable of achieving its process outcomes. |
| Level 4: Predictable process | The previously described established process now operates predictively within defined limits to achieve its process outcomes. Quantitative management needs are identified, measurement data are collected and analyzed to identify assignable causes of variation. Corrective action is taken to address assignable causes of variation. |
| Level 5: Innovating process | The previously described predictable process is now continually improved to respond to organizational change. |

Table 10 — Process capability levels according to ISO/IEC 33020

Within this process assessment model, the determination of capability is based upon the nine process attributes (PA) defined in ISO/IEC 33020 and listed in Table 11.

| Attribute ID | Process Attributes |
|---|---|
| Level 0: Incomplete process | |
| Level 1: Performed process | |
| PA 1.1 | Process performance process attribute |
| Level 2: Managed process | |
| PA 2.1 | Performance management process attribute |
| PA 2.2 | Work product management process attribute |
| Level 3: Established process | |
| PA 3.1 | Process definition process attribute |
| PA 3.2 | Process deployment process attribute |
| Level 4: Predictable process | |
| PA 4.1 | Quantitative analysis process attribute |
| PA 4.2 | Quantitative control process attribute |
| Level 5: Innovating process | |
| PA 5.1 | Process innovation process attribute |
| PA 5.2 | Process innovation implementation process attribute |

Table 11 — Process attributes according to ISO/IEC 33020

3.2.2. Process attribute rating

To support the rating of process attributes, the ISO/IEC 33020 measurement framework provides a defined rating scale with an option for refinement, different rating methods and different aggregation methods depending on the class of the assessment (e.g. required for organizational maturity assessments).

Rating scale

Within this process measurement framework, a process attribute is a measurable property of process capability. A process attribute rating is a judgement of the degree of achievement of the process attribute for the assessed process.

The rating scale is defined by ISO/IEC 33020 as shown in Table 12.

| Rating | Name | Description |
|---|---|---|
| N | Not achieved | There is little or no evidence of achievement of the defined process attribute in the assessed process. |
| P | Partially achieved | There is some evidence of an approach to, and some achievement of, the defined process attribute in the assessed process. Some aspects of achievement of the process attribute may be unpredictable. |
| L | Largely achieved | There is evidence of a systematic approach to, and significant achievement of, the defined process attribute in the assessed process. Some weaknesses related to this process attribute may exist in the assessed process. |
| F | Fully achieved | There is evidence of a complete and systematic approach to, and full achievement of, the defined process attribute in the assessed process. No significant weaknesses related to this process attribute exist in the assessed process. |

Table 12 — Rating scale according to ISO/IEC 33020

The ordinal scale defined above shall be understood in terms of percentage achievement of a process attribute.

The corresponding percentages shall be:

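| Rating | Name | Achievement |
|---|---|---|
| N | Not achieved | 0 to ≤ 15% achievement |
| P | Partially achieved | > 15% to ≤ 50% achievement |
| L | Largely achieved | > 50% to ≤ 85% achievement |
| F | Fully achieved | > 85% to ≤ 100% achievement |

Table 13 — Rating scale percentage values according to ISO/IEC 33020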
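The percentage bands above translate directly into a small lookup. The following Python sketch is illustrative only; the function name `rate_achievement` is hypothetical, and how an assessor arrives at a percentage figure is outside its scope.

```python
def rate_achievement(percent):
    """Map a percentage achievement (0..100) to the N/P/L/F ordinal scale."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    if percent <= 15:
        return "N"  # Not achieved: 0 to <= 15%
    if percent <= 50:
        return "P"  # Partially achieved: > 15% to <= 50%
    if percent <= 85:
        return "L"  # Largely achieved: > 50% to <= 85%
    return "F"      # Fully achieved: > 85% to <= 100%
```

Note that each upper bound is inclusive, so exactly 15% is still rated N and exactly 85% is still rated L.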

The ordinal scale may be further refined for the measures P and L as defined below.

| Rating | Name | Description |
|---|---|---|
| P- | Partially achieved | There is some evidence of an approach to, and some achievement of, the defined process attribute in the assessed process. Many aspects of achievement of the process attribute may be unpredictable. |
| P+ | Partially achieved | There is some evidence of an approach to, and some achievement of, the defined process attribute in the assessed process. Some aspects of achievement of the process attribute may be unpredictable. |
| L- | Largely achieved | There is evidence of a systematic approach to, and significant achievement of, the defined process attribute in the assessed process. Many weaknesses related to this process attribute may exist in the assessed process. |
| L+ | Largely achieved | There is evidence of a systematic approach to, and significant achievement of, the defined process attribute in the assessed process. Some weaknesses related to this process attribute may exist in the assessed process. |

Table 14 — Refinement of rating scale according to ISO/IEC 33020

The corresponding percentages shall be:

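| Rating | Name | Achievement |
|---|---|---|
| P- | Partially achieved - | > 15% to ≤ 32.5% achievement |
| P+ | Partially achieved + | > 32.5% to ≤ 50% achievement |
| L- | Largely achieved - | > 50% to ≤ 67.5% achievement |
| L+ | Largely achieved + | > 67.5% to ≤ 85% achievement |

Table 15 — Refined rating scale percentage values according to ISO/IEC 33020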
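The refined scale splits the P and L bands at their midpoints while leaving N and F unchanged. A minimal Python sketch of that mapping follows; the name `rate_achievement_refined` is hypothetical.

```python
# Inclusive upper bound of each band, in ascending order.
REFINED_BANDS = [
    (15.0, "N"),
    (32.5, "P-"),
    (50.0, "P+"),
    (67.5, "L-"),
    (85.0, "L+"),
    (100.0, "F"),
]


def rate_achievement_refined(percent):
    """Map a percentage achievement (0..100) to N, P-, P+, L-, L+ or F."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    for upper_bound, rating in REFINED_BANDS:
        if percent <= upper_bound:
            return rating
    raise AssertionError("unreachable: percent was validated above")
```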

Rating and aggregation method

ISO/IEC 33020 provides the following definitions:

A process outcome is the observable result of successful achievement of the process purpose.

A process attribute outcome is the observable result of achievement of a specified process attribute.

Process outcomes and process attribute outcomes may be characterised as an intermediate step to providing a process attribute rating.

When performing rating, the rating method employed shall be specified relevant to the class of assessment. The following rating methods are defined.

The use of rating method may vary according to the class, scope and context of an assessment. The lead assessor shall decide which (if any) rating method to use. The selected rating method(s) shall be specified in the assessment input and referenced in the assessment report.

ISO/IEC 33020 provides the following 3 rating methods:

Rating method R1

The approach to process attribute rating shall satisfy the following conditions:

Each process outcome of each process within the scope of the assessment shall be characterized for each process instance, based on validated data;

Each process attribute outcome of each process attribute for each process within the scope of the assessment shall be characterised for each process instance, based on validated data;

Process outcome characterisations for all assessed process instances shall be aggregated to provide a process performance attribute achievement rating;

Process attribute outcome characterisations for all assessed process instances shall be aggregated to provide a process attribute achievement rating.

Rating method R2

The approach to process attribute rating shall satisfy the following conditions:

Each process attribute for each process within the scope of the assessment shall be characterized for each process instance, based on validated data;

Process attribute characterisations for all assessed process instances shall be aggregated to provide a process attribute achievement rating.

Rating method R3

Process attribute rating across assessed process instances shall be made without aggregation.

In principle, the three rating methods defined in ISO/IEC 33020 depend on

whether the rating is made only on process attribute level (rating methods R2 and R3) or, in more detail, on both process attribute and process attribute outcome level (rating method R1); and

the type of aggregation of ratings across the assessed process instances for each process.

If a rating is performed for both process attributes and process attribute outcomes (Rating method 1), the result will be a process performance attribute outcome rating on level 1 and a process attribute achievement rating on higher levels.

Depending on the class, scope and context of the assessment an aggregation within one process (one-dimensional, vertical aggregation), across multiple process instances (one-dimensional, horizontal aggregation) or both (two-dimensional, matrix aggregation) is performed.

ISO/IEC 33020 provides the following examples:

When performing an assessment, ratings may be summarised across one or two dimensions.

For example, when rating a

process attribute for a given process, one may aggregate ratings of the associated process (attribute) outcomes – such an aggregation will be performed as a vertical aggregation (one dimension).

process (attribute) outcome for a given process attribute across multiple process instances, one may aggregate the ratings of the associated process instances for the given process (attribute) outcome – such an aggregation will be performed as a horizontal aggregation (one dimension).

process attribute for a given process, one may aggregate the ratings of all the process (attribute) outcomes for all the process instances – such an aggregation will be performed as a matrix aggregation across the full scope of ratings (two dimensions).

The standard defines different methods for aggregation. Further information can be taken from ISO/IEC 33020.
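To make the three aggregation directions concrete, the sketch below arranges ratings as a matrix with one row per process instance and one column per process (attribute) outcome. The aggregation rule used here, an arithmetic mean over hypothetical numeric values N=0, P=1, L=2, F=3 rounded half up, is an assumption of this sketch and not a statement of what ISO/IEC 33020 prescribes; all function names are hypothetical as well.

```python
from statistics import mean

# Hypothetical numeric values for the ordinal ratings (assumption of this sketch).
VALUE = {"N": 0, "P": 1, "L": 2, "F": 3}
RATING = {value: rating for rating, value in VALUE.items()}


def aggregate(ratings):
    """Aggregate ratings by arithmetic mean, rounded half up to a rating."""
    average = mean([VALUE[r] for r in ratings])
    return RATING[int(average + 0.5)]


def vertical(matrix, instance):
    """One instance, all outcomes (one dimension)."""
    return aggregate(matrix[instance])


def horizontal(matrix, outcome):
    """One outcome, all instances (one dimension)."""
    return aggregate([row[outcome] for row in matrix])


def matrix_aggregation(matrix):
    """All outcomes of all instances (two dimensions)."""
    return aggregate([r for row in matrix for r in row])


# Rows: process instances. Columns: process (attribute) outcomes.
ratings = [
    ["F", "L", "F"],
    ["L", "L", "P"],
]
```

With this example data, `vertical(ratings, 0)` yields F, `horizontal(ratings, 2)` yields L, and `matrix_aggregation(ratings)` yields L. A different aggregation rule would only require replacing `aggregate`.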

3.2.3. Process capability level model

The process capability level achieved by a process shall be derived from the process attribute ratings for that process according to the process capability level model defined in Table 16.

The process capability level model defines the rules by which the achievement of each level depends on the rating of the process attributes for the assessed level and all lower levels.

As a general rule, the achievement of a given level requires that the process attributes of that level are at least largely achieved and that all process attributes of the lower levels are fully achieved.

| Scale | Process attribute | Rating |
|---|---|---|
| Level 1 | PA 1.1: Process Performance | Largely |
| Level 2 | PA 1.1: Process Performance | Fully |
| | PA 2.1: Performance Management | Largely |
| | PA 2.2: Work Product Management | Largely |
| Level 3 | PA 1.1: Process Performance | Fully |
| | PA 2.1: Performance Management | Fully |
| | PA 2.2: Work Product Management | Fully |
| | PA 3.1: Process Definition | Largely |
| | PA 3.2: Process Deployment | Largely |
| Level 4 | PA 1.1: Process Performance | Fully |
| | PA 2.1: Performance Management | Fully |
| | PA 2.2: Work Product Management | Fully |
| | PA 3.1: Process Definition | Fully |
| | PA 3.2: Process Deployment | Fully |
| | PA 4.1: Quantitative Analysis | Largely |
| | PA 4.2: Quantitative Control | Largely |
| Level 5 | PA 1.1: Process Performance | Fully |
| | PA 2.1: Performance Management | Fully |
| | PA 2.2: Work Product Management | Fully |
| | PA 3.1: Process Definition | Fully |
| | PA 3.2: Process Deployment | Fully |
| | PA 4.1: Quantitative Analysis | Fully |
| | PA 4.2: Quantitative Control | Fully |
| | PA 5.1: Process Innovation | Largely |
| | PA 5.2: Process Innovation Implementation | Largely |

Table 16 — Process capability level model according to ISO/IEC 33020
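The rule expressed by Table 16 can be sketched as a short function that walks up the levels until a condition fails. This is an illustrative Python sketch under two assumptions that are not stated in the model: a missing rating is treated as N, and refined ratings such as L+ or L- count as L. The names `PA_BY_LEVEL` and `capability_level` are hypothetical.

```python
from typing import Mapping

# Process attributes introduced at each capability level (see Table 11).
PA_BY_LEVEL = {
    1: ["PA 1.1"],
    2: ["PA 2.1", "PA 2.2"],
    3: ["PA 3.1", "PA 3.2"],
    4: ["PA 4.1", "PA 4.2"],
    5: ["PA 5.1", "PA 5.2"],
}


def capability_level(ratings: Mapping[str, str]) -> int:
    """Derive the capability level (0..5) from process attribute ratings."""

    def rating_of(pa):
        # Assumption: unrated attributes count as N; "L+"/"L-" count as "L".
        return ratings.get(pa, "N").rstrip("+-")

    achieved = 0
    for level in range(1, 6):
        lower = [pa for lvl in range(1, level) for pa in PA_BY_LEVEL[lvl]]
        # Attributes of the level itself must be largely or fully achieved.
        current_ok = all(rating_of(pa) in ("L", "F") for pa in PA_BY_LEVEL[level])
        # Attributes of all lower levels must be fully achieved.
        lower_ok = all(rating_of(pa) == "F" for pa in lower)
        if not (current_ok and lower_ok):
            break
        achieved = level
    return achieved
```

For example, `capability_level({"PA 1.1": "F", "PA 2.1": "L", "PA 2.2": "F"})` gives 2, whereas the same level 2 ratings with PA 1.1 rated only L give 1, because level 2 requires PA 1.1 to be fully achieved.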

3.3. Process assessment model

The process assessment model offers indicators in order to identify whether the process outcomes and the process attribute outcomes (achievements) are present or absent in the instantiated processes of projects and organizational units. These indicators provide guidance for assessors in accumulating the necessary objective evidence to support judgments of capability. They are not intended to be regarded as a mandatory set of checklists to be followed.

In order to judge the presence or absence of process outcomes and process achievements an assessment obtains objective evidence. All such evidence comes from the examination of work products and repository content of the assessed processes, and from testimony provided by the performers and managers of the assessed processes. This evidence is mapped to the PAM indicators to allow establishing the correspondence to the relevant process outcomes and process attribute achievements.

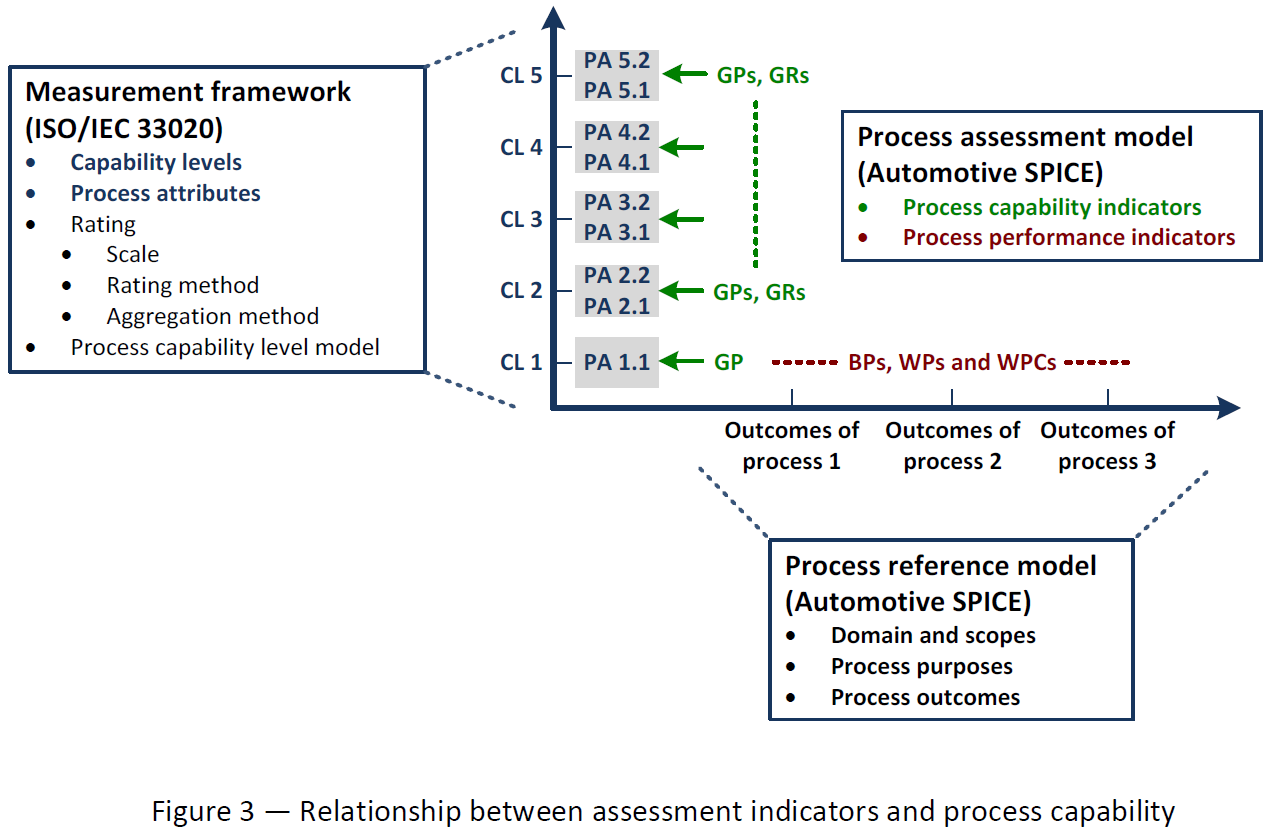

There are two types of indicators:

Process performance indicators, which apply exclusively to capability level 1. They provide an indication of the extent of fulfillment of the process outcomes.

Process capability indicators, which apply to capability levels 2 to 5. They provide an indication of the extent of fulfillment of the process attribute achievements.

Assessment indicators are used to confirm that certain practices were performed, as shown by evidence collected during an assessment. All such evidence comes either from the examination of work products of the processes assessed, or from statements made by the performers and managers of the processes. The existence of base practices and work products provides evidence of the performance of the processes associated with them. Similarly, the existence of process capability indicators provides evidence of process capability.

The evidence obtained should be recorded in a form that clearly relates to an associated indicator, in order that support for the assessor’s judgment can be confirmed or verified as required by ISO/IEC 33002.
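One way to record evidence so that it clearly relates to an indicator is to store the indicator reference with every evidence item and group by it. The Python sketch below is illustrative only: the `Evidence` fields, the function name, and the indicator IDs in the usage note are hypothetical, and neither this model nor ISO/IEC 33002 prescribes a recording format here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Evidence:
    indicator: str    # ID of the associated indicator (hypothetical format)
    source: str       # "work product" or "testimony"
    description: str  # what was examined or stated


def group_by_indicator(records):
    """Group evidence items by the indicator they support."""
    grouped = defaultdict(list)
    for record in records:
        grouped[record.indicator].append(record)
    return dict(grouped)
```

A call such as `group_by_indicator([Evidence("SWE.1.BP1", "work product", "software requirements specification"), Evidence("SWE.1.BP1", "testimony", "interview with requirements engineer")])` returns a single entry with both items, which lets a reviewer confirm the support behind a judgment per indicator.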

3.3.1. Process performance indicators

Types of process performance indicators are:

Base practices (BP)

Work products (WP).

Both BPs and WPs relate to one or more process outcomes. Consequently, BPs and WPs are always process-specific and not generic. BPs represent activity-oriented indicators. WPs represent result-oriented indicators. Both BPs and WPs are used for judging the objective evidence that an assessor collects and accumulates in the performance of an assessment. In that respect BPs and WPs are alternative indicator sets the assessor can use.

The PAM offers a set of work product characteristics (WPC, see Annex B) for each WP. These are meant to offer a good practice and state-of-the-art knowledge guide for the assessor. Therefore, WP and WPC are supposed to be a quickly accessible information source during an assessment. In that respect WPs and WPCs represent an example structure only. They are neither a “strict must” nor are they normative for organizations. Instead, the actual structure, form and content of concrete work products and documents for the implemented processes must be defined by the project and organization, respectively. The project and/or organization ensures that the work products are appropriate for the intended purpose and needs, and in relation to the development goals.

3.3.2. Process capability indicators

Types of process capability indicators are:

Generic Practice (GP)

Generic Resource (GR)

Both GPs and GRs relate to one or more PA Achievements. In contrast to process performance indicators, however, they are of generic type, i.e. they apply to any process.

The difference between GP and GR is that the former represent activity-oriented indicators while the latter represent infrastructure-oriented indicators for judging objective evidence. An assessor has to collect and accumulate evidence supporting process capability indicators during an assessment. In that respect GPs and GRs are alternative indicator sets the assessor can use.

Although Level 1 capability of a process is characterized only by the measure of the extent to which the process outcomes are achieved, the measurement framework (see chapter 3.2) requires each level to reveal a process attribute and, thus, requires the PAM to introduce at least one process capability indicator. Therefore, the only process performance attribute for Capability Level 1 (PA.1.1) has a single generic practice (GP 1.1.1) pointing as an editorial reference to the respective process performance indicators (see Figure 3).

Figure 3: Relationship between assessment indicators and process capability

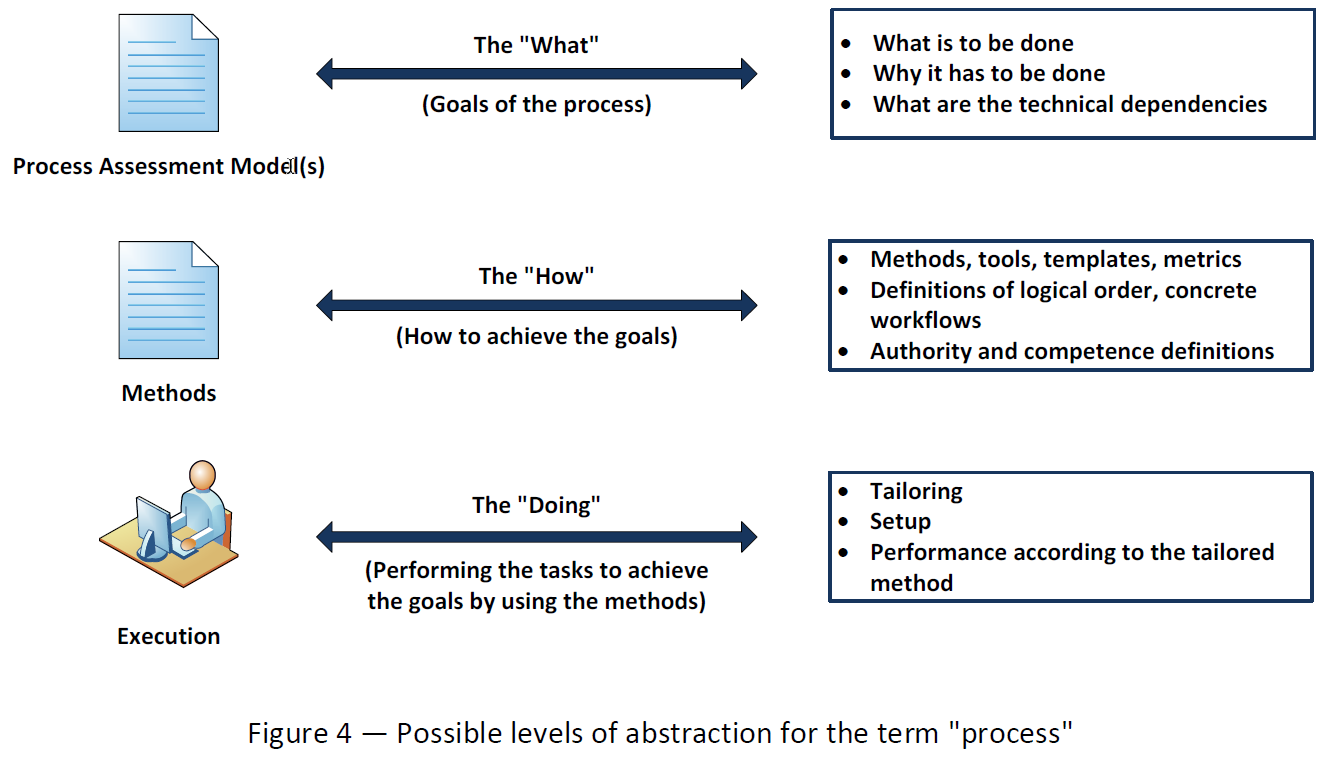
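The relationship between capability levels and indicator types described in this chapter can be summarized in a small lookup. This is only a sketch: the function name and the use of the abbreviations as plain strings are assumptions made for illustration.

```python
def indicator_types(capability_level):
    """Return the indicator types an assessor uses at a capability level.

    Level 1 uses the process performance indicators (BP, WP); GP 1.1.1 of
    PA.1.1 merely points to them as an editorial reference.
    Levels 2 to 5 use the process capability indicators (GP, GR).
    """
    if capability_level == 1:
        return ("BP", "WP")
    if 2 <= capability_level <= 5:
        return ("GP", "GR")
    # Levels outside 1 to 5 are not covered by this sketch.
    raise ValueError("capability level must be between 1 and 5")


level_1 = indicator_types(1)
level_3 = indicator_types(3)
```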

3.3.3. Understanding the level of abstraction of a PAM

The term “process” can be understood at three levels of abstraction. Note that these levels of abstraction are not meant to define a strict black-or-white split, nor is it the aim to provide a scientific classification schema – the message here is to understand that, in practice, when it comes to the term “process” there are different abstraction levels, and that a PAM resides at the highest.

Figure 4: Possible levels of abstraction for the term “process”

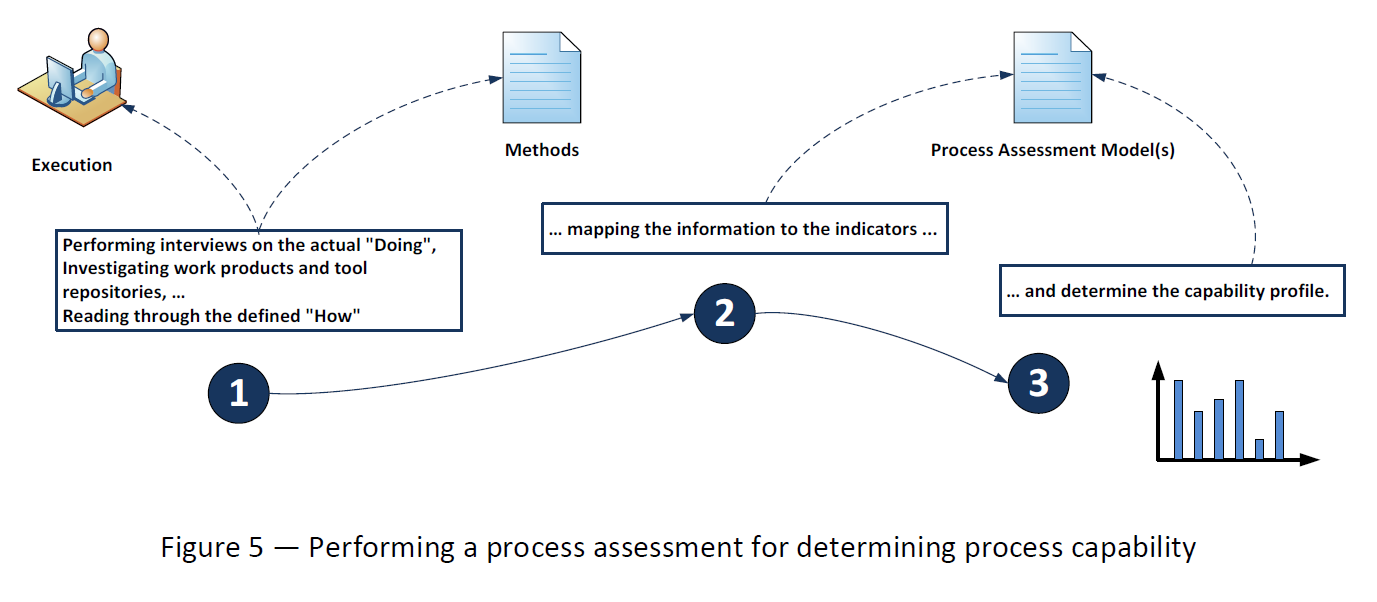

Capturing experience acquired during product development (i.e. at the DOING level) in order to share this experience with others means creating a HOW level. However, a HOW is always specific to a particular context such as a company, an organizational unit, or a product line. For example, the HOW of a project, organizational unit, or company A is potentially not applicable as is to a project, organizational unit, or company B. However, both might be expected to adhere to the principles represented by PAM indicators for process outcomes and process attribute achievements. These indicators are at the WHAT level, while deciding on solutions for concrete templates, proceedings, tooling etc. is left to the HOW level.

Figure 5: Performing a process assessment for determining process capability

4. Process reference model and performance indicators (Level 1)

The processes in the process dimension can be drawn from the Automotive SPICE process reference model, which is incorporated in the tables below indicated by a red bar at the left side.

Each table related to one process in the process dimension contains the process reference model (indicated by a red bar) and the process performance indicators necessary to define the process assessment model. The process performance indicators consist of base practices (indicated by a green bar) and output work products (indicated by a blue bar).

Process reference model

Process ID, process name, process purpose, process outcomes: The individual processes are described in terms of process name, process purpose, and process outcomes to define the Automotive SPICE process reference model. Additionally, a process identifier is provided.

Process performance indicators

Base practices: A set of base practices for the process providing a definition of the tasks and activities needed to accomplish the process purpose and fulfill the process outcomes.

Output work products: A number of output work products associated with each process.

4.1. Acquisition process group (ACQ)

4.1.1. ACQ.3 Contract Agreement

Process ID: ACQ.3

Process name: Contract Agreement

Process purpose: The purpose of the Contract Agreement Process is to negotiate and approve a contract/agreement with the supplier.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: Relevant aspects of the procurement may include

Output work products

|

4.1.2. ACQ.4 Supplier Monitoring

Process ID: ACQ.4

Process name: Supplier Monitoring

Process purpose: The purpose of the Supplier Monitoring Process is to track and assess the performance of the supplier against agreed requirements.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: Joint processes and interfaces usually include project management, requirements management, change management, configuration management, problem resolution, quality assurance and customer acceptance.

NOTE 2: Joint activities to be performed should be mutually agreed between the customer and the supplier.

NOTE 3: The term customer in this process refers to the assessed party. The term supplier refers to the supplier of the assessed party.

NOTE 4: Agreed information should include all relevant work products.

Output work products

|

4.1.3. ACQ.11 Technical Requirements

Process ID: ACQ.11

Process name: Technical Requirements

Process purpose: The purpose of the Technical Requirements Process is to establish the technical requirements of the acquisition. This involves the elicitation of functional and non-functional requirements that consider the deployment life cycle of the products so as to establish a technical requirement baseline.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: This may include

NOTE 2: To ensure a better understanding:

NOTE 3: This may include analyzing, structuring and prioritizing technical requirements according to their importance to the business.

Output work products

|

4.1.4. ACQ.12 Legal and Administrative Requirements

Process ID: ACQ.12

Process name: Legal and Administrative Requirements

Process purpose: The purpose of the Legal and Administrative Requirements Process is to define the awarding aspects (expectations, liabilities, legal and other issues) that comply with national and international laws of contract.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: This may include

NOTE 2: This may include planning of the control of contract changes.

Output work products

|

4.1.5. ACQ.13 Project Requirements

Process ID: ACQ.13

Process name: Project Requirements

Process purpose: The purpose of the Project Requirements Process is to specify the requirements to ensure the acquisition project is performed with adequate planning, staffing, directing, organizing and control over project tasks and activities.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: Requirements for the organizational aspects refer to the organization of the people on the project e.g. who is responsible etc. at different levels.

NOTE 2: Requirements for the management, controlling and reporting aspects of the project may be

NOTE 3: Techniques for supporting the exchange of information may include electronic solutions, face-to-face interactions and decisions about the frequency.

NOTE 4: This may include for example the decision to link the major proportion of the supplier’s payment to successful completion of the acceptance test, the definition of supplier performance criteria and ways to measure, test and link them to the payment schedule or the decision that payments be made on agreed results.

NOTE 5: Potential risk areas are for example stakeholder (customer, user, and sponsor), product (uncertainty, complexity), processes (acquisition, management, support, and organization), resources (human, financial, time, infrastructure), context (corporate context, project context, regulatory context, location) or supplier (process maturity, resources, experience).

NOTE 6: This may include for example who has the lead on which type of interaction, who maintains an open-issue-list, who are the contact persons for management, technical and contractual issues, the frequency and type of interaction, to whom the relevant information is distributed.

NOTE 7: This may include unrestricted right of product use or delivery of source code trial installation for “sale or return”.

NOTE 8: This may include for example training requirements, the decision if support and maintenance should be conducted in-house or by a third party or the establishment of service level agreements.

Output work products

|

4.1.6. ACQ.14 Request for Proposals

Process ID: ACQ.14

Process name: Request for Proposals

Process purpose: The purpose of the Request for Proposals Process is to prepare and issue the necessary acquisition requirements. The documentation will include, but not be limited to, the contract, project, finance and technical requirements to be provided for use in the Call For Proposals (CFP) / Invitation To Tender (ITT).

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: Examples are:

NOTE 2: The goal is to provide the supplier with an in-depth understanding of your business to enable him to offer the specified solution.

NOTE 3: The overall purpose of this is to communicate the documented business requirements of the acquisition to the suppliers.

Output work products

|

4.1.7. ACQ.15 Supplier Qualification

Process ID: ACQ.15

Process name: Supplier Qualification

Process purpose: The purpose of the Supplier Qualification Process is to evaluate and determine if the potential supplier(s) have the required qualification for entering the proposal/tender evaluation process. In this process, the technical background, quality system, servicing, user support capabilities etc. will be evaluated.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: This could include

NOTE 2: It is often required that the supplier should have an ISO 9001 and/or an ISO 16949 certificate.

NOTE 3: Establish the specific target levels against which the supplier’s capability will be measured.

NOTE 4: This may include developing a method for evaluating risk related to the supplier or the proposed solution.

Output work products

|

4.2. Supply process group (SPL)

4.2.1. SPL.1 Supplier Tendering

Process ID: SPL.1

Process name: Supplier Tendering

Process purpose: The purpose of the Supplier Tendering Process is to establish an interface to respond to customer inquiries and requests for proposal, prepare and submit proposals, and confirm assignments through the establishment of a relevant agreement/contract.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: The nature of the commitment should be agreed and evidenced in writing. Only authorized signatories should be able to commit to a contract.

Output work products

|

4.2.2. SPL.2 Product Release

Process ID: SPL.2

Process name: Product Release

Process purpose: The purpose of the Product Release Process is to control the release of a product to the intended customer.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: The plan should point out which application parameters influencing the identified functionality are effective for which release.

NOTE 2: The release products may include programming tools where these are stated. In automotive terms a release may be associated with a sample e.g. A, B, C.

NOTE 3: A release numbering implementation may include

NOTE 4: A specified and consistent build environment should be used by all parties.

NOTE 5: Where relevant the software release should be programmed onto the correct hardware revision before release.

NOTE 6: The media type for delivery may be intermediate (placed on an adequate medium and delivered to the customer), or direct (such as delivered in firmware as part of the package) or a mix of both. The release may be delivered electronically by placement on a server. The release may also need to be duplicated before delivery.

NOTE 7: The packaging for certain types of media may need physical or electronic protection, for instance specific encryption techniques.

NOTE 8: The release note may include an introduction, the environmental requirements, installation procedures, product invocation, new feature identification and a list of defect resolutions, known defects and workarounds.

NOTE 9: Confirmation of receipt may be achieved by hand, electronically, by post, by telephone or through a distribution service provider.

NOTE 10: These practices are typically supported by the SUP.8 Configuration Management Process.

Output work products

|

4.3. System engineering process group (SYS)

4.3.1. SYS.1 Requirements Elicitation

Process ID: SYS.1

Process name: Requirements Elicitation

Process purpose: The purpose of the Requirements Elicitation Process is to gather, process, and track evolving stakeholder needs and requirements throughout the lifecycle of the product and/or service so as to establish a requirements baseline that serves as the basis for defining the needed work products.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: Requirements elicitation may involve the customer and the supplier.

NOTE 2: The agreed stakeholder requirements and evaluation of any change may be based on feasibility studies and/or cost and time analyses.

NOTE 3: The information needed to keep traceability for each customer requirement has to be gathered and documented.

NOTE 4: Reviewing the requirements and requests with the customer supports a better understanding of customer needs and expectations. Refer to the process SUP.4 Joint Review.

NOTE 5: Requirements change may arise from different sources, such as changing technology, stakeholder needs, or legal constraints.

NOTE 6: An information management system may be needed to manage, store and reference any information gained and needed in defining agreed stakeholder requirements.

NOTE 7: Any changes should be communicated to the customer before implementation in order that the impact, in terms of time, cost and functionality, can be evaluated.

NOTE 8: This may include joint meetings with the customer or formal communication to review the status for their requirements and requests. Refer to the process SUP.4 Joint Review.

NOTE 9: The formats of the information communicated by the supplier may include computer-aided design data and electronic data exchange.

Output work products

|

4.3.2. SYS.2 System Requirements Analysis

Process ID: SYS.2

Process name: System Requirements Analysis

Process purpose: The purpose of the System Requirements Analysis Process is to transform the defined stakeholder requirements into a set of system requirements that will guide the design of the system.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: Application parameters influencing functions and capabilities are part of the system requirements.

NOTE 2: For changes to the stakeholder’s requirements SUP.10 applies.

NOTE 3: Prioritizing typically includes the assignment of functional content to planned releases. Refer to SPL.2.BP1.

NOTE 4: The analysis of impact on cost and schedule supports the adjustment of project estimates. Refer to MAN.3.BP5.

NOTE 5: Verification criteria demonstrate that a requirement can be verified within agreed constraints and are typically used as the input for the development of the system test cases or other verification measures that ensure compliance with the system requirements.

NOTE 6: Verification which cannot be covered by testing is covered by SUP.2.

NOTE 7: Bidirectional traceability supports coverage, consistency and impact analysis.

NOTE 8: Consistency is supported by bidirectional traceability and can be demonstrated by review records.

Output work products

|
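NOTE 7 and NOTE 8 of SYS.2 state that bidirectional traceability supports coverage, consistency and impact analysis. A minimal Python sketch of such checks over a set of trace links is given here. The function names, the report keys, and the identifiers such as "STK-1" and "SYS-1" are hypothetical and chosen only for illustration; real projects usually hold this data in a requirements management tool.

```python
def trace_report(upstream_ids, downstream_ids, links):
    """Check trace links between two sets of items in both directions.

    links is a set of (upstream_id, downstream_id) pairs, e.g. linking
    stakeholder requirements to system requirements.
    """
    forward = {u: set() for u in upstream_ids}
    backward = {d: set() for d in downstream_ids}
    dangling = []
    for up, down in links:
        if up in forward and down in backward:
            forward[up].add(down)
            backward[down].add(up)
        else:
            # The link points to an item that does not exist (inconsistency).
            dangling.append((up, down))
    return {
        # Coverage: upstream items with no valid downstream item.
        "uncovered_upstream": sorted(u for u, ds in forward.items() if not ds),
        # Backward direction: downstream items without any valid origin.
        "unlinked_downstream": sorted(d for d, us in backward.items() if not us),
        "dangling_links": sorted(dangling),
    }


def impacted_by(upstream_id, links):
    """Impact analysis: downstream items linked to a changed upstream item."""
    return sorted(down for up, down in links if up == upstream_id)


stakeholder = ["STK-1", "STK-2", "STK-3"]
system = ["SYS-1", "SYS-2", "SYS-3"]
links = {("STK-1", "SYS-1"), ("STK-1", "SYS-2"), ("STK-2", "SYS-9")}

report = trace_report(stakeholder, system, links)
impact = impacted_by("STK-1", links)
```

In this example "STK-2" counts as uncovered because its only link points to "SYS-9", which does not exist. Such a check reports only structural gaps; whether linked items are consistent in content still needs a review, as NOTE 8 indicates.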

4.3.3. SYS.3 System Architectural Design

Process ID: SYS.3

Process name: System Architectural Design

Process purpose: The purpose of the System Architectural Design Process is to establish a system architectural design and identify which system requirements are to be allocated to which elements of the system, and to evaluate the system architectural design against defined criteria.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: The development of system architectural design typically includes the decomposition into elements across appropriate hierarchical levels.

NOTE 2: Dynamic behavior is determined by operating modes (e.g. start-up, shutdown, normal mode, calibration, diagnosis, etc.).

NOTE 3: Evaluation criteria may include quality characteristics (modularity, maintainability, expandability, scalability, reliability, security realization and usability) and results of make-buy-reuse analysis.

NOTE 4: Bidirectional traceability covers allocation of system requirements to the elements of the system architectural design.

NOTE 5: Bidirectional traceability supports coverage, consistency and impact analysis.

NOTE 6: Consistency is supported by bidirectional traceability and can be demonstrated by review records.

NOTE 7: System requirements typically include system architectural requirements. Refer to BP5.

Output work products

|

4.3.4. SYS.4 System Integration and Integration Test

Process ID: SYS.4

Process name: System Integration and Integration Test

Process purpose: The purpose of the System Integration and Integration Test Process is to integrate the system items to produce an integrated system consistent with the system architectural design and to ensure that the system items are tested to provide evidence for compliance of the integrated system items with the system architectural design, including the interfaces between system items.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: The interface descriptions between system elements are an input for the system integration test cases.

NOTE 2: Compliance to the architectural design means that the specified integration tests are suitable to prove that the interfaces between the system items fulfill the specification given by the system architectural design.

NOTE 3: The system integration test cases may focus on

NOTE 4: The system integration test may be supported using simulation of the environment (e.g. Hardware-in-the-Loop simulation, vehicle network simulations, digital mock-up).

NOTE 5: The system integration can be performed stepwise, integrating system items (e.g. the hardware elements as prototype hardware, peripherals (sensors and actuators), the mechanics and integrated software) to produce a system consistent with the system architectural design.

NOTE 6: See SUP.9 for handling of non-conformances.

NOTE 7: Bidirectional traceability supports coverage, consistency and impact analysis.

NOTE 8: Consistency is supported by bidirectional traceability and can be demonstrated by review records.

NOTE 9: Providing all necessary information from the test case execution in a summary enables other parties to judge the consequences.

Output work products

|

4.3.5. SYS.5 System Qualification Test

Process ID: SYS.5

Process name: System Qualification Test

Process purpose: The purpose of the System Qualification Test Process is to ensure that the integrated system is tested to provide evidence for compliance with the system requirements and that the system is ready for delivery.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: See SUP.9 for handling of non-conformances.

NOTE 2: Bidirectional traceability supports coverage, consistency and impact analysis.

NOTE 3: Consistency is supported by bidirectional traceability and can be demonstrated by review records.

NOTE 4: Providing all necessary information from the test case execution in a summary enables other parties to judge the consequences.

Output work products

|

4.4. Software engineering process group (SWE)

4.4.1. SWE.1 Software Requirements Analysis

Process ID: SWE.1

Process name: Software Requirements Analysis

Process purpose: The purpose of the Software Requirements Analysis Process is to transform the software related parts of the system requirements into a set of software requirements.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: Application parameters influencing functions and capabilities are part of the system requirements.

NOTE 2: In case of software development only, the system requirements and the system architecture refer to a given operating environment (see also note 5). In that case, stakeholder requirements should be used as the basis for identifying the required functions and capabilities of the software as well as for identifying application parameters influencing software functions and capabilities.

NOTE 3: Prioritizing typically includes the assignment of software content to planned releases. Refer to SPL.2.BP1.

NOTE 4: The analysis of impact on cost and schedule supports the adjustment of project estimates. Refer to MAN.3.BP5.

NOTE 5: The operating environment is defined as the system in which the software executes (e.g. hardware, operating system, etc.).

NOTE 6: Verification criteria demonstrate that a requirement can be verified within agreed constraints and are typically used as the input for the development of the software test cases or other verification measures that should demonstrate compliance with the software requirements.

NOTE 7: Verification which cannot be covered by testing is covered by SUP.2.

NOTE 8: Redundancy should be avoided by establishing a combination of these approaches that covers the project and the organizational needs.

NOTE 9: Bidirectional traceability supports coverage, consistency and impact analysis.

NOTE 10: Consistency is supported by bidirectional traceability and can be demonstrated by review records.

NOTE 11: In case of software development only, the system requirements and system architecture refer to a given operating environment (see also note 2). In that case, consistency and bidirectional traceability have to be ensured between stakeholder requirements and software requirements.

Output work products

|

4.4.2. SWE.2 Software Architectural Design

Process ID: SWE.2

Process name: Software Architectural Design

Process purpose: The purpose of the Software Architectural Design Process is to establish an architectural design and to identify which software requirements are to be allocated to which elements of the software, and to evaluate the software architectural design against defined criteria.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: The software is decomposed into elements across appropriate hierarchical levels down to the software components (the lowest level elements of the software architectural design) that are described in the detailed design.

NOTE 2: Dynamic behavior is determined by operating modes (e.g. start-up, shutdown, normal mode, calibration, diagnosis, etc.), processes and process intercommunication, tasks, threads, time slices, interrupts, etc.

NOTE 3: During evaluation of the dynamic behavior the target platform and potential loads on the target should be considered.

NOTE 4: Resource consumption is typically determined for resources like Memory (ROM, RAM, external / internal EEPROM or Data Flash), CPU load, etc.

NOTE 5: Evaluation criteria may include quality characteristics (modularity, maintainability, expandability, scalability, reliability, security realization and usability) and results of make-buy-reuse analysis.

NOTE 6: Bidirectional traceability covers allocation of software requirements to the elements of the software architectural design.

NOTE 7: Bidirectional traceability supports coverage, consistency and impact analysis.

NOTE 8: Consistency is supported by bidirectional traceability and can be demonstrated by review records.

Output work products

|

4.4.3. SWE.3 Software Detailed Design and Unit Construction

Process ID: SWE.3

Process name: Software Detailed Design and Unit Construction

Process purpose: The purpose of the Software Detailed Design and Unit Construction Process is to provide an evaluated detailed design for the software components and to specify and to produce the software units.

Process outcomes: As a result of successful implementation of this process:

and

Base practices

NOTE 1: Not all software units have dynamic behavior to be described.

NOTE 2: The results of the evaluation can be used as input for software unit verification.

NOTE 3: Redundancy should be avoided by establishing a combination of these approaches that covers the project and the organizational needs.

NOTE 4: Bidirectional traceability supports coverage, consistency and impact analysis.

NOTE 5: Consistency is supported by bidirectional traceability and can be demonstrated by review records.

Output work products

|

4.4.4. SWE.4 Software Unit Verification

Process ID: SWE.4

Process name: Software Unit Verification

Process purpose: The purpose of the Software Unit Verification Process is to verify software units to provide evidence for compliance of the software units with the software detailed design and with the non-functional software requirements.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: Possible techniques for unit verification include static/dynamic analysis, code reviews, unit testing etc.

NOTE 2: Possible criteria for unit verification include unit test cases, unit test data, static verification, coverage goals and coding standards such as the MISRA rules.

NOTE 3: The unit test specification may be implemented e.g. as a script in an automated test bench.

NOTE 4: Static verification may include static analysis, code reviews, checks against coding standards and guidelines, and other techniques.

NOTE 5: See SUP.9 for handling of non-conformances.

NOTE 6: See SUP.9 for handling of non-conformances.

NOTE 7: Bidirectional traceability supports coverage, consistency and impact analysis.

NOTE 8: Consistency is supported by bidirectional traceability and can be demonstrated by review records.

NOTE 9: Providing all necessary information from the test case execution in a summary enables other parties to judge the consequences.

Output work products

|
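NOTE 3 of SWE.4 mentions that a unit test specification may be implemented as a script in an automated test bench. The following Python sketch illustrates the idea with the standard `unittest` module. The unit under test, `saturate`, and all test case names are hypothetical; automotive software units are commonly written in C and tested with dedicated tooling, so this only shows the principle of an executable test specification.

```python
import unittest


def saturate(value, lower, upper):
    """Hypothetical software unit: limit value to the range [lower, upper]."""
    if lower > upper:
        raise ValueError("lower must not exceed upper")
    return max(lower, min(value, upper))


class SaturateTest(unittest.TestCase):
    """Each test method is one unit test case of the specification."""

    def test_value_within_range_is_unchanged(self):
        self.assertEqual(saturate(5, 0, 10), 5)

    def test_value_below_range_is_set_to_lower_limit(self):
        self.assertEqual(saturate(-3, 0, 10), 0)

    def test_value_above_range_is_set_to_upper_limit(self):
        self.assertEqual(saturate(42, 0, 10), 10)

    def test_invalid_range_is_rejected(self):
        with self.assertRaises(ValueError):
            saturate(1, 10, 0)


def run_unit_tests():
    """Run all test cases and return the result object as a test summary."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(SaturateTest)
    return unittest.TextTestRunner(verbosity=0).run(suite)


result = run_unit_tests()
```

The returned result object carries the number of executed, failed and erroneous test cases, which could feed the kind of summary that NOTE 9 refers to.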

4.4.5. SWE.5 Software Integration and Integration Test

Process ID: SWE.5

Process name: Software Integration and Integration Test

Process purpose: The purpose of the Software Integration and Integration Test Process is to integrate the software units into larger software items up to a complete integrated software consistent with the software architectural design and to ensure that the software items are tested to provide evidence for compliance of the integrated software items with the software architectural design, including the interfaces between the software units and between the software items.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: Compliance to the architectural design means that the specified integration tests are suitable to prove that the interfaces between the software units and between the software items fulfill the specification given by the software architectural design.

NOTE 2: The software integration test cases may focus on

NOTE 4: See SUP.9 for handling of non-conformances.

NOTE 5: The software integration test may be supported by using hardware debug interfaces or simulation environments (e.g. Software-in-the-Loop Simulation).

NOTE 6: Bidirectional traceability supports coverage, consistency and impact analysis.

NOTE 7: Consistency is supported by bidirectional traceability and can be demonstrated by review records.

NOTE 8: Providing all necessary information from the test case execution in a summary enables other parties to judge the consequences.

Output work products

|

4.4.6. SWE.6 Software Qualification Test

Process ID: SWE.6

Process name: Software Qualification Test

Process purpose: The purpose of the Software Qualification Test Process is to ensure that the integrated software is tested to provide evidence for compliance with the software requirements.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: See SUP.9 for handling of non-conformances.

NOTE 2: Bidirectional traceability supports coverage, consistency and impact analysis.

NOTE 3: Consistency is supported by bidirectional traceability and can be demonstrated by review records.

NOTE 4: Providing all necessary information from the test case execution in a summary enables other parties to judge the consequences.

Output work products

|

4.5. Supporting process group (SUP)

4.5.1. SUP.1 Quality Assurance

Process ID: SUP.1

Process name: Quality Assurance

Process purpose: The purpose of the Quality Assurance Process is to provide independent and objective assurance that work products and processes comply with predefined provisions and plans and that non-conformances are resolved and further prevented.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: Aspects of independence may be financial and/or organizational structure.

NOTE 2: Quality assurance may be coordinated with, and make use of, the results of other processes such as verification, validation, joint review, audit and problem management.

NOTE 3: Process quality assurance may include process assessments and audits, problem analysis, regular check of methods, tools, documents and the adherence to defined processes, reports and lessons learned that improve processes for future projects.

NOTE 4: Work product quality assurance may include reviews, problem analysis, reports and lessons learned that improve the work products for further use.

NOTE 5: Relevant work product requirements may include requirements from applicable standards.

NOTE 6: Non-conformances detected in work products may be entered into the problem resolution management process (SUP.9) to document, analyze, resolve, track to closure and prevent the problems.

NOTE 7: Relevant process goals may include goals from applicable standards.

NOTE 8: Problems detected in the process definition or implementation may be entered into a process improvement process (PIM.3) to describe, record, analyze, resolve, track to closure and prevent the problems.

Output work products

4.5.2. SUP.2 Verification

Process ID: SUP.2

Process name: Verification

Process purpose: The purpose of the Verification Process is to confirm that each work product of a process or project properly reflects the specified requirements.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: Verification strategy is implemented through a plan.
NOTE 2: Software and system verification may provide objective evidence that the outputs of a particular phase of the software development life cycle (e.g. requirements, design, implementation, testing) meet all of the specified requirements for that phase.
NOTE 3: Verification methods and techniques may include inspections, peer reviews (see also SUP.4), audits, walkthroughs and analysis.

Output work products

4.5.3. SUP.4 Joint Review

Process ID: SUP.4

Process name: Joint Review

Process purpose: The purpose of the Joint Review Process is to maintain a common understanding with the stakeholders of the progress against the objectives of the agreement and what should be done to help ensure development of a product that satisfies the stakeholders. Joint reviews are at both project management and technical levels and are held throughout the life of the project.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: The following items may be addressed: Scope and purpose of the review; Products and problems to be reviewed; Entry and exit criteria; Meeting agenda; Roles and participants; Distribution list; Responsibilities; Resource and facility requirements; Used tools (checklists, scenario for perspective based reviews etc.).

Output work products

4.5.4. SUP.7 Documentation

Process ID: SUP.7

Process name: Documentation

Process purpose: The purpose of the Documentation Process is to develop and maintain the recorded information produced by a process.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: A documentation management strategy may define the controls needed to approve documentation for adequacy prior to issue; to review and update as necessary and re-approve documentation; to ensure that changes and the current revision status of documentation are identified; to ensure that relevant versions of documentation are available at points of issue; to ensure that documentation remains legible and readily identifiable; to ensure the controlled distribution of documentation; to prevent unintended use of obsolete documentation; and may also specify the levels of confidentiality, copyright or disclaimers of liability for the documentation.

NOTE 2: The documentation intended for use by system and software users should accurately describe the system and software and how it is to be used in a clear and useful manner for them.
NOTE 3: Documentation should be checked through a verification or validation process.

NOTE 4: If the documentation is part of a product baseline or if its control and stability are important, it should be modified and distributed in accordance with process SUP.8 Configuration management.

Output work products

4.5.5. SUP.8 Configuration Management

Process ID: SUP.8

Process name: Configuration Management

Process purpose: The purpose of the Configuration Management Process is to establish and maintain the integrity of all work products of a process or project and make them available to affected parties.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: The configuration management strategy typically supports the handling of product/software variants which may be caused by different sets of application parameters or by other causes.
NOTE 2: The branch management strategy specifies in which cases branching is permissible, whether authorization is required, how branches are merged, and which activities are required to verify that all changes have been consistently integrated without damage to other changes or to the original software.

NOTE 3: Configuration control is typically applied for the products that are delivered to the customer, designated internal work products, acquired products, tools and other configuration items that are used in creating and describing these work products.

NOTE 4: For baseline issues refer also to the product release process SPL.2.

NOTE 5: Regular reporting of the configuration status (e.g. how many configuration items are currently under work, checked in, tested, released, etc.) supports project management activities and dedicated project phases like software integration.
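
The configuration status report described in NOTE 5 can be sketched in a few lines of Python. This is only an illustration: the item IDs, the record layout and the function name `status_report` are assumptions, and the status values are taken from the examples in the note.

```python
from collections import Counter

# Hypothetical configuration items with their current status.
items = [
    {"id": "CI-001", "status": "under work"},
    {"id": "CI-002", "status": "checked in"},
    {"id": "CI-003", "status": "tested"},
    {"id": "CI-004", "status": "released"},
    {"id": "CI-005", "status": "under work"},
]


def status_report(items):
    """Count configuration items per status."""
    return Counter(item["status"] for item in items)


report = status_report(items)
for status, count in sorted(report.items()):
    print(f"{status}: {count}")
```

A report of this kind, generated at regular intervals, gives project management a quick view of how many items are still under work before a baseline or software integration step.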

NOTE 6: A typical implementation is performing baseline and configuration management audits.

NOTE 7: Backup, storage and archiving may need to extend beyond the guaranteed lifetime of available storage media. Relevant configuration items affected may include those referenced in NOTE 2 and NOTE 3. Availability may be specified by contract requirements.

Output work products

4.5.6. SUP.9 Problem Resolution Management

Process ID: SUP.9

Process name: Problem Resolution Management

Process purpose: The purpose of the Problem Resolution Management Process is to ensure that problems are identified, analyzed, managed and controlled to resolution.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: Problem resolution activities can be different during the product life cycle, e.g. during prototype construction and series development.

NOTE 2: Supporting information typically includes the origin of the problem, how it can be reproduced, environmental information, by whom it has been detected, etc.
NOTE 3: Unique identification supports traceability to changes made.

NOTE 4: Problem categorization (e.g. A, B, C, light, medium, severe) may be based on severity, impact, criticality, urgency, relevance for the change process, etc.

NOTE 5: Appropriate actions may include the initiating of a change request. See SUP.10 for managing of change requests.
NOTE 6: The implementation of process improvements (to prevent problems) is done in the process improvement process (PIM.3). The implementation of generic project management improvements (e.g. lessons learned) is part of the project management process (MAN.3). The implementation of generic work product related improvements is part of the quality assurance process (SUP.1).

NOTE 7: Collected data typically contains information about where the problems occurred, how and when they were found, what their impacts were, etc.

Output work products

4.5.7. SUP.10 Change Request Management

Process ID: SUP.10

Process name: Change Request Management

Process purpose: The purpose of the Change Request Management Process is to ensure that change requests are managed, tracked and implemented.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: A status model for change requests may contain: open, under investigation, approved for implementation, allocated, implemented, fixed, closed, etc.
NOTE 2: Typical analysis criteria are: resource requirements, scheduling issues, risks, benefits, etc.
NOTE 3: Change request activities ensure that change requests are systematically identified, described, recorded, analyzed, implemented, and managed.
NOTE 4: The change request management strategy may cover different proceedings across the product life cycle, e.g. during prototype construction and series development.
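
A status model such as the one listed in NOTE 1 can be expressed as a small state machine. The sketch below uses the status names from the note; the allowed transitions, the class `ChangeRequest` and the ID format are assumptions made for illustration, since the model itself is left to the project's change request management strategy.

```python
from enum import Enum


class Status(Enum):
    OPEN = "open"
    UNDER_INVESTIGATION = "under investigation"
    APPROVED = "approved for implementation"
    ALLOCATED = "allocated"
    IMPLEMENTED = "implemented"
    FIXED = "fixed"
    CLOSED = "closed"


# Assumed transitions; a real project defines these in its strategy.
ALLOWED = {
    Status.OPEN: {Status.UNDER_INVESTIGATION},
    Status.UNDER_INVESTIGATION: {Status.APPROVED, Status.CLOSED},  # CLOSED here = rejected
    Status.APPROVED: {Status.ALLOCATED},
    Status.ALLOCATED: {Status.IMPLEMENTED},
    Status.IMPLEMENTED: {Status.FIXED},
    Status.FIXED: {Status.CLOSED},
    Status.CLOSED: set(),
}


class ChangeRequest:
    """Hypothetical change request that records its status history."""

    def __init__(self, cr_id):
        self.cr_id = cr_id
        self.status = Status.OPEN
        self.history = [Status.OPEN]

    def move_to(self, new_status):
        """Change status, rejecting transitions the model does not allow."""
        if new_status not in ALLOWED[self.status]:
            raise ValueError(
                f"{self.cr_id}: {self.status.value} -> {new_status.value} not allowed"
            )
        self.status = new_status
        self.history.append(new_status)


cr = ChangeRequest("CR-42")
cr.move_to(Status.UNDER_INVESTIGATION)
cr.move_to(Status.APPROVED)
```

Keeping the history alongside the current status is one simple way to make the tracking of a change request to closure auditable.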

NOTE 5: A Change Control Board (CCB) is a common mechanism used to approve change requests.
NOTE 6: Prioritization of change requests may be done by allocation to releases.

NOTE 7: Bidirectional traceability supports consistency, completeness and impact analysis.

Output work products

4.6. Management process group (MAN)

4.6.1. MAN.3 Project Management

Process ID: MAN.3

Process name: Project Management

Process purpose: The purpose of the Project Management Process is to identify, establish, and control the activities and resources necessary for a project to produce a product, in the context of the project’s requirements and constraints.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: This typically means that the project life cycle and the customer’s development process are consistent with each other.

NOTE 2: A structure and a manageable size of the activities and related work packages support adequate progress monitoring.
NOTE 3: Project activities typically cover engineering, management and supporting processes.

NOTE 4: Appropriate estimation methods should be used.
NOTE 5: Examples of necessary resources are people, infrastructure (such as tools, test equipment, communication mechanisms…) and hardware/materials.
NOTE 6: Project risks (using MAN.5) and quality criteria (using SUP.1) may be considered.
NOTE 7: Estimations and resources typically include engineering, management and supporting processes.

NOTE 8: In the case of deviations from the required skills and knowledge, training is typically provided.

NOTE 9: Project interfaces relate to engineering, management and supporting processes.

NOTE 10: This relates to all engineering, management and supporting processes.

NOTE 11: Project reviews may be executed at regular intervals by the management. At the end of a project, a project review contributes to identifying e.g. best practices and lessons learned.

Output work products

4.6.2. MAN.5 Risk Management

Process ID: MAN.5

Process name: Risk Management

Process purpose: The purpose of the Risk Management Process is to identify, analyze, treat and monitor the risks continuously.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: Risks may include technical, economic and timing risks.

NOTE 2: Examples of risk areas that are typically analyzed for potential risk reasons or risk factors include: cost, schedule, effort, resource, and technical.
NOTE 3: Examples of risk factors may include: unsolved and solved trade-offs, decisions not to implement a project feature, design changes, lack of expected resources.

NOTE 4: Risks are normally analyzed to determine their probability, consequence and severity.
NOTE 5: Different techniques may be used to analyze a system in order to understand if risks exist, for example, functional analysis, simulation, FMEA, FTA etc.
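
The analysis mentioned in NOTE 4 is often turned into a ranking so that the most significant risks are treated first. The following Python sketch assumes one common convention, multiplying probability by a consequence score; Automotive SPICE does not prescribe this formula, and the class `Risk`, the scales and the sample risks are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: float  # assumed scale: 0.0 (impossible) to 1.0 (certain)
    consequence: int    # assumed scale: 1 (minor) to 5 (critical)

    @property
    def exposure(self) -> float:
        """Assumed ranking metric: probability times consequence."""
        return self.probability * self.consequence


# Hypothetical project risks.
risks = [
    Risk("Key engineer unavailable", 0.2, 3),
    Risk("Late delivery of hardware sample", 0.5, 4),
    Risk("Toolchain upgrade breaks build", 0.1, 5),
]

# Highest exposure first.
ranked = sorted(risks, key=lambda r: r.exposure, reverse=True)
for risk in ranked:
    print(f"{risk.name}: exposure {risk.exposure:.2f}")
```

Other schemes, such as a qualitative probability/severity matrix, are equally valid; the point is that the ranking criterion is defined once and applied consistently.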

NOTE 6: Major risks may need to be communicated to and monitored by higher levels of management.

NOTE 7: Corrective actions may involve developing and implementing new mitigation strategies or adjusting the existing strategies.

Output work products

4.6.3. MAN.6 Measurement

Process ID: MAN.6

Process name: Measurement

Process purpose: The purpose of the Measurement Process is to collect and analyze data relating to the products developed and processes implemented within the organization and its projects, to support effective management of the processes and to objectively demonstrate the quality of the products.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: Information products are produced as a result of the analysis of data in order to summarize and communicate information.

Output work products

4.7. Process improvement process group (PIM)

4.7.1. PIM.3 Process Improvement

Process ID: PIM.3

Process name: Process Improvement

Process purpose: The purpose of the Process Improvement Process is to continually improve the organization’s effectiveness and efficiency through the processes used and aligned with the business need.

Process outcomes: As a result of successful implementation of this process:

Base practices

NOTE 1: The process improvement process is a generic process, which can be used at all levels (e.g. organizational level, process level, project level, etc.) and which can be used to improve all other processes.
NOTE 2: Commitment at all levels of management may support process improvement. Personal goals may be set for the relevant managers to enforce management commitment.