Automotive SPICE®

Process Reference Model

Process Assessment Model

Version 4.0

Title: Automotive SPICE Process Assessment / Reference Model

Author(s): VDA Working Group 13

Version: 4.0

Date: 2023-11-29

Status: Released

Copyright notice

This document is a revision of the Automotive SPICE process assessment model and process reference model 3.1, which has been developed by the Working Group 13 of the Quality Management Center (QMC) in the German Association of the Automotive Industry.

This document reproduces relevant material from:

ISO/IEC 33020:2019

Information technology – Process assessment – Process measurement framework for assessment of process capability

ISO/IEC 33020:2019 provides the following copyright release statement:

‘Users of this International Standard may reproduce subclauses 5.2, 5.3, 5.4 and 5.6 as part of any process assessment model or maturity model so that it can be used for its intended purpose.’

ISO/IEC 15504-5:2006

Information Technology – Process assessment – Part 5: An exemplar Process Assessment Model

ISO/IEC 15504-5:2006 provides the following copyright release statement:

‘Users of this part of ISO/IEC 15504 may freely reproduce the detailed descriptions contained in the exemplar assessment model as part of any tool or other material to support the performance of process assessments, so that it can be used for its intended purpose.’

Relevant material from one of the mentioned standards is incorporated under the copyright release notice.

Acknowledgement

The VDA, the VDA QMC and the Project Group 13 explicitly acknowledge the high-quality work carried out by the members of the intacs® working groups. We would like to thank all involved people who have contributed to the development and publication of Automotive SPICE®.

Derivative works

You may not alter, transform, or build upon this work without the prior consent of the VDA Quality Management Center. Such consent may be given provided ISO copyright is not infringed.

The detailed descriptions contained in this document may be incorporated as part of any tool or other material to support the performance of process assessments, so that this process assessment model can be used for its intended purpose, provided that any such material is not offered for sale.

All distribution of derivative works shall be made at no cost to the recipient.

Document distribution

The Automotive SPICE® process assessment model may only be obtained by download from the www.vda-qmc.de web site. It is not permitted for the recipient to further distribute the document.

Change requests

Any problems or change requests should be reported through the defined mechanism at the www.vda-qmc.de web site.

Trademark notice

Automotive SPICE® is a registered trademark of the Verband der Automobilindustrie e.V. (VDA). For further information about Automotive SPICE®, visit www.vda-qmc.de.

Document history

| Version | Date | By | Notes |
|---|---|---|---|
| 2.0 | 2005-05-04 | AutoSIG / SUG | DRAFT RELEASE, pending final editorial review |
| 2.1 | 2005-06-24 | AutoSIG / SUG | Editorial review comments implemented. Updated to reflect changes in FDIS 15504-5 |
| 2.2 | 2005-08-21 | AutoSIG / SUG | Final checks implemented: FORMAL RELEASE |
| 2.3 | 2007-05-05 | AutoSIG / SUG | Revision following CCB: FORMAL RELEASE |
| 2.4 | 2008-08-01 | AutoSIG / SUG | Revision following CCB: FORMAL RELEASE |
| 2.5 | 2010-05-10 | AutoSIG / SUG | Revision following CCB: FORMAL RELEASE |
| 3.0 | 2015-07-16 | VDA QMC WG13 | Changes: See release notes |
| 3.1 | 2017-11-01 | VDA QMC WG13 | Changes: See www.automotivespice.com |
| 4.0 | 2023-10-20 | VDA QMC WG13 | Complete revision of PAM |

Table of contents

3. Process capability determination 15

3.1. Process reference model 16

3.1.1. Primary life cycle processes category 17

3.1.2. Supporting life cycle processes category 20

3.1.3. Organizational life cycle processes category 20

3.2.1. Process capability levels and process attributes 21

3.2.2. Process attribute rating 23

3.2.3. Rating and aggregation method 24

3.2.4. Process capability level model 26

3.3. Process assessment model 27

3.3.1. Assessment indicators 28

3.3.2. Understanding information Items and work products 29

3.3.3. Understanding the level of abstraction of a PAM 31

3.3.4. Why a PRM and PAM are not a lifecycle model or development process blueprint 31

4. Process reference model and performance indicators (Level 1) 33

4.1. Acquisition process group (ACQ) 34

4.1.1. ACQ.4 Supplier Monitoring 34

4.2. Supply process group (SPL) 37

4.2.1. SPL.2 Product Release 37

4.3. System engineering process group (SYS) 40

4.3.1. SYS.1 Requirements Elicitation 40

4.3.2. SYS.2 System Requirements Analysis 43

4.3.3. SYS.3 System Architectural Design 46

4.3.4. SYS.4 System Integration and Integration Verification 49

4.3.5. SYS.5 System Verification 52

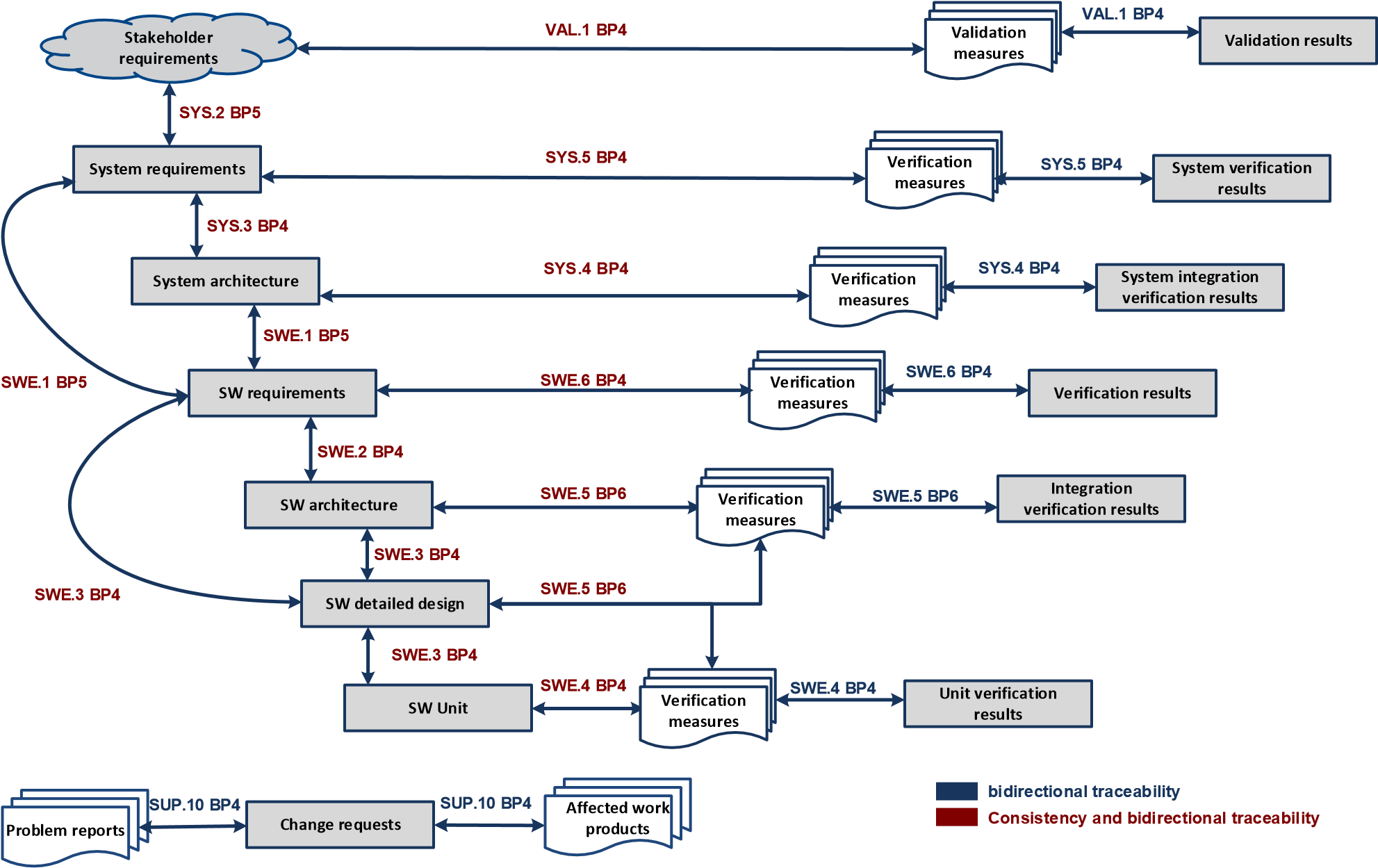

4.4. Software engineering process group (SWE) 55

4.4.1. SWE.1 Software Requirements Analysis 55

4.4.2. SWE.2 Software Architectural Design 58

4.4.3. SWE.3 Software Detailed Design and Unit Construction 61

4.4.4. SWE.4 Software Unit Verification 64

4.4.5. SWE.5 Software Component Verification and Integration Verification 67

4.4.6. SWE.6 Software Verification 71

4.5. Validation process group (VAL) 74

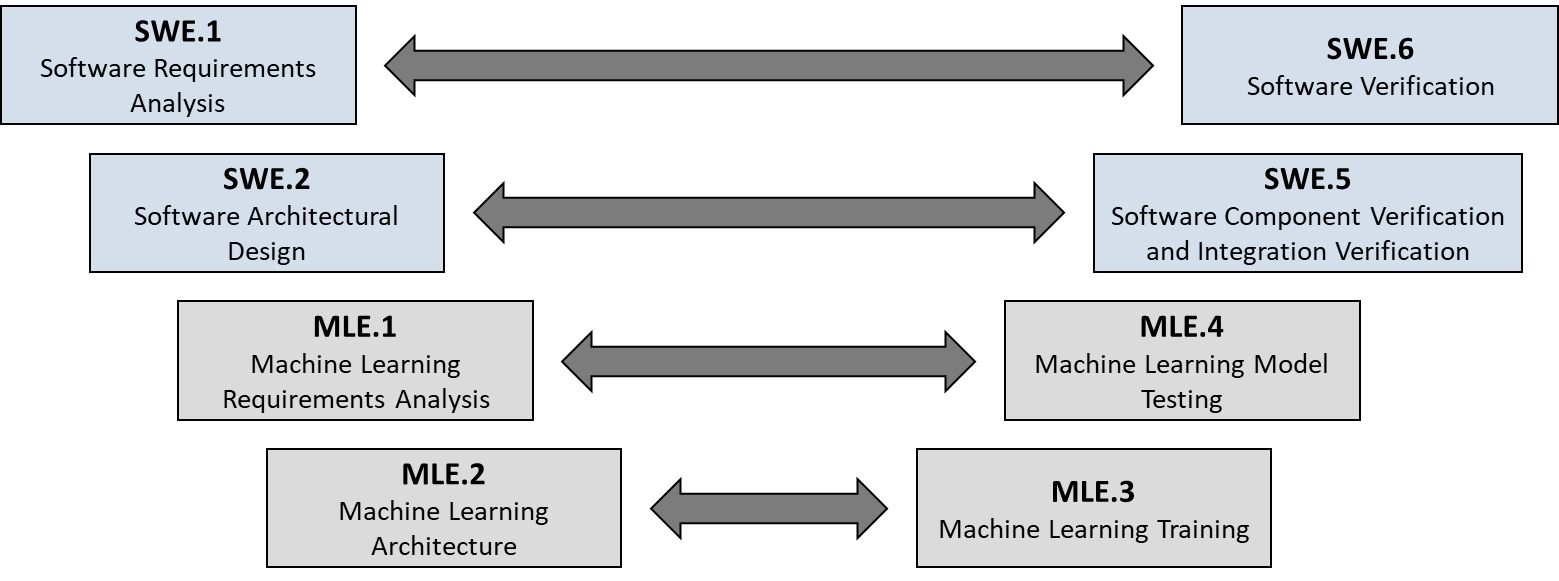

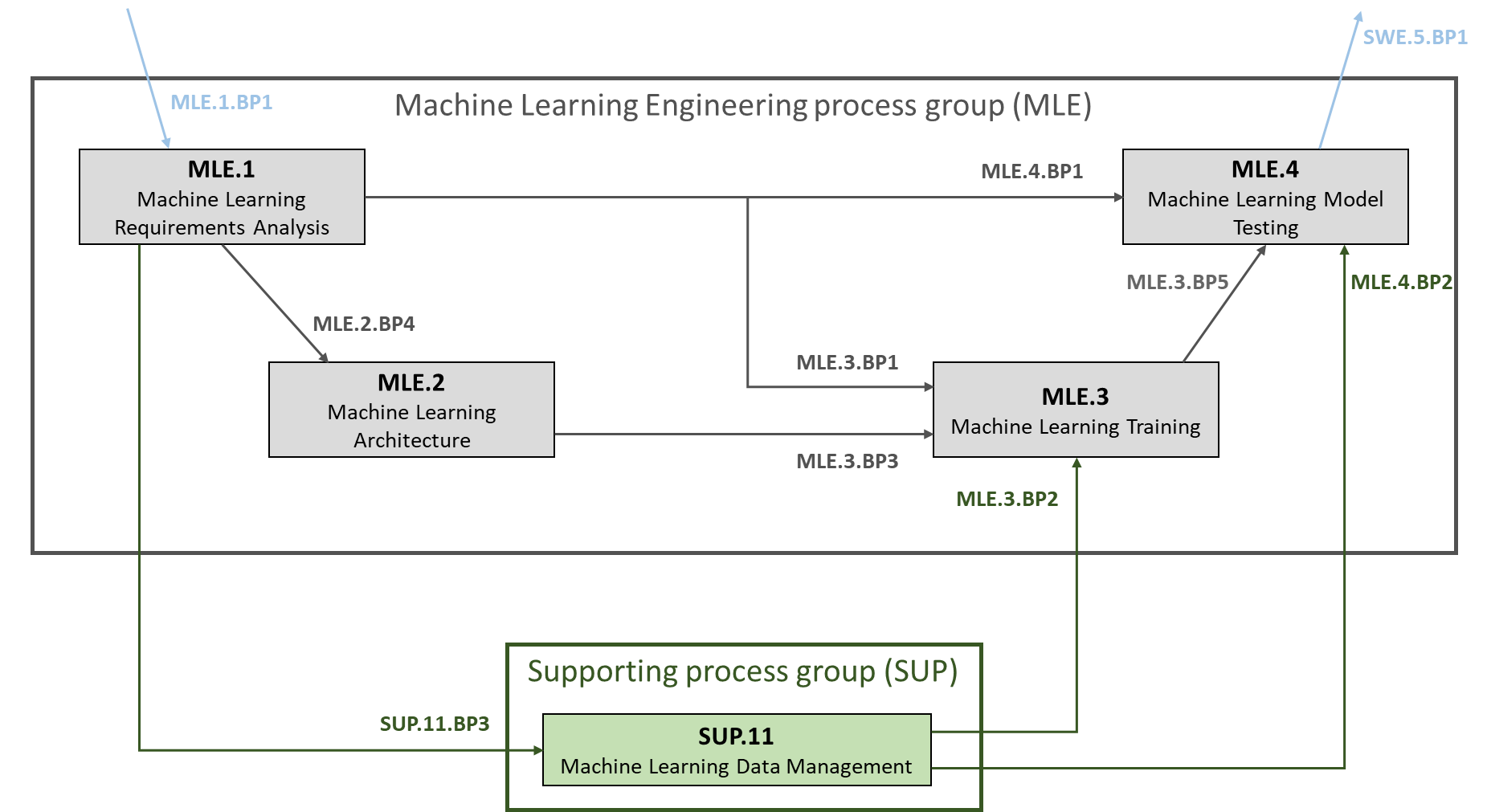

4.6. Machine Learning Engineering process group (MLE) 77

4.6.1. MLE.1 Machine Learning Requirements Analysis 77

4.6.2. MLE.2 Machine Learning Architecture 80

4.6.3. MLE.3 Machine Learning Training 83

4.6.4. MLE.4 Machine Learning Model Testing 86

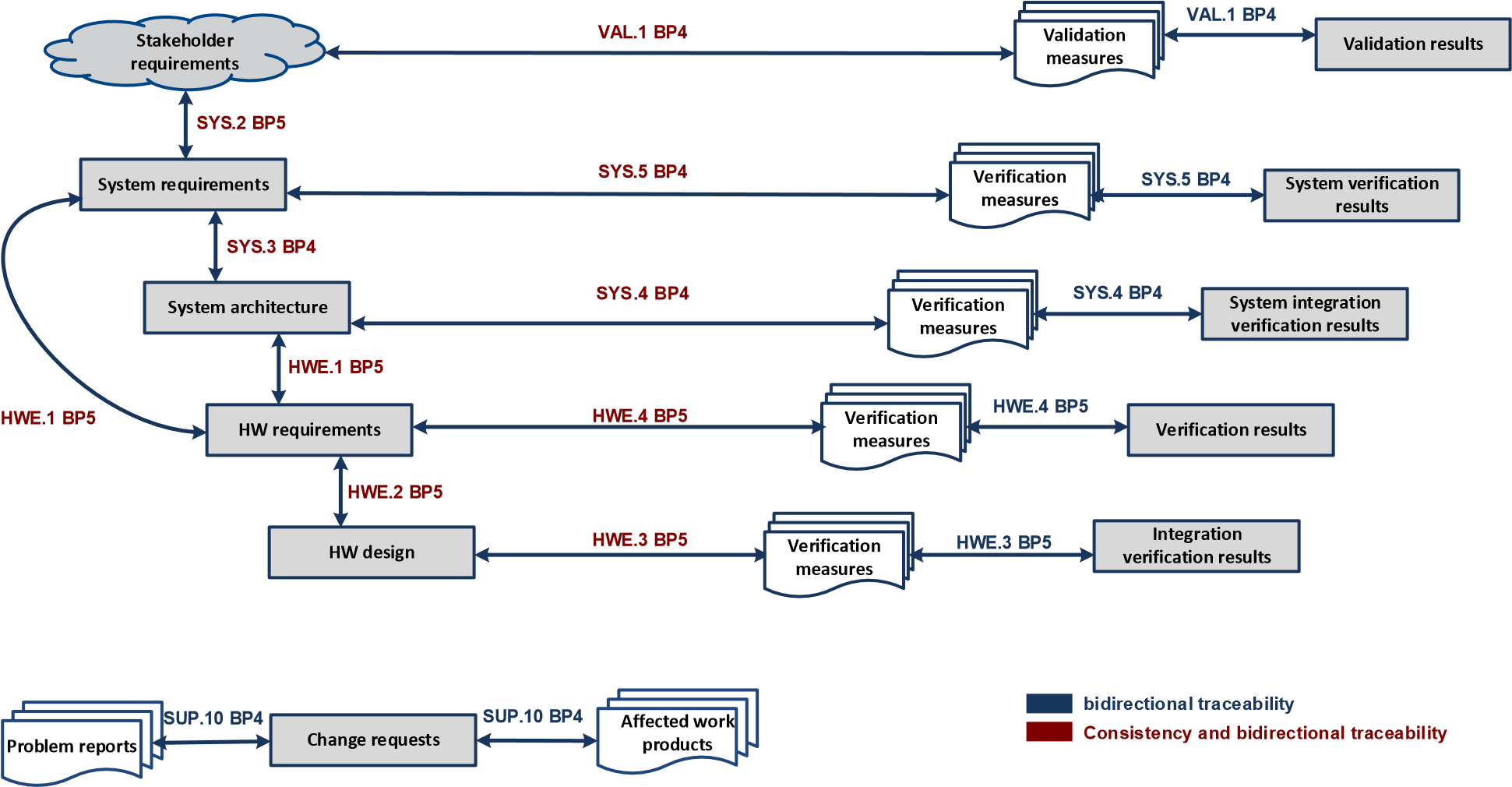

4.7. Hardware Engineering process group (HWE) 90

4.7.1. HWE.1 Hardware Requirements Analysis 90

4.7.2. HWE.2 Hardware Design 94

4.7.3. HWE.3 Verification against Hardware Design 97

4.7.4. HWE.4 Verification against Hardware Requirements 100

4.8. Supporting process group (SUP) 103

4.8.1. SUP.1 Quality Assurance 103

4.8.2. SUP.8 Configuration Management 106

4.8.3. SUP.9 Problem Resolution Management 110

4.8.4. SUP.10 Change Request Management 113

4.8.5. SUP.11 Machine Learning Data Management 116

4.9. Management process group (MAN) 119

4.9.1. MAN.3 Project Management 119

4.9.2. MAN.5 Risk Management 123

4.10. Process improvement process group (PIM) 129

4.10.1. PIM.3 Process Improvement 129

4.11. Reuse process group (REU) 132

4.11.1. REU.2 Management of Products for Reuse 132

5. Process capability levels and process attributes 135

5.1. Process capability level 0: Incomplete process 135

5.2. Process capability Level 1: Performed process 135

5.2.1. PA 1.1 Process performance process attribute 136

5.3. Process capability Level 2: Managed process 136

5.3.1. PA 2.1 Process performance management process attribute 137

5.3.2. PA 2.2 Work product management process attribute 141

5.4. Process capability Level 3: Established process 143

5.4.1. PA 3.1 Process definition process attribute 144

5.4.2. PA 3.2 Process deployment process attribute 147

5.5. Process capability Level 4: Predictable process 150

5.5.1. PA 4.1 Quantitative analysis process attribute 150

5.5.2. PA 4.2 Quantitative control process attribute 153

5.6. Process capability Level 5: Innovating process 155

5.6.1. PA 5.1 Process innovation process attribute 155

5.6.2. PA 5.2 Process innovation implementation process attribute 157

Annex A Conformity statements 160

Annex A.2 Conformance to the requirements for process reference models 160

Annex A.3 Conformance to the requirements for process assessment models 160

Annex A.4 Conformance to the requirements for measurement frameworks 162

Annex B Information Item Characteristics 163

Annex C Key concepts and guidance 185

Annex C.1 The “Plug-in” concept 185

Annex C.2 “Element”, “Component”, and “Unit” 186

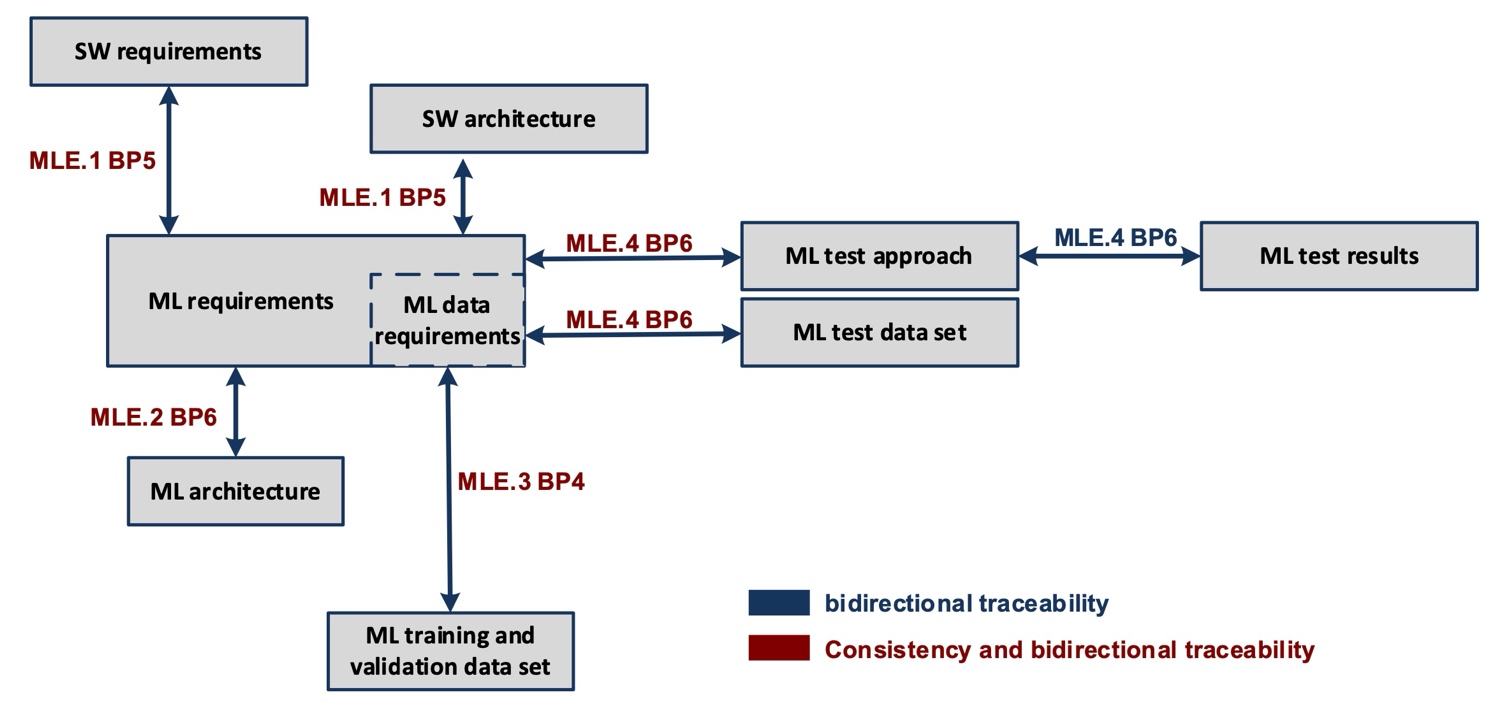

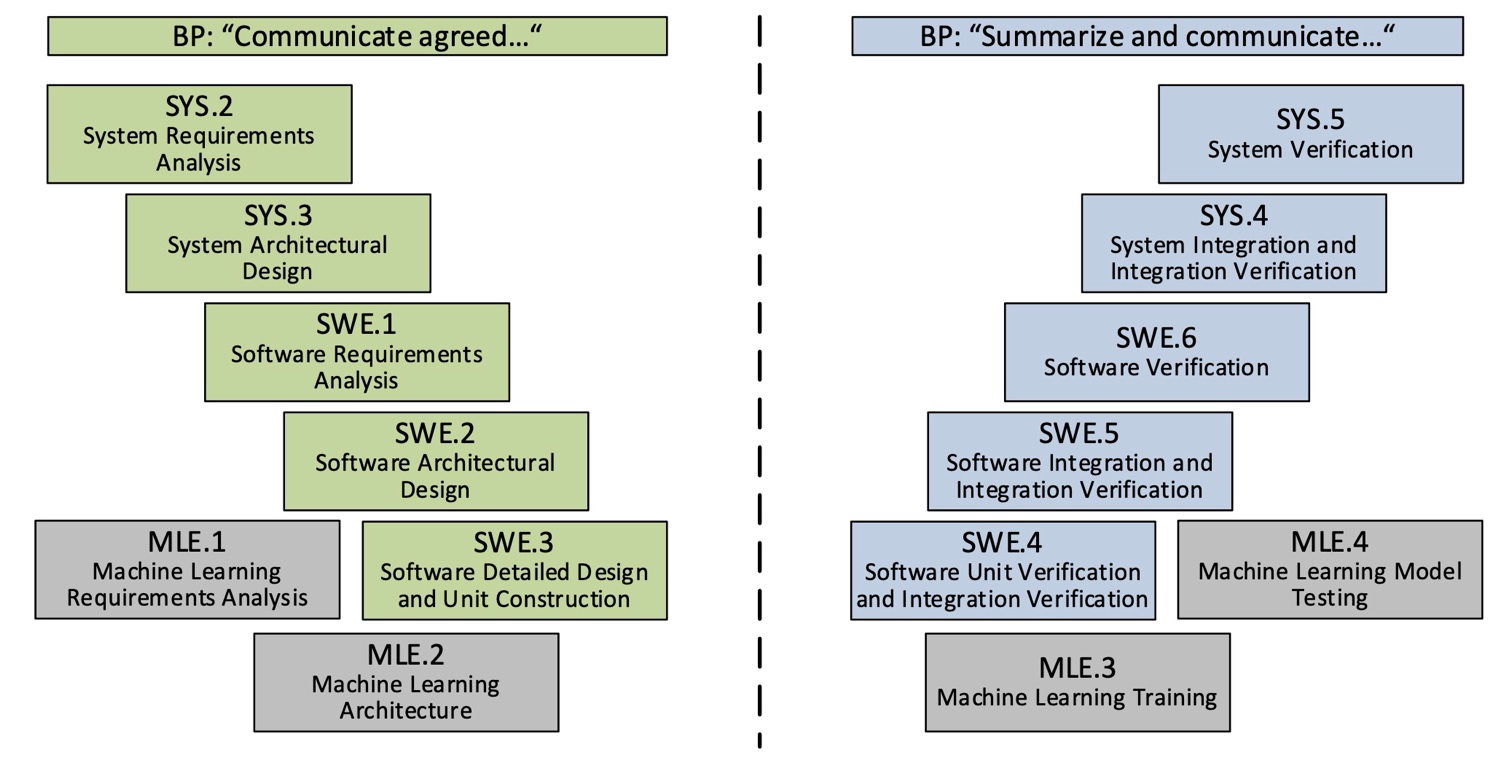

Annex C.3 Integration of Machine Learning Engineering Processes 187

Annex C.4 Example of an ML Architecture 189

Annex C.5 Traceability and consistency 190

Annex C.6 “Agree” and “Summarize and Communicate” 192

Annex C.7 Key Changes in Automotive SPICE 4.0 193

Terminology – “Measure” vs. “Metric” 193

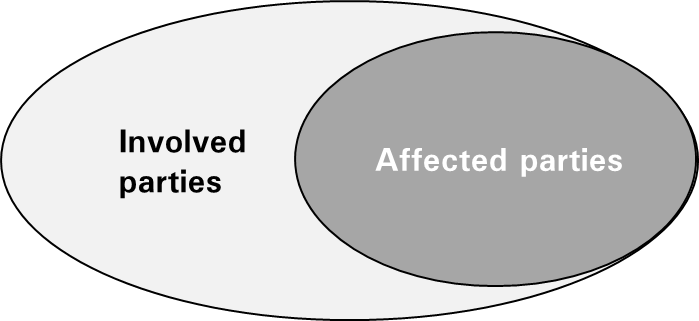

Terminology – “Affected Party” (Level 1) vs. “Involved Party” (Level 2) 193

List of Figures

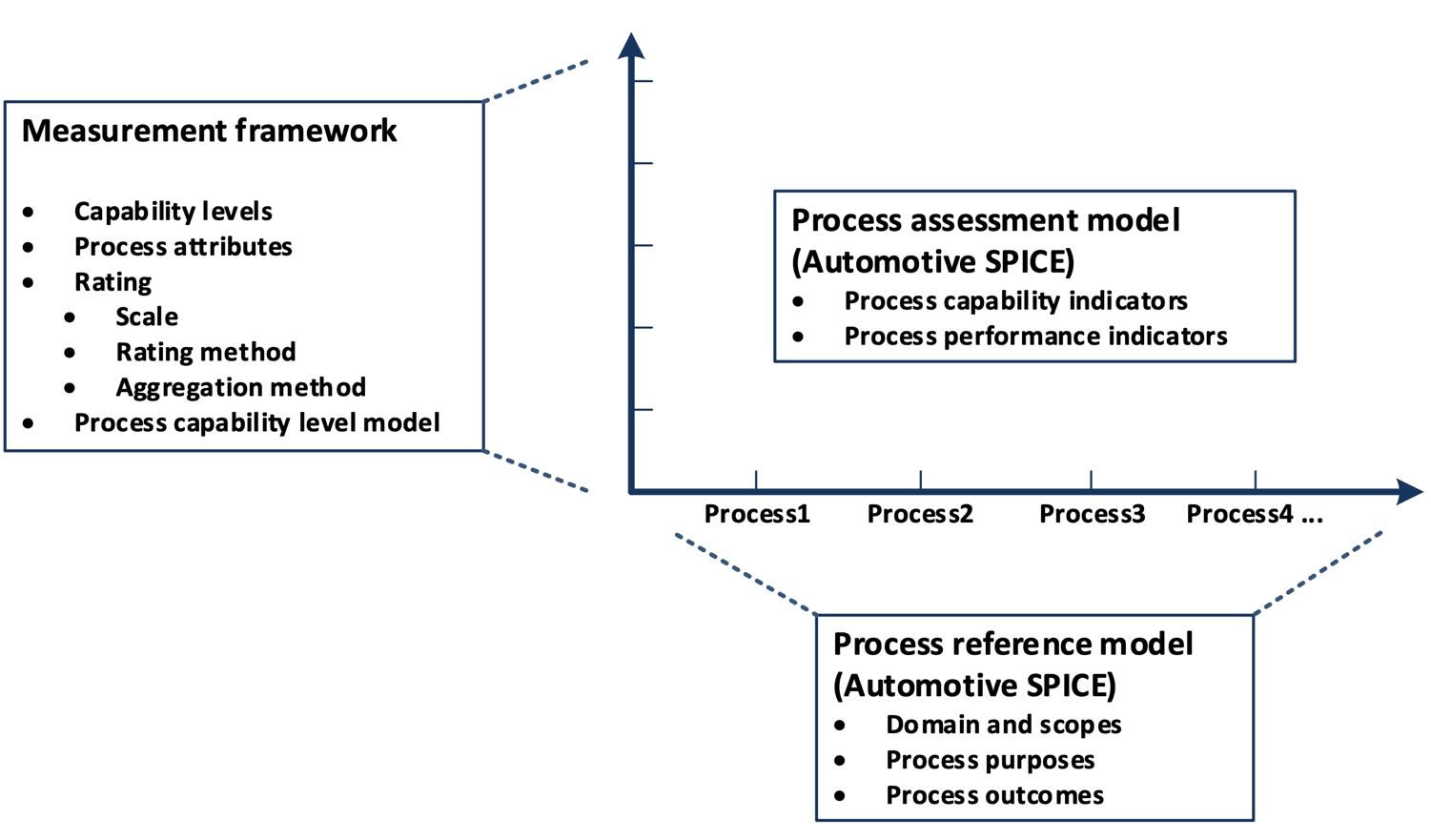

Figure 1 — Process assessment model relationship 15

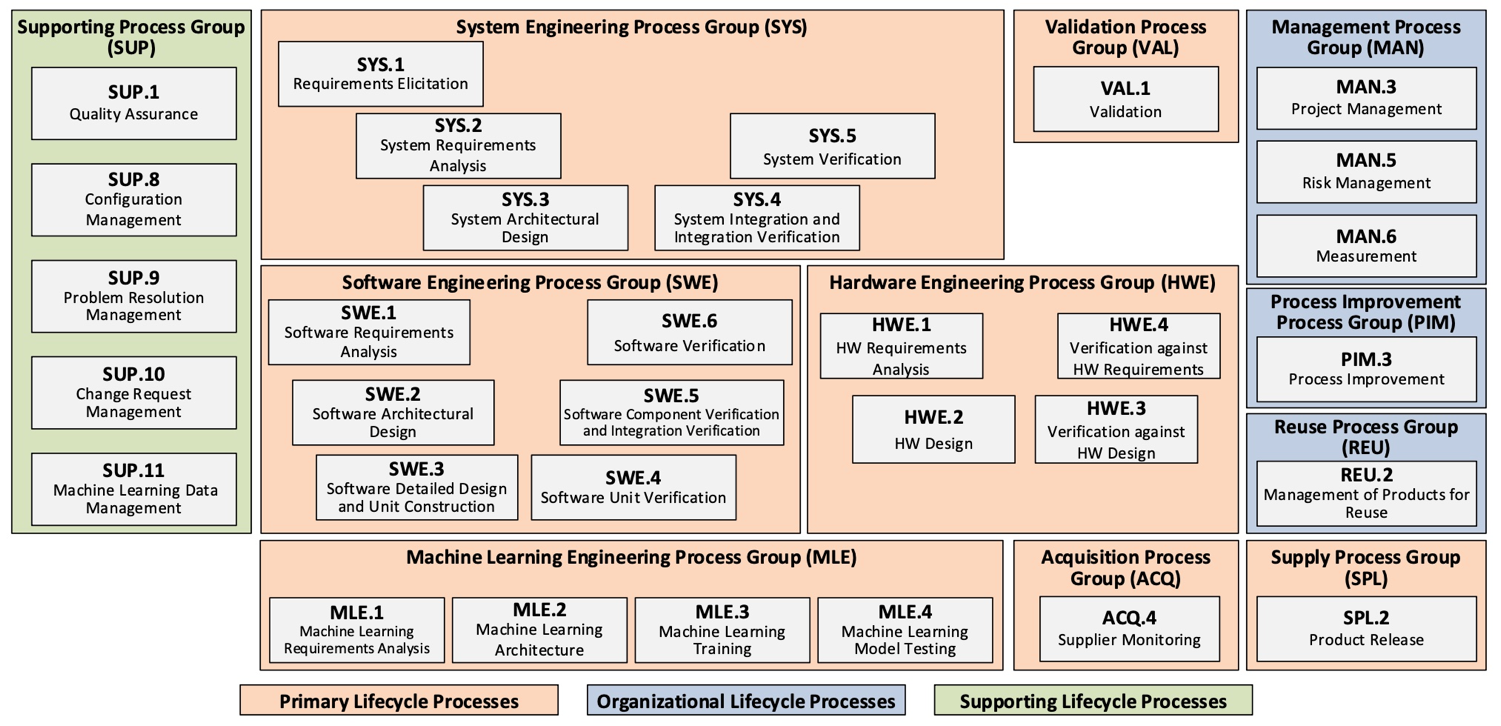

Figure 2 — Automotive SPICE process reference model – Overview 16

Figure 3 — Automotive SPICE process reference model – Overview4 16

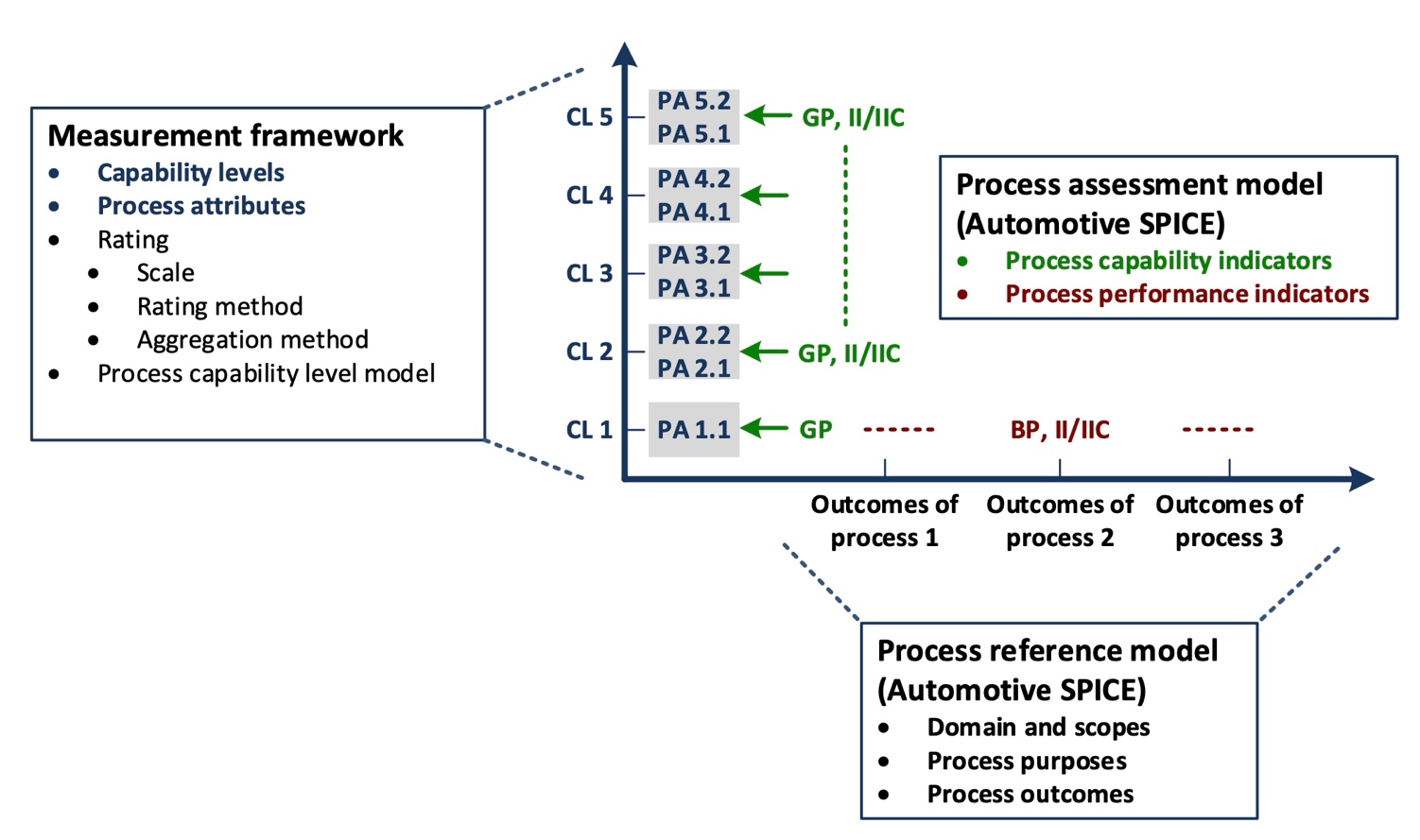

Figure 4 — Relationship between assessment indicators and process capability 29

Figure 5 — Possible levels of abstraction for the term “process” 31

Figure 6 — Performing a process assessment for determining process capability 32

List of Tables

Table 2 — Abbreviation List

Table 3 — Primary life cycle processes – ACQ process group

Table 4 — Primary life cycle processes – SPL process group

Table 5 — Primary life cycle processes – SYS process group

Table 6 — Primary life cycle processes – VAL process group

Table 7 — Primary life cycle processes – SWE process group

Table 8 — Primary life cycle processes – MLE process group

Table 9 — Primary life cycle processes – HWE process group

Table 10 — Supporting life cycle processes – SUP process group

Table 11 — Organizational life cycle processes – MAN process group

Table 12 — Organizational life cycle processes – PIM process group

Table 13 — Organizational life cycle processes – REU process group

Table 14 — Process capability levels

Table 15 — Process attributes

Table 17 — Rating scale percentage values

Table 18 — Refinement of rating scale

Table 19 — Refined rating scale percentage values

Table 20 — Capability levels and corresponding process attribute ratings

Table 21 — Template for the process description

Table B.1 — Structure of information item characteristics (IIC) table

1. Introduction

1.1. Scope

Process assessment is a disciplined evaluation of an organizational unit’s processes against a process assessment model.

The Automotive SPICE process assessment model (PAM) is intended for use when performing conformant assessments of the process capability on the development of embedded automotive systems. It was developed in accordance with the requirements of ISO/IEC 33004:2015.

Automotive SPICE has its own process reference model (PRM), which was developed based on the Automotive SPICE process reference model 4.5. It was further developed and tailored considering the specific needs of the automotive industry. If processes beyond the scope of Automotive SPICE are needed, appropriate processes from other process reference models such as ISO/IEC 12207 or ISO/IEC/IEEE 15288 may be added based on the business needs of the organization.

The PRM is incorporated in this document and is used in conjunction with the Automotive SPICE process assessment model when performing an assessment.

This Automotive SPICE process assessment model contains a set of indicators to be considered when interpreting the intent of the Automotive SPICE process reference model. These indicators may also be used when implementing a process improvement program.

1.2. Terminology

Automotive SPICE applies the following order of precedence for the use of terminology:

ISO/IEC 33001 for assessment related terminology

ISO/IEC/IEEE 24765, ISO/SAE 21434 and ISO/IEC/IEEE 29119 terminology (as contained in Annex C)

Terms introduced by Automotive SPICE (as contained in Annex C)

PMBOK® Guide – Fourth Edition

PAS 1883:2020

| Term | Origin | Description |
|---|---|---|
| Activity | Automotive SPICE V4.0 | Execution of a task by a stakeholder or an involved party. |
| Application parameter | Automotive SPICE V4.0 | An application parameter is a software variable containing data that can be changed at the system or software levels; they influence the system’s or software behavior and properties. The notion of application parameter is expressed in two ways:<br>Application parameters are not requirements. They are a technical implementation solution for configurability-oriented requirements. |
| Approval | Automotive SPICE V4.0 | Written statement that a deliverable is fit for its intended use, and compliant with defined criteria. |
| Baseline | Automotive SPICE V4.0 | A defined and coherent set of read-only information, serving as input information for the affected parties. |
| Deliverable | PMBOK® Guide – Fourth Edition | Any unique and verifiable product, result, or capability to perform a service that must be produced to complete a process, phase, or project. Often used more narrowly in reference to an external deliverable, which is a deliverable that is subject to approval by the project sponsor or customer. |
| Functional requirement | ISO/IEC/IEEE 24765 | A statement that identifies what a product or process must accomplish to produce required behavior and/or results. |
| Hardware | intacs® working group HW PAM | Assembled and interconnected electrical or electronic hardware components or parts which perform analog or digital functions or operations. |
| Hardware component | intacs® working group HW PAM | Logical (e.g., functional block) or physical group of hardware parts realizing a functionality, which |
| Hardware element | intacs® working group HW PAM | Generic term; can represent a hardware component, a hardware part, a hardware interface, or the hardware. |
| Hardware part | Automotive SPICE V4.0 | Fundamental HW element the purpose and functionality of which cannot be further subdivided or separated. |
| Hyperparameter | Automotive SPICE V4.0 | In machine learning, a hyperparameter is a parameter whose value is used to control the training of the ML model. Its value must be set between training iterations. Examples: learning rate, loss function, model depth, regularization constants. |
| Information need | Automotive SPICE V4.0 | The need for characterizing process or product related effectiveness and efficiency (used by MAN.6 and PA 4.1). |
| Machine Learning (ML) | Automotive SPICE V4.0 | In Automotive SPICE, Machine Learning (ML) describes the ability of software to learn from specific training data and to apply this knowledge to other similar tasks. |
| Measure | Automotive SPICE V4.0 | An activity to achieve a certain intent. |
| Measurement | Oxford Dictionary | “The activity to find the size, quantity or degree of something”. |
| Metric | Automotive SPICE V4.0 | A quantitative or qualitative measurable indicator that matches defined information needs. |
| Operational Design Domain | PAS 1883:2020 | Operational Design Domain (ODD) is the set of operating conditions under which a given overall system or feature thereof is specifically designed to function. This includes, but is not limited to, environmental, geographical, and time-of-day restrictions, and/or the requisite presence or absence of certain traffic or roadway characteristics. |
| Project | ISO/IEC/IEEE 24765 | Endeavor with defined start and finish dates undertaken to create a product or service in accordance with specified resources and requirements. |
| Release | Automotive SPICE V4.0 | A physical product delivered to a customer, including a defined set of functionalities and properties. |
| Regression verification | Automotive SPICE V4.0 | Selective re-verification of elements to verify that modifications have not caused unintended effects. |
| Risk | ISO/IEC/IEEE 24765 | The combination of the probability of occurrence and the consequences of a given future undesirable event. |
| Software component | Automotive SPICE V4.0 | Software component in design and implementation-oriented processes: The software architecture decomposes the software into software components across appropriate hierarchical levels down to the lowest-level software components in a conceptual model.<br>Software component in verification-oriented processes: The implementation of a SW component under verification is represented e.g., as source code, object files, library file, executable, or executable model. |
| Software element | Automotive SPICE V4.0 | Refers to software component or software unit. |
| Software unit | Automotive SPICE V4.0 | Software unit in design and implementation-oriented processes: As a result of the decomposition of a software component, the software is decomposed into software units which are a representation of a software element, which is decided not to be further subdivided and that is a part of a software component at the lowest level, in a conceptual model.<br>Software unit in verification-oriented processes: An implemented SW unit under verification is represented e.g., as source code files, or an object file. |
| Stakeholder requirements | Automotive SPICE V4.0 | Any type of requirement for the stakeholders in the given context, e.g., customer requirement, supplier internal requirements (product-specific, platform etc.), legal requirements, regulatory requirements, statutory requirements, industry sector requirements, international standards, codes of practice etc. … |
| System Element | Automotive SPICE V4.0 | System elements can be: |
| Task | Automotive SPICE V4.0 | A definition, but not the execution, of a coherent set of atomic actions. |
| Validation measure | Automotive SPICE V4.0 | Validation measure can be: |
| Verification | Automotive SPICE V4.0 | Verification is confirmation through the provision of objective evidence that an element fulfils the specified requirements. |
| Verification measure | Automotive SPICE V4.0 | Verification measure can be:<br>Note that in particular domains certain verification measures may not be applicable, e.g., software units generally cannot be verified by means of calculations or analyses. |

1.3. Abbreviations

| Abbreviation | Meaning |
|---|---|
| BP | Base Practice |
| CAN | Controller Area Network |
| CASE | Computer-Aided Software Engineering |
| CCB | Change Control Board |
| CPU | Central Processing Unit |
| ECU | Electronic Control Unit |
| EEPROM | Electrically Erasable Programmable Read Only Memory |
| EOL | End-of-Line |
| FMEA | Failure Mode and Effect Analysis |
| FTA | Fault Tree Analysis |
| GP | Generic Practice |
| GR | Generic Resource |
| IEC | International Electrotechnical Commission |
| IEEE | Institute of Electrical and Electronics Engineers |
| I/O | Input / Output |
| ISO | International Organization for Standardization |
| LIN | Local Interconnect Network |
| MISRA | Motor Industry Software Reliability Association |
| MOST | Media Oriented Systems Transport |
| ODD | Operational Design Domain |
| PA | Process Attribute |
| PAM | Process Assessment Model |
| PRM | Process Reference Model |
| PWM | Pulse Width Modulation |
| RAM | Random Access Memory |
| ROM | Read Only Memory |
| SPICE | Systems Process Improvement and Capability dEtermination |
| SUG | SPICE User Group |
| USB | Universal Serial Bus |
| WP | Work Product |
| WPC | Work Product Characteristic |

2. Statement of compliance

The Automotive SPICE process reference model and process assessment model are conformant with ISO/IEC 33004:2015 and can be used as the basis for conducting an assessment of process capability.

An ISO/IEC 33003:2015 compliant Measurement Framework is defined in section 5.

A statement of compliance of the process assessment model and process reference model with the requirements of ISO/IEC 33004:2015 is provided in Annex A.

A statement of compliance of the measurement framework with the requirements of ISO/IEC 33003:2015 is provided in Annex A.

3. Process capability determination

The concept of process capability determination by using a process assessment model is based on a two-dimensional framework. The first dimension is provided by processes defined in a process reference model (process dimension). The second dimension consists of capability levels that are further subdivided into process attributes (capability dimension). The process attributes provide the measurable characteristics of process capability.

The process assessment model selects processes from a process reference model and supplements them with indicators. These indicators support the collection of objective evidence, which enables an assessor to assign ratings for processes according to the capability dimension.

The relationship is shown in Figure 1.

Figure 1 — Process assessment model relationship

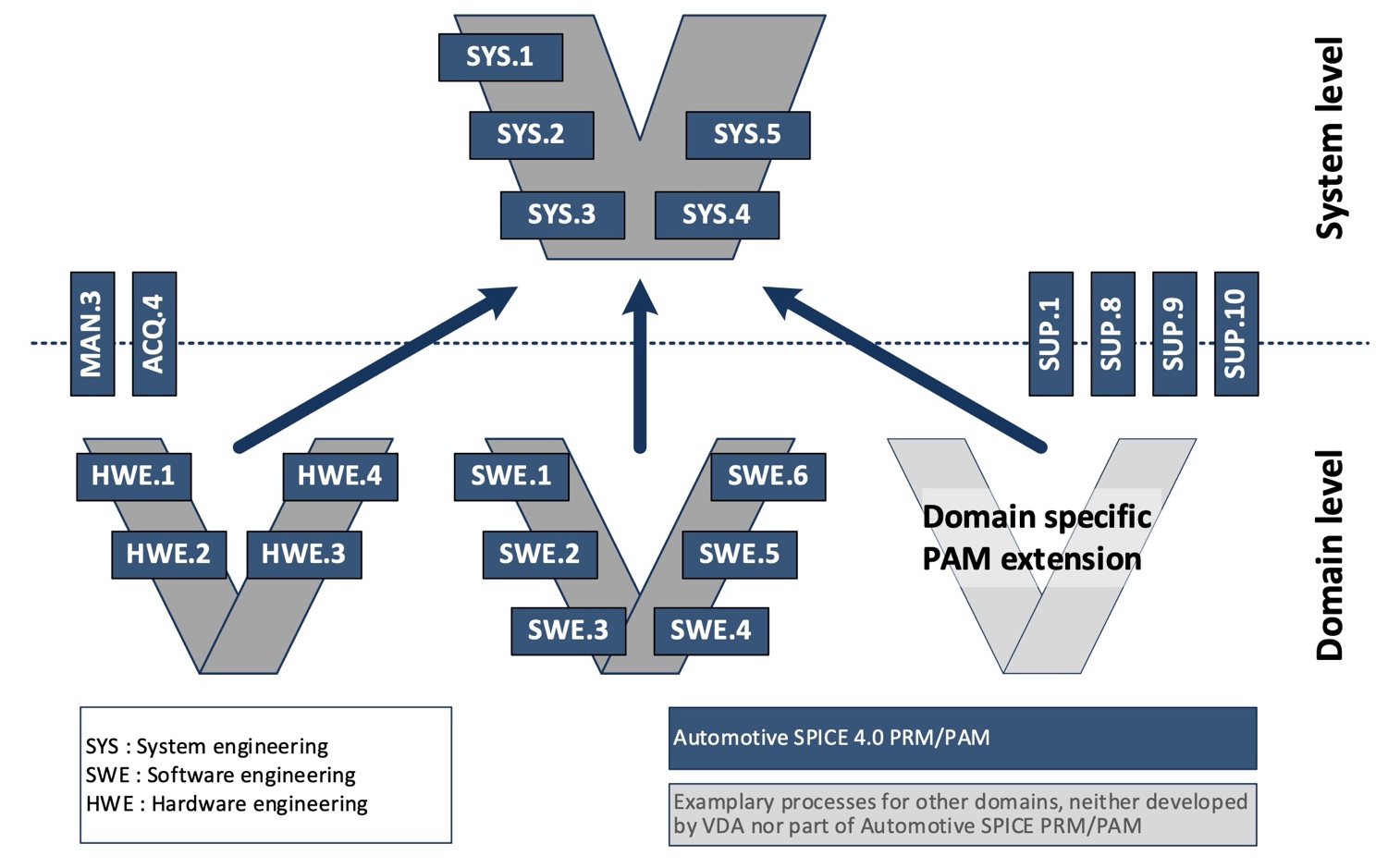

3.1. Process reference model

Processes are collected into process groups according to the domain of activities they address.

These process groups are organized into 3 process categories: Primary life cycle processes, Organizational life cycle processes and Supporting life cycle processes.

For each process a purpose statement is formulated that contains the unique functional objectives of the process when performed in a particular environment. For each purpose statement a list of specific outcomes is associated, as a list of expected positive results of the process performance.

For the process dimension, the Automotive SPICE process reference model provides the set of processes shown in Figure 2.

Figure 2 — Automotive SPICE process reference model – Overview

3.1.1. Primary life cycle processes category

The primary life cycle processes category consists of processes that may apply for an acquirer of products from a supplier or may apply for product development when responding to stakeholder needs and delivering products including the engineering processes needed for specification, design, implementation, integration and verification.

The primary life cycle processes category consists of the following groups:

the Acquisition process group

the Supply process group

the System engineering process group

the Validation process group

the Software engineering process group

the Machine learning engineering process group

the Hardware engineering process group

The Acquisition process group (ACQ) consists of one process that is performed by the customer, or by the supplier when acting as a customer for its own suppliers, in order to acquire a product and/or service.

| Process ID | Process name |
|---|---|
| ACQ.4 | Supplier Monitoring |

The Supply process group (SPL) consists of one process performed by the supplier in order to supply a product and/or a service.

SPL.2 |

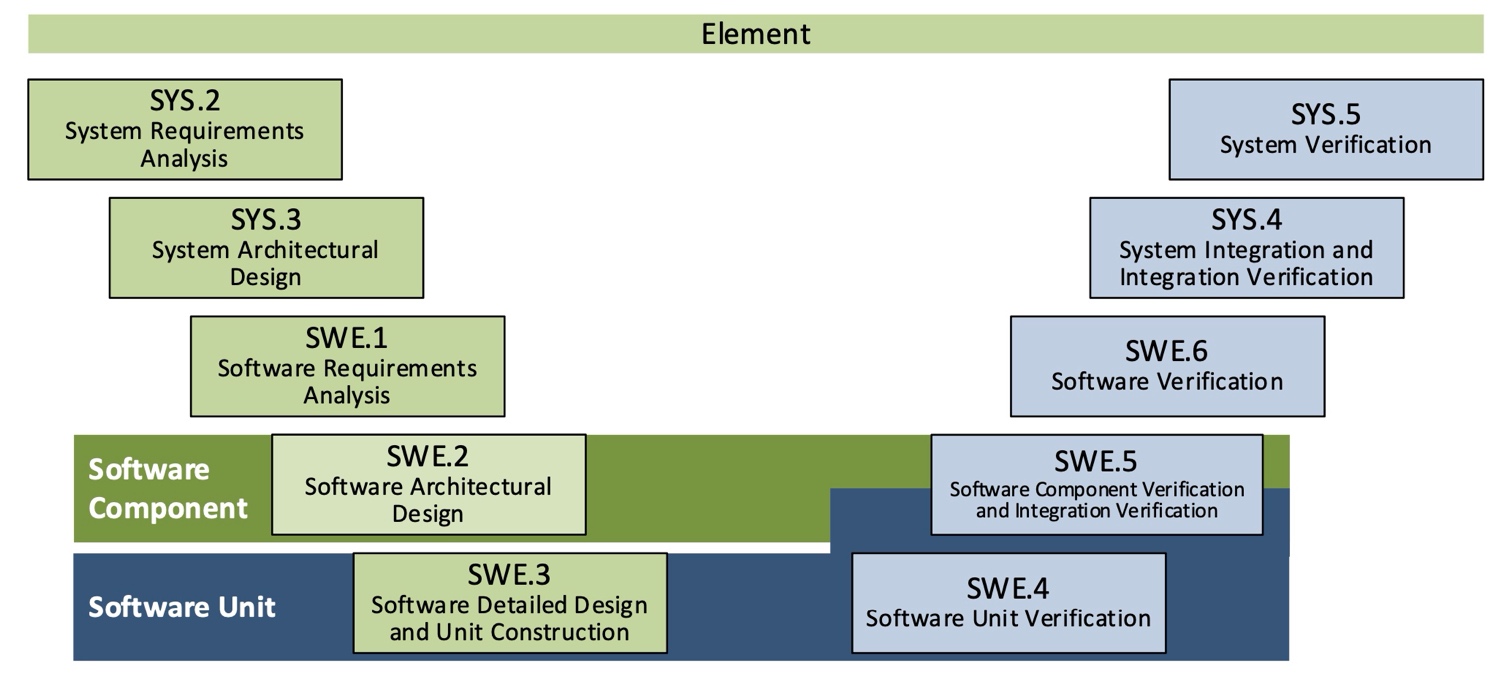

Product Release |

The System Engineering process group (SYS) consists of processes addressing the elicitation and management of customer and internal requirements, the definition of the system architecture and the integration and verification on the system level.

| Process ID | Process name |
|---|---|
| SYS.1 | Requirements Elicitation |
| SYS.2 | System Requirements Analysis |
| SYS.3 | System Architectural Design |
| SYS.4 | System Integration and Integration Verification |
| SYS.5 | System Verification |

The Validation process group (VAL) consists of one process that is performed to provide evidence that the product to be delivered satisfies the expectations for its intended use.

| Process ID | Process name |
|---|---|
| VAL.1 | Validation |

The Software Engineering process group (SWE) consists of processes addressing the management of software requirements derived from the system requirements, the development of the corresponding software architecture and design as well as the implementation, integration and verification of the software.

| Process ID | Process name |
|---|---|
| SWE.1 | Software Requirements Analysis |
| SWE.2 | Software Architectural Design |
| SWE.3 | Software Detailed Design and Unit Construction |
| SWE.4 | Software Unit Verification |
| SWE.5 | Software Component Verification and Integration Verification |
| SWE.6 | Software Verification |

The Machine Learning Engineering process group (MLE) consists of processes addressing the management of ML requirements derived from the software requirements, the development of the corresponding ML architecture, the training of the ML model, and the testing of the ML model against the ML requirements.

| Process ID | Process name |
|---|---|
| MLE.1 | Machine Learning Requirements Analysis |
| MLE.2 | Machine Learning Architecture |
| MLE.3 | Machine Learning Training |
| MLE.4 | Machine Learning Model Testing |

The Hardware Engineering process group (HWE) consists of processes addressing the management of hardware requirements derived from the system requirements, the development of the corresponding hardware architecture and design as well as the verification of the hardware.

| Process ID | Process name |
|---|---|
| HWE.1 | Hardware Requirements Analysis |
| HWE.2 | Hardware Design |
| HWE.3 | Verification against Hardware Design |
| HWE.4 | Verification against Hardware Requirements |

3.1.2. Supporting life cycle processes category

The supporting life cycle processes category consists of processes that may be employed by any of the other processes at various points in the life cycle.

| Process ID | Process name |
|---|---|
| SUP.1 | Quality Assurance |
| SUP.8 | Configuration Management |
| SUP.9 | Problem Resolution Management |
| SUP.10 | Change Request Management |
| SUP.11 | Machine Learning Data Management |

3.1.3. Organizational life cycle processes category

The organizational life cycle processes category consists of processes that develop process, product, and resource assets which, when used by projects in the organization, may help the organization achieve its business goals.

The organizational life cycle processes category consists of the following groups:

the Management process group;

the Process Improvement process group;

the Reuse process group.

The Management process group (MAN) consists of processes that may be used by anyone who manages any type of project or process within the life cycle.

| Process ID | Process name |
|---|---|
| MAN.3 | Project Management |
| MAN.5 | Risk Management |
| MAN.6 | Measurement |

The Process Improvement process group (PIM) covers one process that contains practices to improve the processes performed in the organizational unit.

| Process ID | Process name |
|---|---|
| PIM.3 | Process Improvement |

The Reuse process group (REU) covers one process to systematically exploit reuse opportunities in the organization’s product portfolio.

| Process ID | Process name |
|---|---|
| REU.2 | Management of Products for Reuse |
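
The category, group, and process hierarchy of section 3.1 can be captured in a small lookup structure, for example when building an assessment tool. The following is a minimal sketch, not part of Automotive SPICE itself: the names `PRM`, `category_of`, and `all_processes` are illustrative assumptions, and only the process IDs listed in section 3.1 are included.

```python
# Illustrative sketch: process IDs grouped by category and process group,
# transcribed from section 3.1. The structure and names are assumptions.
PRM = {
    "Primary life cycle processes": {
        "ACQ": ["ACQ.4"],
        "SPL": ["SPL.2"],
        "SYS": ["SYS.1", "SYS.2", "SYS.3", "SYS.4", "SYS.5"],
        "VAL": ["VAL.1"],
        "SWE": ["SWE.1", "SWE.2", "SWE.3", "SWE.4", "SWE.5", "SWE.6"],
        "MLE": ["MLE.1", "MLE.2", "MLE.3", "MLE.4"],
        "HWE": ["HWE.1", "HWE.2", "HWE.3", "HWE.4"],
    },
    "Supporting life cycle processes": {
        "SUP": ["SUP.1", "SUP.8", "SUP.9", "SUP.10", "SUP.11"],
    },
    "Organizational life cycle processes": {
        "MAN": ["MAN.3", "MAN.5", "MAN.6"],
        "PIM": ["PIM.3"],
        "REU": ["REU.2"],
    },
}


def category_of(process_id: str) -> str:
    """Return the process category that contains the given process ID."""
    group = process_id.split(".")[0]
    for category, groups in PRM.items():
        if process_id in groups.get(group, []):
            return category
    raise KeyError(f"unknown process: {process_id}")


def all_processes() -> list[str]:
    """Return every process ID in the order listed in section 3.1."""
    return [p for groups in PRM.values() for procs in groups.values() for p in procs]
```

For instance, `category_of("SWE.3")` yields the primary life cycle category, and an ID that is not part of the model raises `KeyError`.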

3.2. Measurement framework

The measurement framework provides the necessary requirements and rules for the capability dimension. It defines a schema which enables an assessor to determine the Capability Level of a given process. These capability levels are defined as part of the measurement framework.

To enable the rating, the measurement framework provides process attributes defining a measurable property of process capability. Each process attribute is assigned to a specific capability level. The extent of achievement of a certain process attribute is represented by means of a rating based on a defined rating scale. The rules from which an assessor can derive a final capability level for a given process are represented by a process capability level model.

Automotive SPICE defines its own measurement framework.

Note: The Automotive SPICE measurement framework is an adaptation of ISO/IEC 33020:2019. Text incorporated from ISO/IEC 33020 within this chapter is written in italic font and marked with a left side bar.

3.2.1. Process capability levels and process attributes

The process capability levels and associated process attributes are described in detail in chapter 5.

Process attributes are features of a process that can be evaluated on a scale of achievement, providing a measurement of the capability of the process. They are applicable to all processes.

A capability level is characterized by one or more process attributes whose implementation results in a significant improvement in the capability to perform a process. Each attribute addresses a specific aspect of the capability level. The levels constitute a rational way of progressing through improvement of the capability of any process.

There are six capability levels as listed in Table 14, incorporating nine process attributes:

| Capability level | Description |
|---|---|
| Level 0: Incomplete process | The process is not implemented or fails to achieve its process purpose. |
| Level 1: Performed process | The implemented process achieves its process purpose. |
| Level 2: Managed process | The previously described performed process is now implemented in a managed fashion (planned, monitored and adjusted) and its work products are appropriately established, controlled and maintained. |
| Level 3: Established process | The previously described managed process is now implemented using a defined process that is capable of achieving its process outcomes. |
| Level 4: Predictable process | The previously described established process now operates predictively within defined limits to achieve its process outcomes. Quantitative management needs are identified, measurement data are collected and analyzed to identify assignable causes of variation. Corrective action is taken to address assignable causes of variation. |
| Level 5: Innovating process | The previously described predictable process is now continually improved to respond to organizational change. |

Within this process assessment model, the determination of capability is based upon the nine process attributes (PA) as listed in Table 15 — Process attributes.

| Attribute ID | Process Attributes |
|---|---|
| Level 0: Incomplete process | |
| Level 1: Performed process | |
| PA 1.1 | Process performance process attribute |
| Level 2: Managed process | |
| PA 2.1 | Performance management process attribute |
| PA 2.2 | Work product management process attribute |
| Level 3: Established process | |
| PA 3.1 | Process definition process attribute |
| PA 3.2 | Process deployment process attribute |
| Level 4: Predictable process | |
| PA 4.1 | Quantitative analysis process attribute |
| PA 4.2 | Quantitative control process attribute |
| Level 5: Innovating process | |
| PA 5.1 | Process innovation process attribute |
| PA 5.2 | Process innovation implementation process attribute |
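
The assignment of process attributes to capability levels can be written down as a simple mapping. The sketch below is illustrative only: `PROCESS_ATTRIBUTES` and `attributes_up_to` are assumed names, and the helper merely collects the attribute IDs assigned to all levels up to and including a given level.

```python
# Illustrative sketch: process attribute IDs per capability level,
# transcribed from the table above. Names are assumptions.
PROCESS_ATTRIBUTES = {
    0: [],
    1: ["PA 1.1"],
    2: ["PA 2.1", "PA 2.2"],
    3: ["PA 3.1", "PA 3.2"],
    4: ["PA 4.1", "PA 4.2"],
    5: ["PA 5.1", "PA 5.2"],
}


def attributes_up_to(level: int) -> list[str]:
    """Return the attribute IDs of all levels from 0 up to `level`, in order."""
    if level not in PROCESS_ATTRIBUTES:
        raise ValueError(f"capability level must be 0..5, got {level}")
    return [pa for lvl in range(level + 1) for pa in PROCESS_ATTRIBUTES[lvl]]
```

Called with level 5, the helper returns all nine process attributes; level 0 has none.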

3.2.2. Process attribute rating

To support the rating of process attributes, the measurement framework provides a defined rating scale with an option for refinement, different rating methods and different aggregation methods depending on the class of the assessment (e.g., required for organizational maturity assessments).

3.2.2.1. Rating scale

Within this process measurement framework, a process attribute is a measurable property of process capability. A process attribute rating is a judgement of the degree of achievement of the process attribute for the assessed process.

The rating scale is shown in Table 16 — Rating scale.

Note: The rating scale is identical to that of ISO/IEC 33020:2019.

| Rating | Name | Description |
|---|---|---|
| N | Not achieved | There is little or no evidence of achievement of the defined process attribute in the assessed process. |
| P | Partially achieved | There is some evidence of an approach to, and some achievement of, the defined process attribute in the assessed process. Some aspects of achievement of the process attribute may be unpredictable. |
| L | Largely achieved | There is evidence of a systematic approach to, and significant achievement of, the defined process attribute in the assessed process. Some weaknesses related to this process attribute may exist in the assessed process. |
| F | Fully achieved | There is evidence of a complete and systematic approach to, and full achievement of, the defined process attribute in the assessed process. No significant weaknesses related to this process attribute exist in the assessed process. |

The ordinal scale defined above shall be understood in terms of percentage achievement of a process attribute. The corresponding percentages shall be:

| Rating | Name | Percentage achievement |
|---|---|---|
| N | Not achieved | 0 to ≤ 15% achievement |
| P | Partially achieved | > 15% to ≤ 50% achievement |
| L | Largely achieved | > 50% to ≤ 85% achievement |
| F | Fully achieved | > 85% to ≤ 100% achievement |
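
The percentage bands above translate directly into a lookup. The following is a minimal sketch under the assumption that achievement is available as a number between 0 and 100; the function name `rate` is hypothetical, and in practice the rating remains an assessor judgement rather than a computed value.

```python
def rate(percent: float) -> str:
    """Map a percentage achievement (0 to 100) to the ordinal rating N, P, L or F."""
    if not 0 <= percent <= 100:
        raise ValueError("achievement must be between 0 and 100 percent")
    if percent <= 15:
        return "N"  # Not achieved: 0 to <= 15%
    if percent <= 50:
        return "P"  # Partially achieved: > 15% to <= 50%
    if percent <= 85:
        return "L"  # Largely achieved: > 50% to <= 85%
    return "F"      # Fully achieved: > 85% to <= 100%
```

Note that each upper bound is inclusive, so exactly 15% is still rated N and exactly 85% is still rated L.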

The ordinal scale may be further refined for the measures P and L as defined below.

| Rating | Name | Description |
|---|---|---|
| P- | Partially achieved | There is some evidence of an approach to, and some achievement of, the defined process attribute in the assessed process. Many aspects of achievement of the process attribute may be unpredictable. |
| P+ | Partially achieved | There is some evidence of an approach to, and some achievement of, the defined process attribute in the assessed process. Some aspects of achievement of the process attribute may be unpredictable. |
| L- | Largely achieved | There is evidence of a systematic approach to, and significant achievement of, the defined process attribute in the assessed process. Many weaknesses related to this process attribute may exist in the assessed process. |
| L+ | Largely achieved | There is evidence of a systematic approach to, and significant achievement of, the defined process attribute in the assessed process. Some weaknesses related to this process attribute may exist in the assessed process. |

The corresponding percentages shall be:

| Rating | Meaning | Percentage achievement |
|---|---|---|
| P- | Partially achieved - | > 15% to ≤ 32.5% achievement |
| P+ | Partially achieved + | > 32.5% to ≤ 50% achievement |
| L- | Largely achieved - | > 50% to ≤ 67.5% achievement |
| L+ | Largely achieved + | > 67.5% to ≤ 85% achievement |
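
The refined scale can be sketched in the same way. In this illustrative Python snippet the name `rate_refined` is hypothetical; the bands for N and F are carried over unchanged from the unrefined scale, since only P and L are refined.

```python
# Inclusive upper bounds of each band, in ascending order.
REFINED_BANDS = [
    (15.0, "N"),
    (32.5, "P-"),
    (50.0, "P+"),
    (67.5, "L-"),
    (85.0, "L+"),
    (100.0, "F"),
]


def rate_refined(achievement_percent: float) -> str:
    """Map a percentage achievement (0..100) to N, P-, P+, L-, L+ or F."""
    if not 0 <= achievement_percent <= 100:
        # Assumption: values outside the scale are treated as input errors.
        raise ValueError("achievement must lie between 0 and 100 percent")
    for upper_bound, rating in REFINED_BANDS:
        if achievement_percent <= upper_bound:
            return rating
    raise AssertionError("unreachable: 100 is the last upper bound")
```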

3.2.3. Rating and aggregation method

Rating and aggregation methods are taken from ISO/IEC 33020:2019, which provides the following definitions:

A process outcome is the observable result of successful achievement of the process purpose.

A process attribute outcome is the observable result of achievement of a specified process attribute.

Process outcomes and process attribute outcomes may be characterised as an intermediate step to providing a process attribute rating.

When performing rating, the rating method employed shall be specified relevant to the class of assessment. The following rating methods are defined.

The use of rating methods may vary according to the class, scope and context of an assessment. The lead assessor shall decide which (if any) rating method to use. The selected rating method(s) shall be specified in the assessment input and referenced in the assessment report.

ISO/IEC 33020:2019 provides the following three rating methods:

Rating method R1

The approach to process attribute rating shall satisfy the following conditions:

Each process outcome of each process within the scope of the assessment shall be characterized for each process instance, based on validated data;

Each process attribute outcome of each process attribute for each process within the scope of the assessment shall be characterized for each process instance, based on validated data;

Process outcome characterizations for all assessed process instances shall be aggregated to provide a process performance attribute achievement rating;

Process attribute outcome characterizations for all assessed process instances shall be aggregated to provide a process attribute achievement rating.

Rating method R2

The approach to process attribute rating shall satisfy the following conditions:

Each process attribute for each process within the scope of the assessment shall be characterized for each process instance, based on validated data;

Process attribute characterizations for all assessed process instances shall be aggregated to provide a process attribute achievement rating.

Rating method R3

Process attribute rating across assessed process instances shall be made without aggregation.

In principle the three rating methods defined in ISO/IEC 33020:2019 depend on

whether the rating is made only at process attribute level (rating methods R2 and R3) or, in more detail, at both process attribute and process attribute outcome level (rating method R1); and

the type of aggregation of ratings across the assessed process instances for each process.

If a rating is performed for both process attributes and process attribute outcomes (rating method R1), the result will be a process performance attribute outcome rating on level 1 and a process attribute achievement rating on higher levels.

Depending on the class, scope and context of the assessment, an aggregation within one process (one-dimensional, vertical aggregation), across multiple process instances (one-dimensional, horizontal aggregation) or both (two-dimensional, matrix aggregation) is performed.

ISO/IEC 33020:2019 provides the following examples:

When performing an assessment, ratings may be summarized across one or two dimensions.

For example, when rating a

process attribute for a given process, one may aggregate ratings of the associated process (attribute) outcomes – such an aggregation will be performed as a vertical aggregation (one dimension).

process (attribute) outcome for a given process attribute across multiple process instances, one may aggregate the ratings of the associated process instances for the given process (attribute) outcome – such an aggregation will be performed as a horizontal aggregation (one dimension).

process attribute for a given process, one may aggregate the ratings of all the process (attribute) outcomes for all the process instances – such an aggregation will be performed as a matrix aggregation across the full scope of ratings (two dimensions).

The standard defines different methods for aggregation. Further information can be taken from ISO/IEC 33020:2019.
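
The three aggregation dimensions can be illustrated with a short Python sketch. The aggregation rule used here (mapping N, P, L, F to the positions 0 to 3, taking the arithmetic mean and rounding half up) is an assumption chosen for illustration; the methods actually permitted are those defined in ISO/IEC 33020:2019. The function name `aggregate` and the sample ratings are hypothetical.

```python
import math
from statistics import mean

ORDINAL = ["N", "P", "L", "F"]  # positions 0..3 on the ordinal scale


def aggregate(ratings):
    """Aggregate ordinal ratings via the mean of their scale positions (assumed rule)."""
    ratings = list(ratings)
    if not ratings:
        raise ValueError("at least one rating is required")
    average = mean(ORDINAL.index(r) for r in ratings)
    return ORDINAL[math.floor(average + 0.5)]  # round half up


# Rows: process (attribute) outcomes; columns: process instances (made-up data).
matrix = [
    ["F", "L", "F"],
    ["L", "L", "P"],
]

# Vertical: per process instance, across its outcomes.
vertical = [aggregate(column) for column in zip(*matrix)]
# Horizontal: per outcome, across the process instances.
horizontal = [aggregate(row) for row in matrix]
# Matrix: all outcomes of all process instances at once.
overall = aggregate(r for row in matrix for r in row)
```

With the sample data, `vertical` is `["F", "L", "L"]`, `horizontal` is `["F", "L"]` and `overall` is `"L"`. A different aggregation rule (for example, the median) may give different results for the same ratings.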

3.2.4. Process capability level model

The process capability level achieved by a process shall be derived from the process attribute ratings for that process according to the process capability level model defined in Table 20 — Capability levels.

The process capability level model defines the rules for how the achievement of each level depends on the ratings of the process attributes for the assessed level and all lower levels.

As a general rule, the achievement of a given level requires that the corresponding process attributes are largely or fully achieved and that all process attributes of the lower levels are fully achieved.

| Scale | Process attribute | Rating |
|---|---|---|
| Level 1 | PA 1.1: Process performance process attribute | Largely or fully |
| Level 2 | PA 1.1: Process performance process attribute | Fully |
| | PA 2.1: Process performance management process attribute | Largely or fully |
| | PA 2.2: Work product management process attribute | Largely or fully |
| Level 3 | PA 1.1: Process performance process attribute | Fully |
| | PA 2.1: Process performance management process attribute | Fully |
| | PA 2.2: Work product management process attribute | Fully |
| | PA 3.1: Process definition process attribute | Largely or fully |
| | PA 3.2: Process deployment process attribute | Largely or fully |
| Level 4 | PA 1.1: Process performance process attribute | Fully |
| | PA 2.1: Process performance management process attribute | Fully |
| | PA 2.2: Work product management process attribute | Fully |
| | PA 3.1: Process definition process attribute | Fully |
| | PA 3.2: Process deployment process attribute | Fully |
| | PA 4.1: Quantitative analysis process attribute | Largely or fully |
| | PA 4.2: Quantitative control process attribute | Largely or fully |
| Level 5 | PA 1.1: Process performance process attribute | Fully |
| | PA 2.1: Process performance management process attribute | Fully |
| | PA 2.2: Work product management process attribute | Fully |
| | PA 3.1: Process definition process attribute | Fully |
| | PA 3.2: Process deployment process attribute | Fully |
| | PA 4.1: Quantitative analysis process attribute | Fully |
| | PA 4.2: Quantitative control process attribute | Fully |
| | PA 5.1: Process innovation process attribute | Largely or fully |
| | PA 5.2: Process innovation implementation process attribute | Largely or fully |
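
The derivation of a capability level from process attribute ratings can be sketched as follows. This Python example is a minimal illustration of the rule stated above, not a normative algorithm. The function name `capability_level`, the treatment of a missing rating as N, and the return value 0 when level 1 is not reached are assumptions.

```python
from typing import Mapping

# Process attributes introduced at each capability level.
LEVEL_ATTRIBUTES = {
    1: ["PA 1.1"],
    2: ["PA 2.1", "PA 2.2"],
    3: ["PA 3.1", "PA 3.2"],
    4: ["PA 4.1", "PA 4.2"],
    5: ["PA 5.1", "PA 5.2"],
}


def capability_level(ratings: Mapping[str, str]) -> int:
    """Return the highest level whose rating conditions are met (0 if none)."""
    achieved = 0
    for level in range(1, 6):
        # Attributes of the level itself must be largely or fully achieved.
        own_ok = all(
            ratings.get(pa, "N") in ("L", "F") for pa in LEVEL_ATTRIBUTES[level]
        )
        # Attributes of all lower levels must be fully achieved.
        lower_ok = all(
            ratings.get(pa, "N") == "F"
            for lower in range(1, level)
            for pa in LEVEL_ATTRIBUTES[lower]
        )
        if not (own_ok and lower_ok):
            break
        achieved = level
    return achieved
```

For example, `capability_level({"PA 1.1": "L", "PA 2.1": "F", "PA 2.2": "F"})` yields 1, because level 2 requires PA 1.1 to be fully achieved.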

3.3. Process assessment model

The process assessment model offers indicators in order to identify whether the process outcomes and the process attribute outcomes (achievements) are present or absent in the instantiated processes of projects and organizational units. These indicators provide guidance for assessors in accumulating the necessary objective evidence to support judgments of capability. They are not intended to be regarded as a mandatory set of checklists to be followed.

3.3.1. Assessment indicators

According to ISO/IEC 33004, a process assessment model needs to define a set of assessment indicators:

Assessment Indicators

A process assessment model shall be based on a set of assessment indicators that:

explicitly address the purpose and process outcomes, as defined in the selected process reference model, of each of the processes within the scope of the process assessment model;

demonstrate the achievement of the process attributes within the scope of the process assessment model;

demonstrate the achievement (where relevant) of the process quality levels within the scope of the process assessment model.

The assessment indicators generally fall into three types:

practices that support achievement of either the process purpose or the specific process attribute.

information items and their characteristics that demonstrate the respective achievements.

resources and infrastructure that support the respective achievements. [ISO/IEC 33004:2015, 6.3.1]

In this assessment model, only practices and information items are used.

Practices represent activity-oriented indicators, whereas information items represent result-oriented indicators. Both practices and information items are used for judging objective evidence to be collected and accumulated in the performance of an assessment.

As a first type of assessment indicator, practices are provided, which can be divided into two types:

1. Base practices (BP), applying to capability level 1

They provide an indication of the extent of achievement of the process outcomes. Base practices relate to one or more process outcomes and are therefore always process-specific and not generic.

2. Generic practices (GP), applying to capability levels 1 to 5

They provide an indication of the extent of process attribute achievement. Generic practices relate to one or more process attribute achievements, thus applying to any process.

As a second type of assessment indicator, information items (II) including their characteristics (IIC) are provided in Annex B.

These are meant to offer a good practice and state-of-the-art knowledge guide for the assessor. Therefore, information items including their characteristics are intended to be a quickly accessible information source during an assessment.

Information item characteristics shall not be interpreted as a required structure of the corresponding work products, which is defined by the project and organization, respectively.

Please refer to chapter 3.3.2 for understanding the difference between information items and work products.

ISO/IEC 33004:2015 requires the mapping of assessment indicators to process attributes as shown in Figure 4.

The capability of a process on level 1 is only characterized by the measure of the extent to which the process outcomes are achieved. According to ISO/IEC 33003:2015, a measurement framework requires each level to reveal a process attribute. Therefore, the only process performance attribute for capability level 1 (PA 1.1) has a single generic practice (GP 1.1.1) pointing as an editorial reference to the respective process performance indicators (see Figure 4 and chapter 4).

Figure 4 — Relationship between assessment indicators and process capability

The detailed mapping of base practices / indicators and generic practices / indicators to process outcomes and achievements is provided in the corresponding tables in chapters 4 and 5, respectively.

3.3.2. Understanding information items and work products

In order to judge the presence or absence of process outcomes and process attribute achievements, an assessment obtains objective evidence. All such evidence comes either from the examination of work products related to a specific output of the processes assessed, or from statements made by the performers and managers of the processes. Sources for such evidence are either the repository content of the assessed processes, or testimony provided by the performers and managers of the assessed processes.

As described in chapter 3.3.1, this process assessment model provides information items serving as indicators to guide the assessor when judging a process attribute achievement.

3.3.2.1. Information items versus work products

ISO/IEC 33001 provides the following definition of the term “information item”:

information item

separately identifiable body of information that is produced, stored, and delivered for human use

Note 1 to entry: An information item can be produced in several versions during a system, software, or service life cycle. Syn: information product.

[ISO/IEC 33001:2015, 3.1.4]

Note: Human use includes the information stored, managed and processed by a tool.

One common definition of the term “work product” is:

work product

artifact resulting from the execution of a process

[ISO/IEC/IEEE 24765:2017]

Both terms are used in different contexts in an assessment:

Information items define relevant pieces of information used by the assessors to judge the achievement of process attributes.

Work products are produced by the organization assessed when performing, managing, establishing, analyzing and innovating processes.

Information items (together with their characteristics) are provided as guidance for “what to look for” when examining the work products available in the assessed organization. The extent of implementation of an information item (in line with its defined characteristics) in a related work product serves as objective evidence supporting the assessment of a particular process. A documented process and assessor judgment are needed to ensure that the process context (application domain, business purpose, development methodology, size of the organization, etc.) is considered when using this information.

Information items shall therefore not be mistaken for the work product generated by the assessed organization itself. There is no 1:1 relationship between an information item and the work product taken as sample evidence by the assessor when assessing the achievement of a process outcome and process attribute achievements. An output generated by a process may comprise multiple information item characteristics and multiple outputs may also contain the same information item characteristics.

Information item characteristics should be considered as indicators when considering whether, given the context, a work product is contributing to the intended purpose of the process. Context-sensitivity means that assessor judgment is needed to ensure that the actual context (application domain, business purpose, development methodology, size of the organization, etc.) is considered when using the information items.
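
The many-to-many relationship between information items and work products described above can be pictured with a small data structure. The Python sketch below is purely illustrative: the work product names are invented, the information item IDs are used only as example keys, and `evidence_by_information_item` is a hypothetical helper name.

```python
from collections import defaultdict

# Hypothetical work products of an assessed project, each listing the
# information items for which it may provide evidence.
work_products = {
    "System requirements specification": ["17-00", "17-54"],
    "Traceability report": ["13-51"],
    "Review meeting minutes": ["13-52", "13-51"],
}


def evidence_by_information_item(products):
    """Invert the mapping: information item ID -> work products evidencing it."""
    index = defaultdict(list)
    for name, item_ids in products.items():
        for item_id in item_ids:
            index[item_id].append(name)
    return dict(index)


index = evidence_by_information_item(work_products)
```

Here one work product covers two information items, and information item 13-51 is evidenced by two different work products, so neither direction is a 1:1 mapping.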

3.3.2.2. Types of work products

A work product considered as evidence when rating a process attribute is not necessarily an output of the processes assessed but can also originate from other processes of the organization. Once such a work product is used in the performance of a process under assessment, it may be considered by the assessor as objective evidence.

In many cases, work products comprise documentation aspects, such as specifications, reports, records, architectural designs, software code etc.

Examples of work products not comprising any documentation aspects are software binaries, raw data, or physical electronic hardware.

3.3.3. Understanding the level of abstraction of a PAM

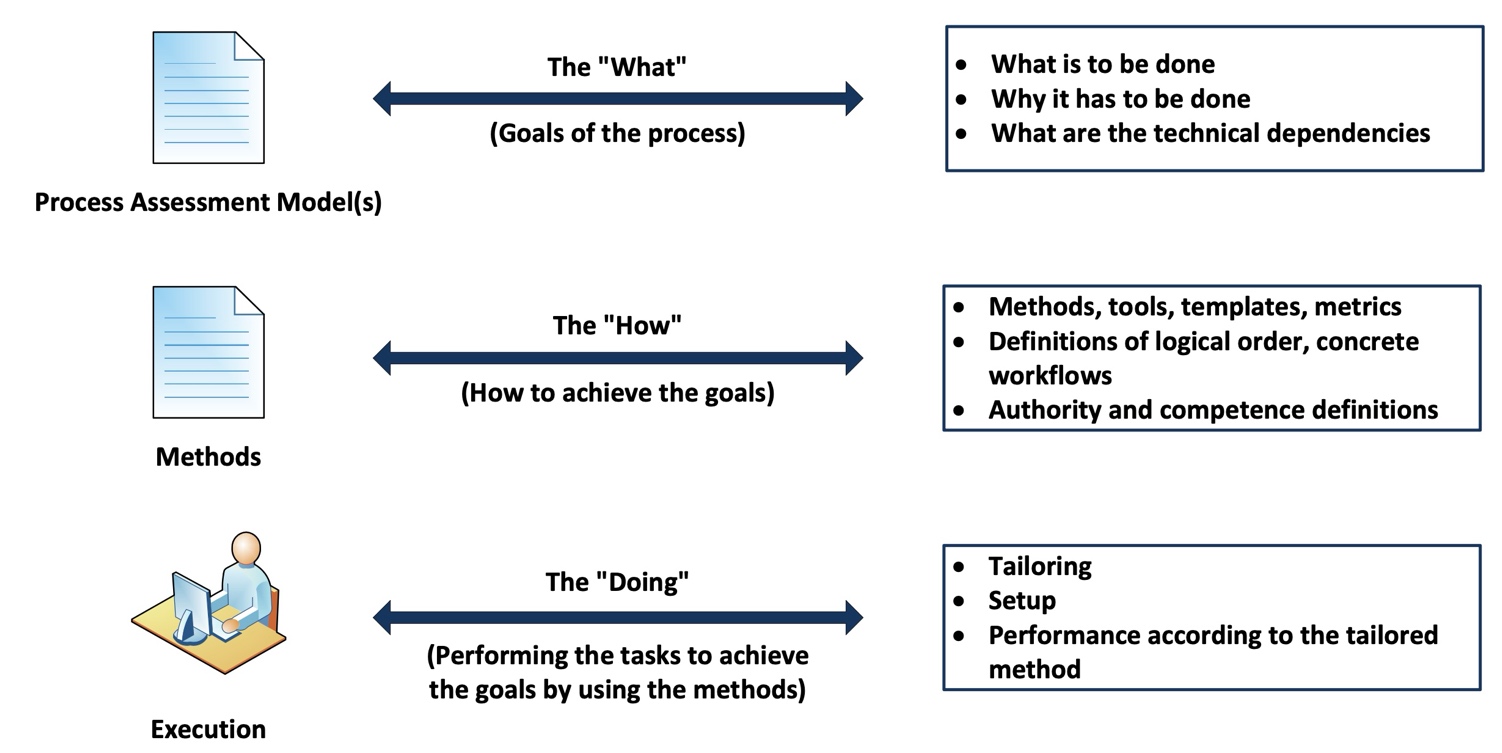

The term “process” can be understood at three levels of abstraction. Note that these levels of abstraction are not meant to define a strict black-or-white split, nor is it the aim to provide a scientific classification schema. The message here is that, in practice, the term “process” is used at different abstraction levels, and that a PAM resides at the highest.

Figure 5 — Possible levels of abstraction for the term “process”

Capturing experience acquired during product development (i.e., at the DOING level) in order to share this experience with others means creating a HOW level. However, a HOW is always specific to a particular context such as a company, an organizational unit, or a product line. For example, the HOW of a project, organizational unit, or company A is potentially not applicable as is to a project, organizational unit, or company B. However, both might be expected to adhere to the principles represented by PAM indicators for process outcomes and process attribute achievements. These indicators are at the WHAT level, while deciding on solutions for concrete templates, proceedings, tooling etc. is left to the HOW level.

3.3.4. Why a PRM and PAM are not a lifecycle model or development process blueprint

A lifecycle model defines phases and activities in a logical temporal order, possibly including cycles or loops, and parallelization. For example, some standards such as ISO 26262 or ISO/SAE 21434 are centered around a lifecycle model (neither of these standards in fact represents a PRM according to ISO/IEC 33004). Companies, organizational units, or projects will interpret such general lifecycle models given in standards, and then detail them into roles, organizational interactions and interfaces, tools or tool chains, work instructions, and artifacts. Lifecycle models therefore are a concept at the HOW level (see Section 3.3.3).

In contrast, a PRM/PAM according to ISO/IEC 33004 (formerly ISO/IEC 15504-2) is at the level of the WHAT by abstracting from any HOW level, see Figure 5 in Section 3.3.3. In Automotive SPICE®, this has been, and is, indicated by the process MAN.3 Project Management requiring in BP2 “Define project life cycle”. A PRM/PAM groups a set of coherent and related characteristics of a particular technical topic and calls it ‘process’. In other words, a process in a PRM represents a ‘distinct conceptual silo’. In this respect, a PRM/PAM

neither predefines, nor discourages, any order in which PRM processes or Base Practices are to be performed. Ultimately, in Automotive SPICE consistency must be fulfilled as required by the traceability/consistency Base Practices in MAN.3 or SYS.x, SWE.x, and HWE.x;

does not predefine any particular work product structure, or work product blueprints. For example, the process SYS.2 does not mean that there shall be exactly one system requirements specification containing everything provided by the stakeholders.

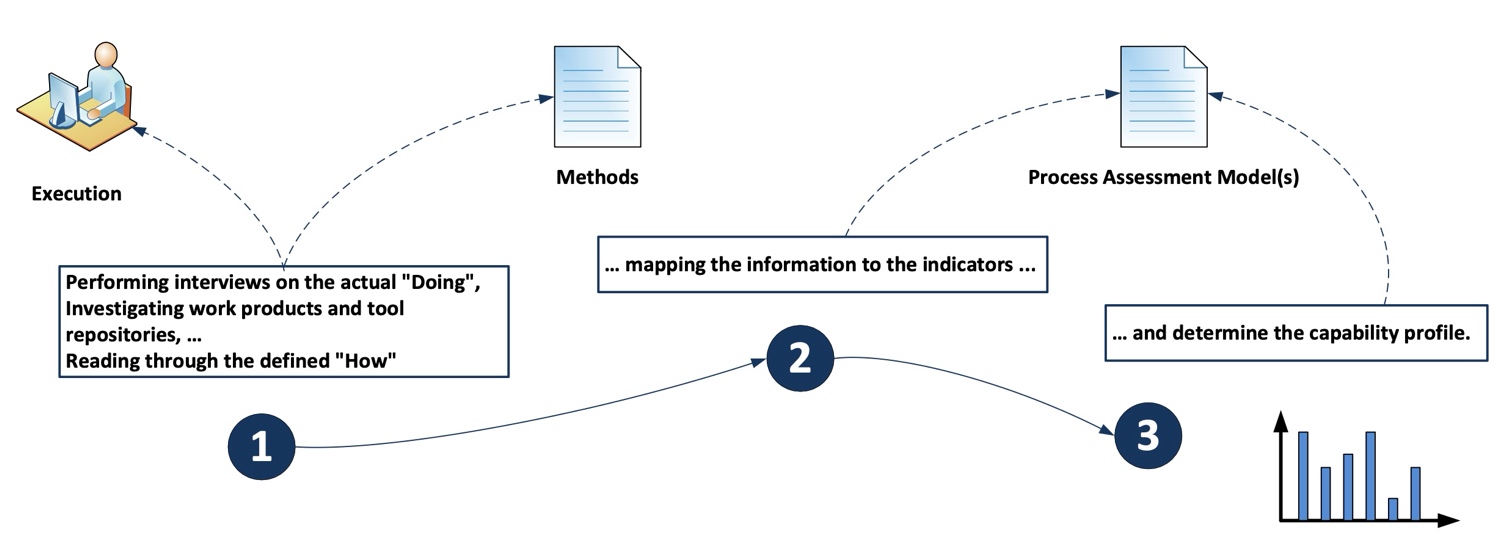

As a consequence, it is the assessor’s responsibility to perform a mapping of elements in such a HOW level to the Assessment Indicators in the PAM, see Figure 6.

Figure 6 — Performing a process assessment for determining process capability

In this respect, a PRM or PAM is also not supposed to represent a product element hierarchy.

4. Process reference model and performance indicators (Level 1)

The processes in the process dimension can be drawn from the Automotive SPICE process reference model, which is incorporated in the tables below and indicated by a red bar on the left side.

Each table related to one process in the process dimension contains the process reference model (indicated by a red bar) and the process performance indicators necessary to define the process assessment model. The process performance indicators consist of base practices (indicated by a green bar) and output information items (indicated by a blue bar).

Process reference model |

|

The individual processes are identified with a unique process identifier and a process name. A process purpose statement is provided, and process outcomes are defined to represent the process dimension of the Automotive SPICE process reference model. The background coloring of process IDs and names indicates the assignment to the corresponding process group. |

Process performance indicators |

|

A set of base practices for the process providing a definition of the activities to be performed to accomplish the process purpose and fulfill the process outcomes. The base practice headers are summarized at the end of a process to demonstrate their relationship to the process outcomes. |

|

The output information items that are relevant to accomplish the process purpose and fulfill the process outcomes are summarized at the end of a process to demonstrate their relationship to the process outcomes.

|

4.1. Acquisition process group (ACQ)

4.1.1. ACQ.4 Supplier Monitoring

Process ID |

ACQ.4 |

Process name |

Supplier Monitoring |

Process purpose |

The purpose is to track and assess the performance of an external contract-based supplier company against agreed commitments. |

Process outcomes |

|

Base Practices |

|---|

ACQ.4.BP1: Agree on and maintain joint activities, joint interfaces, and information to be exchanged. Establish and maintain an agreement on information to be exchanged, on joint activities, joint interfaces, responsibilities, type and frequency of joint activities, communications, meetings, status reports, and reviews. |

ACQ.4.BP2: Exchange all agreed information. Use the defined joint interfaces between customer and supplier for the exchange of all agreed information. |

ACQ.4.BP3: Review development work products with the supplier. Review development work products with the supplier on the agreed regular basis, covering technical aspects, problems and risks. Track open measures.

|

ACQ.4.BP4: Review progress of the supplier. Review progress of the supplier regarding schedule, quality, and cost on the agreed regular basis. Track open measures to closure and perform risk mitigation activities.

|

ACQ.4.BP5: Act to correct deviations. Take action when agreed objectives are not achieved. Negotiate changes to objectives and document them in the agreements. |

ACQ.4 Supplier Monitoring |

Outcome 1 |

Outcome 2 |

Outcome 3 |

Outcome 4 |

|---|---|---|---|---|

Output Information Items |

||||

02-01 Commitment/Agreement |

X |

X |

X |

X |

13-52 Communication evidence |

X |

X |

X |

|

13-09 Meeting support evidence |

X |

X |

||

13-14 Progress status |

X |

X |

||

13-16 Change request |

X |

|||

13-19 Review evidence |

X |

|||

14-02 Corrective action |

X |

|||

15-51 Analysis results |

X |

|||

Base Practices |

||||

BP1: Agree on and maintain joint activities, joint interfaces, and information to be exchanged |

X |

X |

X |

|

BP2: Exchange all agreed information |

X |

X |

X |

|

BP3: Review development work products with the supplier |

X |

X |

X |

|

BP4: Review progress of the supplier |

X |

X |

X |

|

BP5: Act to correct deviations |

X |

X |

4.2. Supply process group (SPL)

4.2.1. SPL.2 Product Release

Process ID |

|---|

SPL.2 |

Process name |

Product Release |

Process purpose |

The purpose is to control the release of a product to the intended customer. |

Process outcomes |

|

Base Practices |

|---|

SPL.2.BP1: Define the functional content of releases. Define the functionality to be included and the release criteria for each release.

|

SPL.2.BP2: Define release package. Define the release as well as supporting tools and information.

|

SPL.2.BP3: Ensure unique identification of releases. Ensure a unique identification of the release based upon the intended purpose and expectations of the release.

|

SPL.2.BP4: Build the release from items under configuration control. Build the release from items under configuration control to ensure integrity.

|

SPL.2.BP5: Ensure release approval before delivery. Criteria for the release are satisfied before delivery takes place. |

SPL.2.BP6: Provide a release note. A release is accompanied by information detailing key characteristics of the release.

|

SPL.2.BP7: Communicate the type, service level and duration of support for a release. Identify and communicate the type, service level and duration of support for a release. |

SPL.2.BP8: Deliver the release package to the intended customer. Deliver the release package to the intended customer.

|

SPL.2 Product Release |

Outcome 1 |

Outcome 2 |

Outcome 3 |

Outcome 4 |

Outcome 5 |

|---|---|---|---|---|---|

Output Information Items |

|||||

11-03 Release note |

X |

X |

X |

X |

|

11-04 Product release package |

X |

X |

|||

13-06 Delivery evidence |

X |

X |

|||

13-13 Product release approval |

X |

X |

|||

18-06 Product release criteria |

X |

X |

X |

||

Base Practices |

|||||

BP1: Define the functional content of releases |

X |

||||

BP2: Define release package |

X |

||||

BP3: Ensure unique identification of releases |

X |

||||

BP4: Build the release from items under configuration control |

X |

||||

BP5: Ensure release approval before delivery |

X |

||||

BP6: Provide a release note |

X |

X |

|||

BP7: Communicate the type, service level and duration of support for a release |

X |

X |

|||

BP8: Deliver the release package to the intended customer |

X |

4.3. System engineering process group (SYS)

4.3.1. SYS.1 Requirements Elicitation

Process ID |

|---|

SYS.1 |

Process name |

Requirements Elicitation |

Process purpose |

The purpose is to gather, analyze, and track evolving stakeholder needs and requirements throughout the lifecycle of the product and/or service to establish a set of agreed requirements. |

Process outcomes |

|

Base Practices |

|---|

SYS.1.BP1: Obtain stakeholder expectations and requests. Obtain and define stakeholder expectations and requests through direct solicitation of stakeholder input, and through review of stakeholder business proposals (where relevant) and other documents containing inputs to stakeholder requirements, and consideration of the target operating and hardware environment.

|

SYS.1.BP2: Agree on requirements. Formalize the stakeholder’s expectations and requests into requirements. Reach a common understanding of the set of stakeholder requirements among affected parties by obtaining an explicit agreement from all affected parties.

|

SYS.1.BP3: Analyze stakeholder requirements changes. Analyze all changes made to the stakeholder requirements against the agreed stakeholder requirements. Assess the impact and risks, and initiate appropriate change control and mitigation actions.

|

SYS.1.BP4: Communicate requirements status. Ensure all affected parties can be aware of the status and disposition of their requirements including changes and can communicate necessary information and data. |

SYS.1 Requirements Elicitation |

Outcome 1 |

Outcome 2 |

Outcome 3 |

Outcome 4 |

|---|---|---|---|---|

Output Information Items |

||||

15-51 Analysis Results |

X |

|||

13-52 Communication Evidence |

X |

X |

||

17-00 Requirement |

X |

|||

17-54 Requirement Attribute |

X |

X |

X |

|

Base Practices |

||||

BP1: Obtain stakeholder expectations and requests |

X |

|||

BP2: Agree on requirements |

X |

|||

BP3: Analyze stakeholder requirements changes |

X |

|||

BP4: Communicate requirements status |

X |

X |

4.3.2. SYS.2 System Requirements Analysis

Process ID |

|---|

SYS.2 |

Process name |

System Requirements Analysis |

Process purpose |

The purpose is to establish a structured and analyzed set of system requirements consistent with the stakeholder requirements. |

Process outcomes |

|

Base Practices |

|---|

SYS.2.BP1: Specify system requirements. Use the stakeholder requirements to identify and document the functional and non-functional requirements for the system according to defined characteristics for requirements.

|

SYS.2.BP2: Structure system requirements. Structure and prioritize the system requirements.

|

SYS.2.BP3: Analyze system requirements. Analyze the specified system requirements including their interdependencies to ensure correctness, technical feasibility, and to support project management regarding project estimates.

|

SYS.2.BP4: Analyze the impact on the system context. Analyze the impact that the system requirements will have on elements in the relevant system context. |

SYS.2.BP5: Ensure consistency and establish bidirectional traceability. Ensure consistency and establish bidirectional traceability between system requirements and stakeholder requirements.

|

SYS.2.BP6: Communicate agreed system requirements and impact on the system context. Communicate the agreed system requirements, and results of the impact analysis on the system context, to all affected parties. |

SYS.2 System Requirements Analysis |

Outcome 1 |

Outcome 2 |

Outcome 3 |

Outcome 4 |

Outcome 5 |

Outcome 6 |

|---|---|---|---|---|---|---|

Output Information Items |

||||||

17-00 Requirement |

X |

X |

||||

17-54 Requirement Attribute |

X |

X |

||||

15-51 Analysis Results |

X |

X |

||||

13-51 Consistency Evidence |

X |

|||||

13-52 Communication Evidence |

X |

|||||

Base Practices |

||||||

BP1: Specify system requirements |

X |

|||||

BP2: Structure system requirements |

X |

|||||

BP3: Analyze system requirements |

X |

|||||

BP4: Analyze the impact on the system context |

X |

|||||

BP5: Ensure consistency and establish bidirectional traceability |

X |

|||||

BP6: Communicate agreed system requirements and impact on the system context |

X |
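
The bidirectional traceability named in SYS.2.BP5 can be pictured as a set of links that is checked from both ends. The Python sketch below is only an illustration under stated assumptions: the requirement IDs, the link representation as (stakeholder ID, system ID) pairs, and the function name `traceability_gaps` are hypothetical, and whether a reported gap is acceptable remains a matter of assessor judgment in the given context.

```python
def traceability_gaps(stakeholder_ids, system_ids, links):
    """Report requirements without links and links that point to unknown IDs.

    links is an iterable of (stakeholder_id, system_id) pairs.
    """
    stakeholder_ids, system_ids = set(stakeholder_ids), set(system_ids)
    links = set(links)
    # Only links whose both ends exist count towards coverage.
    valid = {(s, y) for s, y in links if s in stakeholder_ids and y in system_ids}
    return {
        "stakeholder_without_system": sorted(stakeholder_ids - {s for s, _ in valid}),
        "system_without_stakeholder": sorted(system_ids - {y for _, y in valid}),
        "dangling_links": sorted(links - valid),
    }


gaps = traceability_gaps(
    stakeholder_ids=["STK-1", "STK-2"],
    system_ids=["SYS-10", "SYS-11", "SYS-12"],
    links=[("STK-1", "SYS-10"), ("STK-1", "SYS-11"), ("STK-3", "SYS-12")],
)
```

In this made-up data set, STK-2 has no system requirement linked to it, SYS-12 traces back to no known stakeholder requirement, and the link from STK-3 points to an ID that does not exist.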

4.3.3. SYS.3 System Architectural Design

Process ID |

|---|

SYS.3 |

Process name |

System Architectural Design |

Process purpose |

The purpose is to establish an analyzed system architecture, comprising static and dynamic aspects, consistent with the system requirements. |

Process outcomes |

|

Base Practices |

|---|

SYS.3.BP1: Specify static aspects of the system architecture. Specify and document the static aspects of the system architecture with respect to the functional and non-functional system requirements, including external interfaces and a defined set of system elements with their interfaces and relationships. |

SYS.3.BP2: Specify dynamic aspects of the system architecture. Specify and document the dynamic aspects of the system architecture with respect to the functional and non-functional system requirements including the behavior of the system elements and their interaction in different system modes.

|

SYS.3.BP3: Analyze system architecture. Analyze the system architecture regarding relevant technical design aspects related to the product lifecycle, and to support project management regarding project estimates, and derive special characteristics for non-software system elements. Document a rationale for the system architectural design decisions.

|

SYS.3.BP4: Ensure consistency and establish bidirectional traceability. Ensure consistency and establish bidirectional traceability between the elements of the system architecture and the system requirements that represent properties or characteristics of the physical end product.

|

SYS.3.BP5: Communicate agreed system architecture. Communicate the agreed system architecture, including the special characteristics, to all affected parties. |

SYS.3 System Architectural Design |

Outcome 1 |

Outcome 2 |

Outcome 3 |

Outcome 4 |

|---|---|---|---|---|

Output Information Items |

||||

04-06 System Architecture |

X |

|||

13-51 Consistency Evidence |

X |

|||

13-52 Communication Evidence |

X |

|||

15-51 Analysis Results |

X |

|||

17-57 Special Characteristics |

X |

|||

Base Practices |

||||

BP1: Specify static aspects of system architecture |

X |

|||

BP2: Specify dynamic aspects of system architecture |

X |

|||

BP3: Analyze the system architecture |

X |

|||

BP4: Ensure consistency and establish bidirectional traceability |

X |

|||

BP5: Communicate agreed system architecture |

X |

4.3.4. SYS.4 System Integration and Integration Verification

Process ID |

|---|

SYS.4 |

Process name |

System Integration and Integration Verification |

Process purpose |

The purpose is to integrate system elements and verify that the integrated system elements are consistent with the system architecture. |

Process outcomes |

|

Base Practices |

|---|

SYS.4.BP1: Specify verification measures for system integration. Specify the verification measures, based on a defined sequence and preconditions for the integration of system elements against the system static and dynamic aspects of the system architecture, including

|

SYS.4.BP2: Select verification measures. Document the selection of verification measures for each integration step considering selection criteria including criteria for regression verification. The documented selection of verification measures shall have sufficient coverage according to the release scope.

|

SYS.4.BP3: Integrate system elements and perform integration verification. Integrate the system elements until the system is fully integrated according to the specified interfaces and interactions between the system elements, and according to the defined sequence and defined preconditions. Perform the selected system integration verification measures. Record the verification results including pass/fail status and corresponding verification measure data.

|

SYS.4.BP4: Ensure consistency and establish bidirectional traceability. Ensure consistency and establish bidirectional traceability between verification measures and the system architecture. Establish bidirectional traceability between verification results and verification measures.

|

SYS.4.BP5: Summarize and communicate results. Summarize the system integration and integration verification results and communicate them to all affected parties.

|

SYS.4 System Integration and Integration Verification |

Outcome 1 |

Outcome 2 |

Outcome 3 |

Outcome 4 |

Outcome 5 |

Outcome 6 |

Outcome 7 |

|---|---|---|---|---|---|---|---|

Output Information Items |

|||||||

08-60 Verification Measure |

X |

||||||

06-50 Integration Sequence Instruction |

X |

||||||

03-50 Verification Measure Data |

X |

||||||

08-58 Verification Measure Selection Set |

X |

||||||

15-52 Verification Results |

X |

||||||

13-51 Consistency Evidence |

X |

X |

|||||

13-52 Communication Evidence |

X |

||||||

11-06 Integrated System |

X |

||||||

Base Practices |

|||||||

BP1: Specify verification measures for system integration |

X |

||||||

BP2: Select verification measures |

X |

||||||

BP3: Integrate system elements and perform integration verification |

X |

X |

|||||

BP4: Ensure consistency and establish bidirectional traceability |

X |

X |

|||||

BP5: Summarize and communicate results |

X |
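
The "defined sequence and defined preconditions" of SYS.4.BP3, together with the recording of pass/fail status, can be pictured with the following sketch. It assumes that preconditions are expressed as "element X requires elements Y to be integrated first" and derives an integration order from them. The names `VerificationResult`, `integration_sequence`, and `integrate`, and the measure identifiers `VM-001` and `VM-002`, are hypothetical and not taken from the model.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter
from typing import Callable

# A check returns (pass/fail status, verification measure data).
Check = Callable[[], tuple[bool, str]]


@dataclass
class VerificationResult:
    step: str        # system element integrated in this step
    measure_id: str  # verification measure that was performed
    passed: bool     # pass/fail status
    data: str        # corresponding verification measure data


def integration_sequence(preconditions: dict[str, set[str]]) -> list[str]:
    """Order elements so that every precondition is integrated first.

    Raises graphlib.CycleError if the preconditions are circular.
    """
    return list(TopologicalSorter(preconditions).static_order())


def integrate(
    preconditions: dict[str, set[str]],
    measures: dict[str, list[tuple[str, Check]]],
) -> list[VerificationResult]:
    """Walk the sequence and record a result for every selected measure."""
    results = []
    for element in integration_sequence(preconditions):
        for measure_id, check in measures.get(element, []):
            passed, data = check()
            results.append(VerificationResult(element, measure_id, passed, data))
    return results


# Hypothetical example: each element maps to the elements it depends on.
preconditions = {
    "power_supply": set(),
    "ecu": {"power_supply"},
    "sensor": {"ecu"},
}
measures = {
    "ecu": [("VM-001", lambda: (True, "supply voltage 12.1 V"))],
    "sensor": [("VM-002", lambda: (False, "no CAN frame within 100 ms"))],
}
results = integrate(preconditions, measures)
for r in results:
    print(r.step, r.measure_id, "pass" if r.passed else "fail", r.data)
```

Keeping the measure identifier in every result record is what later allows results to be traced back to verification measures, as SYS.4.BP4 asks.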

4.3.5. SYS.5 System Verification

Process ID |

|---|

SYS.5 |

Process name |

System Verification |

Process purpose |

The purpose is to ensure that the system is verified to be consistent with the system requirements. |

Process outcomes |

|

Base Practices |

|---|

SYS.5.BP1: Specify verification measures for system verification. Specify the verification measures for system verification suitable to provide evidence for compliance with the functional and non-functional information in the system requirements, including

|

SYS.5.BP2: Select verification measures. Document the selection of verification measures considering selection criteria including criteria for regression verification. The selection of verification measures shall have sufficient coverage according to the release scope.

|

SYS.5.BP3: Perform verification of the integrated system. Perform the verification of the integrated system using the selected verification measures. Record the verification results including pass/fail status and corresponding verification measure data.

|

SYS.5.BP4: Ensure consistency and establish bidirectional traceability. Ensure consistency and establish bidirectional traceability between verification measures and system requirements. Establish bidirectional traceability between verification results and verification measures.

|

SYS.5.BP5: Summarize and communicate results. Summarize the system verification results and communicate them to all affected parties.

|

SYS.5 System Verification |

Outcome 1 |

Outcome 2 |

Outcome 3 |

Outcome 4 |

Outcome 5 |

Outcome 6 |

|---|---|---|---|---|---|---|

Output Information Items |

||||||

08-60 Verification Measure |

X |

|||||

03-50 Verification Measure Data |

X |

|||||

08-58 Verification Measure Selection Set |

X |

|||||

15-52 Verification Results |

X |

|||||

13-51 Consistency Evidence |

X |

X |

||||

13-52 Communication Evidence |

X |

|||||

Base Practices |

||||||

BP1: Specify verification measures for system verification |

X |

|||||

BP2: Select verification measures |

X |

|||||

BP3: Perform verification of the integrated system |

X |

|||||

BP4: Ensure consistency and establish bidirectional traceability |

X |

X |

||||

BP5: Summarize and communicate results |

X |
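
SYS.5.BP2 asks for a documented selection of verification measures with sufficient coverage of the release scope, including criteria for regression verification. The sketch below shows one possible way to compute such a selection and to report which in-scope requirements remain uncovered. The selection criteria used here (select measures that touch a changed requirement, plus measures flagged for regression) are an assumption made for illustration; the model does not prescribe them. All class, function, and identifier names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VerificationMeasure:
    measure_id: str
    requirements: frozenset[str]  # system requirements the measure verifies
    regression: bool = False      # hypothetical flag: part of the regression set


def select_measures(measures, release_scope, changed):
    """Return (selection, uncovered in-scope requirements).

    Assumed criteria: a measure is selected if it verifies an in-scope
    requirement that changed, or if it is flagged for regression and
    verifies any in-scope requirement.
    """
    selection = []
    for m in measures:
        in_scope = m.requirements & release_scope
        if not in_scope:
            continue  # measure lies outside the release scope
        if in_scope & changed or m.regression:
            selection.append(m)
    covered = set().union(*(m.requirements for m in selection)) & release_scope
    return selection, sorted(release_scope - covered)


measures = [
    VerificationMeasure("VM-10", frozenset({"REQ-1"}), regression=True),
    VerificationMeasure("VM-11", frozenset({"REQ-2"})),
    VerificationMeasure("VM-12", frozenset({"REQ-3"})),
    VerificationMeasure("VM-13", frozenset({"REQ-9"}), regression=True),
]
selection, uncovered = select_measures(
    measures,
    release_scope={"REQ-1", "REQ-2", "REQ-3"},
    changed={"REQ-2"},
)
print([m.measure_id for m in selection])
print(uncovered)
```

In this example `REQ-3` is reported as uncovered, which would prompt either a wider selection or a documented justification for the gap.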

4.4. Software engineering process group (SWE)

4.4.1. SWE.1 Software Requirements Analysis

Process ID |

|---|

SWE.1 |

Process name |

Software Requirements Analysis |

Process purpose |

The purpose is to establish a structured and analyzed set of software requirements consistent with the system requirements and the system architecture. |

Process outcomes |

|

Base Practices |

|---|

SWE.1.BP1: Specify software requirements. Use the system requirements and the system architecture to identify and document the functional and non-functional requirements for the software according to defined characteristics for requirements.

|

SWE.1.BP2: Structure software requirements. Structure and prioritize the software requirements.

|

SWE.1.BP3: Analyze software requirements. Analyze the specified software requirements, including their interdependencies, to ensure correctness and technical feasibility, and to support project management regarding project estimates.

|

SWE.1.BP4: Analyze the impact on the operating environment. Analyze the impact that the software requirements will have on elements in the operating environment. |

SWE.1.BP5: Ensure consistency and establish bidirectional traceability. Ensure consistency and establish bidirectional traceability between software requirements and system architecture. Ensure consistency and establish bidirectional traceability between software requirements and system requirements.

|

SWE.1.BP6: Communicate agreed software requirements and impact on the operating environment. Communicate the agreed software requirements, and the results of the analysis of impact on the operating environment, to all affected parties. |

SWE.1 Software Requirements Analysis |

Outcome 1 |

Outcome 2 |

Outcome 3 |

Outcome 4 |

Outcome 5 |

Outcome 6 |

Outcome 7 |

|---|---|---|---|---|---|---|---|

Output Information Items |

|||||||

17-00 Requirement |

X |

X |

|||||

17-54 Requirement Attribute |

X |

||||||

15-51 Analysis Results |

X |

X |

|||||

13-51 Consistency Evidence |

X |

X |

|||||

13-52 Communication Evidence |

X |

||||||

Base Practices |

|||||||

BP1: Specify software requirements |

X |

||||||

BP2: Structure software requirements |

X |

||||||

BP3: Analyze software requirements |

X |

||||||

BP4: Analyze the impact on the operating environment |

X |

||||||

BP5: Ensure consistency and establish bidirectional traceability |

X |

X |

|||||

BP6: Communicate agreed software requirements and impact on the operating environment |

X |
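
To make SWE.1.BP2 more concrete, the following sketch groups software requirements by a structuring attribute and orders each group by priority. The grouping attribute, the priority scale (1 = highest), and all names are assumptions chosen for the example; the model leaves the structuring and prioritization scheme to the project.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class SoftwareRequirement:
    req_id: str
    text: str
    group: str     # hypothetical structuring attribute, e.g. a feature
    priority: int  # hypothetical scale: 1 = highest priority


def structure(requirements):
    """Group requirements, then order each group by priority and ID."""
    grouped = defaultdict(list)
    for req in requirements:
        grouped[req.group].append(req)
    return {
        group: sorted(reqs, key=lambda r: (r.priority, r.req_id))
        for group, reqs in sorted(grouped.items())
    }


requirements = [
    SoftwareRequirement("SW-REQ-3", "Log diagnostic events", "diagnostics", 2),
    SoftwareRequirement("SW-REQ-1", "Debounce the door switch input", "door_control", 1),
    SoftwareRequirement("SW-REQ-2", "Report door state on the bus", "door_control", 2),
    SoftwareRequirement("SW-REQ-4", "Clear fault memory on request", "diagnostics", 1),
]
for group, reqs in structure(requirements).items():
    print(group, [r.req_id for r in reqs])
```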

4.4.2. SWE.2 Software Architectural Design

Process ID |

|---|

SWE.2 |

Process name |

Software Architectural Design |

Process purpose |

The purpose is to establish an analyzed software architecture, comprising static and dynamic aspects, consistent with the software requirements. |

Process outcomes |

|

Base Practices |

|---|

SWE.2.BP1: Specify static aspects of the software architecture. Specify and document the static aspects of the software architecture with respect to the functional and non-functional software requirements, including external interfaces and a defined set of software components with their interfaces and relationships.

|

SWE.2.BP2: Specify dynamic aspects of the software architecture. Specify and document the dynamic aspects of the software architecture with respect to the functional and non-functional software requirements, including the behavior of the software components and their interaction in different software modes, and concurrency aspects.

|

SWE.2.BP3: Analyze software architecture. Analyze the software architecture regarding relevant technical design aspects and to support project management regarding project estimates. Document a rationale for the software architectural design decisions.

|

SWE.2.BP4: Ensure consistency and establish bidirectional traceability. Ensure consistency and establish bidirectional traceability between the software architecture and the software requirements.

|

SWE.2.BP5: Communicate agreed software architecture. Communicate the agreed software architecture to all affected parties. |

SWE.2 Software Architectural Design |

Outcome 1 |

Outcome 2 |

Outcome 3 |

Outcome 4 |

|---|---|---|---|---|

Output Information Items |

||||

04-04 Software Architecture |

X |

|||

13-51 Consistency Evidence |

X |

|||

13-52 Communication Evidence |

X |

|||

15-51 Analysis Results |

X |

|||

Base Practices |

||||

BP1: Specify static aspects of the software architecture |

X |

|||

BP2: Specify dynamic aspects of the software architecture |

X |

|||

BP3: Analyze software architecture |

X |

|||

BP4: Ensure consistency and establish bidirectional traceability |

X |